Self-attention scaling affects the efficiency of generative AI in text generation because it determines how the cost of the attention mechanism grows with input length. In standard self-attention, every token attends to every other token, so for a sequence of n tokens the compute and memory needed for the attention scores grow quadratically, as O(n²).

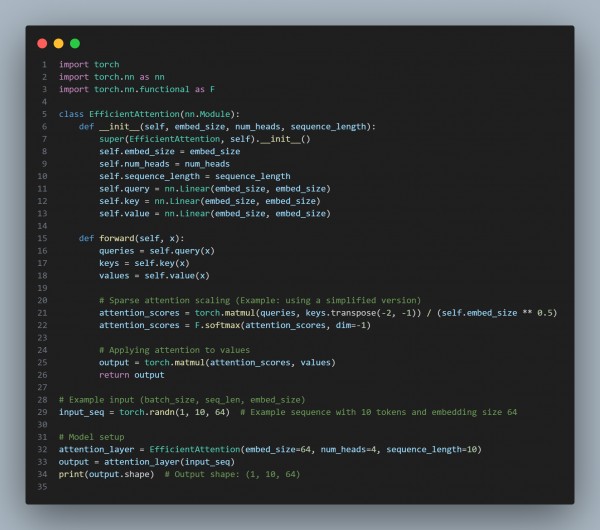

Here is the code snippet you can refer to:
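As a minimal sketch in plain Python (not an optimized or library implementation), dense scaled dot-product self-attention can be written so that it also counts the query-key scores it computes. The names `softmax`, `self_attention`, and the counter `n_scores` are illustrative assumptions, not part of any specific framework.

```python
import math

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(q, k, v):
    """Dense scaled dot-product attention over lists of vectors.

    q, k, v: lists of n vectors (q and k share dimension d).
    Returns (outputs, n_scores), where n_scores is the number of
    query-key dot products that were computed.
    """
    d = len(q[0])
    scale = 1.0 / math.sqrt(d)
    outputs = []
    n_scores = 0
    for qi in q:
        # One score per (query, key) pair: n per query, n * n in total.
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
        n_scores += len(scores)
        weights = softmax(scores)
        # Each output is the attention-weighted sum of the value vectors.
        out = [sum(w * vj[c] for w, vj in zip(weights, v))
               for c in range(len(v[0]))]
        outputs.append(out)
    return outputs, n_scores

if __name__ == "__main__":
    for n in (4, 8, 16):
        x = [[0.1 * i, 1.0] for i in range(n)]  # toy token vectors
        _, n_scores = self_attention(x, x, x)
        print(n, n_scores)  # 4 16, then 8 64, then 16 256
```

Doubling the sequence length from 8 to 16 raises the number of scores from 64 to 256, which is the quadratic growth described above.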

The code above illustrates the following key points:

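- Self-Attention Scaling: As input length grows, the self-attention mechanism's computational complexity increases quadratically, so doubling the sequence length roughly quadruples the cost of computing attention scores.

- Efficiency Techniques: Methods like sparse attention restrict each token to a subset of positions instead of the full sequence, which lowers the computational cost and improves efficiency for longer sequences.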

- Reduced Latency: Optimizing self-attention scaling helps reduce inference time, allowing for faster text generation.
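To make the sparse attention point concrete, here is a hedged sketch of one common sparse pattern, a causal sliding window, in which each token attends only to itself and a fixed number of preceding tokens. The choice of this pattern is an assumption made for illustration, and the names `sliding_window_attention`, `window`, and `n_scores` are hypothetical.

```python
import math

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def sliding_window_attention(q, k, v, window):
    """Causal local attention: token i sees tokens i-window+1 .. i.

    Returns (outputs, n_scores), where n_scores counts the
    query-key dot products actually computed.
    """
    d = len(q[0])
    scale = 1.0 / math.sqrt(d)
    outputs = []
    n_scores = 0
    for i, qi in enumerate(q):
        start = max(0, i - window + 1)
        keys = k[start:i + 1]   # at most `window` keys per query
        vals = v[start:i + 1]
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in keys]
        n_scores += len(scores)
        weights = softmax(scores)
        out = [sum(w * vj[c] for w, vj in zip(weights, vals))
               for c in range(len(v[0]))]
        outputs.append(out)
    return outputs, n_scores

if __name__ == "__main__":
    x = [[0.1 * i, 1.0] for i in range(8)]  # toy token vectors
    _, local = sliding_window_attention(x, x, x, window=3)
    _, full = sliding_window_attention(x, x, x, window=len(x))
    print(local, full)  # 21 36
```

With a fixed window the number of scores grows roughly linearly with sequence length (about n times the window size) rather than quadratically, at the price of each token no longer seeing the whole context directly.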
