Self-conditioning in Generative AI improves text generation by allowing the model to use its previous outputs as context for generating subsequent words.

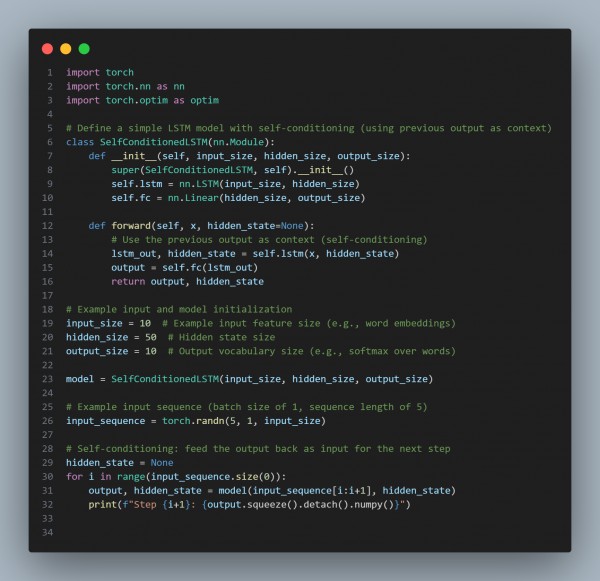

The code snippet above (shown as an image) relies on the following key points:

- Contextual Awareness: Self-conditioning enables the model to reference its previous outputs for better continuity.

- Improved Coherence: It helps maintain long-term dependencies in generated text sequences.

- Recurrent Output Feedback: The model feeds its previous outputs back into the next generation step, maintaining context.

- Better Text Flow: Reduces the risk of generating disjointed or inconsistent text over longer sequences.
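The feedback loop behind these points can be sketched in plain Python. This is not the code from the image. It is a minimal toy sketch that assumes a hypothetical hand-written transition table (`TRANSITIONS`) standing in for a trained model, plus greedy decoding. `generate_self_conditioned` appends each output to the context and feeds it back in. `generate_without_feedback` is a hypothetical baseline that only ever sees the original prompt.

```python
from typing import Dict, List

# Hypothetical toy "model": scores for the next token given the previous token.
# A real generative model would compute these scores with a neural network
# over the whole context; this table is only for illustration.
TRANSITIONS: Dict[str, Dict[str, float]] = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"model": 0.6, "text": 0.4},
    "a": {"model": 1.0},
    "model": {"generates": 0.8, "reads": 0.2},
    "reads": {"text": 1.0},
    "generates": {"text": 1.0},
    "text": {"</s>": 1.0},
}


def next_token(context: List[str]) -> str:
    """Greedily pick the highest-scoring next token.

    The toy model only looks at the most recent token in the context.
    """
    scores = TRANSITIONS.get(context[-1], {"</s>": 1.0})
    return max(scores, key=scores.get)


def generate_self_conditioned(prompt: List[str], max_len: int = 10) -> List[str]:
    """Each generated token is appended to the context and fed back in."""
    context = list(prompt)
    output: List[str] = []
    for _ in range(max_len):
        token = next_token(context)  # conditioned on the model's own latest output
        if token == "</s>":
            break
        output.append(token)
        context.append(token)  # recurrent output feedback
    return output


def generate_without_feedback(prompt: List[str], max_len: int = 10) -> List[str]:
    """Baseline: every step sees only the original prompt, never its own outputs."""
    output: List[str] = []
    for _ in range(max_len):
        token = next_token(prompt)
        if token == "</s>":
            break
        output.append(token)
    return output


if __name__ == "__main__":
    print(generate_self_conditioned(["<s>"]))
    print(generate_without_feedback(["<s>"], max_len=4))
```

With this table, the self-conditioned loop produces `['the', 'model', 'generates', 'text']` and stops at `</s>`. The baseline keeps emitting `'the'` because it never sees what it already produced. A real model would replace `next_token` with a network that attends to the full context, but the feedback step (`context.append(token)`) stays the same.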

Hence, by referring to the above, you can see how self-conditioning benefits Generative AI in recurrent text generation.
