Dropout regularization helps prevent overfitting by randomly deactivating neurons during training. This is especially useful in low-resource settings, where limited data makes it easy for a model to memorize the training set, because it pushes the model to generalize to unseen data.

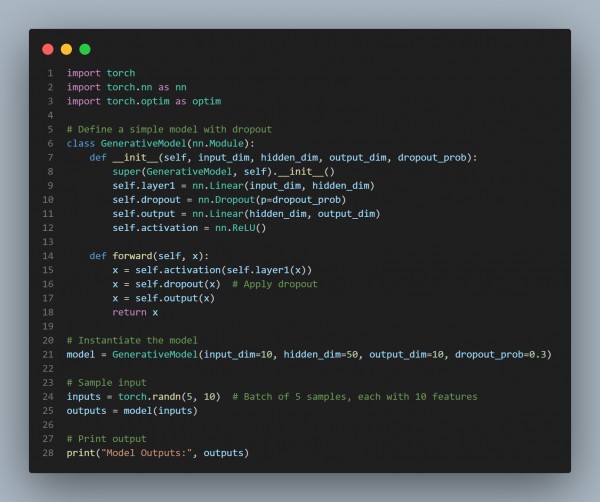

Here is the code snippet you can refer to:
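
A minimal sketch of such a snippet, assuming PyTorch and a small feed-forward generator. The class name `SmallGenerator`, the layer sizes, and the dropout rate of 0.3 are illustrative assumptions, not values taken from the original answer:

```python
import torch
import torch.nn as nn


class SmallGenerator(nn.Module):
    """Hypothetical feed-forward generator with dropout after each hidden layer."""

    def __init__(self, latent_dim=16, hidden_dim=64, out_dim=32, p_drop=0.3):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(latent_dim, hidden_dim),
            nn.ReLU(),
            nn.Dropout(p=p_drop),  # randomly zeroes activations while training
            nn.Linear(hidden_dim, hidden_dim),
            nn.ReLU(),
            nn.Dropout(p=p_drop),
            nn.Linear(hidden_dim, out_dim),
        )

    def forward(self, z):
        return self.net(z)


torch.manual_seed(0)
model = SmallGenerator()
z = torch.randn(4, 16)  # a batch of 4 latent vectors

# Training mode: dropout is active, so two passes over the same input differ.
model.train()
out_train_a = model(z)
out_train_b = model(z)

# Evaluation mode: dropout is disabled, so the output is deterministic.
model.eval()
with torch.no_grad():
    out_eval_a = model(z)
    out_eval_b = model(z)
```

Remember to call `model.eval()` before validation or generation; otherwise dropout stays active and the outputs remain noisy.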

The code above relies on the following key points:

- Dropout Layer: `nn.Dropout` introduces stochasticity into training by randomly zeroing activations; it is inactive in evaluation mode.

- Prevention of Overfitting: Encourages better generalization, crucial in low-resource settings with limited data.

- Model Robustness: Maintains performance on unseen data by reducing reliance on specific neurons.
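
The behaviour described in these points can be checked directly on a single layer. The sketch below assumes PyTorch's `nn.Dropout` with an illustrative rate of 0.5 applied to a tensor of ones: in training mode each element is either zeroed or scaled by 1 / (1 - p), and in evaluation mode the layer passes its input through unchanged.

```python
import torch
import torch.nn as nn

drop = nn.Dropout(p=0.5)  # p is the probability of zeroing an element
x = torch.ones(1000)

drop.train()
y_train = drop(x)  # each element is 0.0 or 1 / (1 - 0.5) = 2.0

drop.eval()
y_eval = drop(x)   # identity: dropout is disabled at evaluation time

# Fraction of elements that survived in training mode (roughly 1 - p).
kept_fraction = (y_train != 0).float().mean().item()
print(f"kept fraction: {kept_fraction:.2f}")
```

The rescaling of surviving activations keeps the expected value of each activation the same in training and evaluation, which is why no extra scaling is needed at inference time.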

Hence, dropout regularization enhances generalization, making Generative AI models more robust and effective in low-resource settings.
