Adaptive learning rates improve the convergence of Generative AI models by adjusting the step size during training. Steps that are too large overshoot the minimum and steps that are too small converge slowly, so adapting the rate lets the model learn more efficiently on large datasets.

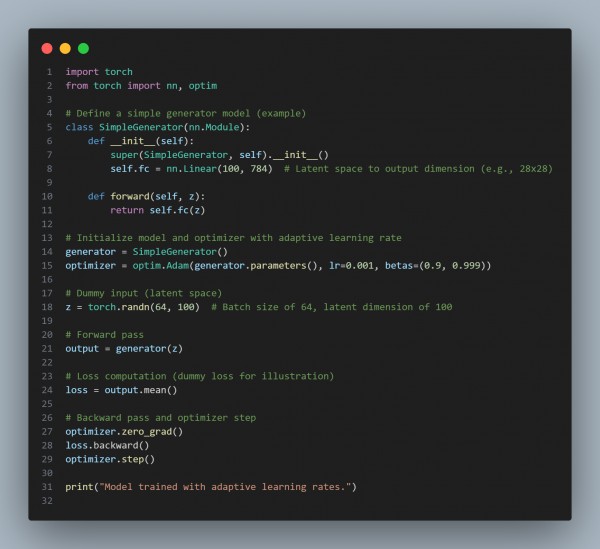

The code above relies on the following key points:

- Dynamic Learning Rate: Adaptive optimizers scale each parameter's step size using running statistics of its gradients, instead of using one fixed rate for all parameters.

- Faster Convergence: The model usually needs fewer updates to reach a good loss, which matters most when each pass over a large dataset is expensive.

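- Stable Updates: Adaptive methods like Adam shrink the step where gradients are large and enlarge it where gradients are small, which reduces overshooting and stalled training. They do not prevent overfitting on their own, so regularization and validation monitoring are still needed.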

- Scalability: Because step sizes are tuned per parameter, complex models with many differently scaled parameters are easier to train on large datasets.
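To make the points above concrete, here is a minimal sketch in plain Python that compares one plain gradient descent step with one Adam step. The toy loss, the `CURVATURE` values, the learning rates, and the helper names `sgd_step`, `adam_step`, and `gradient` are all assumptions chosen for illustration; they are not taken from the code in the image.

```python
import math

def sgd_step(w, grads, lr):
    """Plain gradient descent: one shared step size for every parameter."""
    return [wi - lr * gi for wi, gi in zip(w, grads)]

def adam_step(w, grads, state, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; `state` holds the step count t and moment estimates m, v."""
    state["t"] += 1
    t = state["t"]
    new_w = []
    for i, (wi, gi) in enumerate(zip(w, grads)):
        # Running averages of the gradient and of the squared gradient
        state["m"][i] = beta1 * state["m"][i] + (1 - beta1) * gi
        state["v"][i] = beta2 * state["v"][i] + (1 - beta2) * gi * gi
        # Bias correction for the zero-initialized averages
        m_hat = state["m"][i] / (1 - beta1 ** t)
        v_hat = state["v"][i] / (1 - beta2 ** t)
        # Per-parameter step: large gradients are scaled down, small ones up
        new_w.append(wi - lr * m_hat / (math.sqrt(v_hat) + eps))
    return new_w

# Hypothetical toy loss: f(w) = 0.5 * (100 * w[0]**2 + 0.01 * w[1]**2)
CURVATURE = [100.0, 0.01]

def gradient(w):
    return [c * wi for c, wi in zip(CURVATURE, w)]

w0 = [1.0, 1.0]
g0 = gradient(w0)  # one very steep direction, one very flat direction

# A fixed rate small enough for the steep direction barely moves the flat one
sgd_w = sgd_step(w0, g0, lr=0.001)

adam_state = {"t": 0, "m": [0.0, 0.0], "v": [0.0, 0.0]}
adam_w = adam_step(w0, g0, adam_state, lr=0.1)

print("SGD :", sgd_w)
print("Adam:", adam_w)
```

On its first step, bias-corrected Adam moves every parameter by roughly `lr` regardless of gradient magnitude, so both coordinates make comparable progress, while the fixed-rate step leaves the flat direction almost unchanged. In practice you would normally use a library optimizer rather than hand-written updates; this sketch only shows the mechanism.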

Hence, adaptive learning rates help Generative AI models converge faster and more stably on large datasets.
