Embedding sparsity affects memory and efficiency in large generative models in the following ways:

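- Memory Efficiency: Sparse embeddings reduce memory usage because only the non-zero values and their indices need to be stored, rather than every entry of the embedding matrix.

- Computational Efficiency: Sparse operations (e.g., sparse matrix multiplications) skip zero entries, which reduces the number of computations and can speed up training and inference.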

- Regularization: Sparsity acts as a form of regularization, potentially improving generalization.
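
As a rough illustration of the memory and compute points above, here is a minimal pure-Python sketch. It assumes a CSR-style layout (values, column indices, row pointers) and a randomly generated embedding matrix at about 5% density. The helper names `to_csr`, `sparse_row_dot`, and `make_sparse_embeddings` are hypothetical and are not taken from any library.

```python
import random


def make_sparse_embeddings(vocab_size, dim, density, seed=0):
    """Build a toy embedding matrix where roughly `density` of entries are non-zero."""
    rng = random.Random(seed)
    return [
        [rng.uniform(-1, 1) if rng.random() < density else 0.0 for _ in range(dim)]
        for _ in range(vocab_size)
    ]


def to_csr(dense):
    """Convert a dense matrix (list of rows) into CSR-style arrays."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, v in enumerate(row):
            if v != 0.0:
                values.append(v)   # the non-zero weight
                col_idx.append(j)  # the column it lives in
        row_ptr.append(len(values))  # where the next row starts
    return values, col_idx, row_ptr


def sparse_row_dot(csr, row, vec):
    """Dot product of one embedding row with `vec`, touching only non-zeros.

    Returns the result and the number of multiplications performed.
    """
    values, col_idx, row_ptr = csr
    start, end = row_ptr[row], row_ptr[row + 1]
    total = 0.0
    for k in range(start, end):
        total += values[k] * vec[col_idx[k]]
    return total, end - start


vocab_size, dim = 1000, 64
dense = make_sparse_embeddings(vocab_size, dim, density=0.05)
csr = to_csr(dense)
values, col_idx, row_ptr = csr

# Count how many numbers each representation has to store.
dense_entries = vocab_size * dim
sparse_entries = len(values) + len(col_idx) + len(row_ptr)
print(f"dense entries stored:  {dense_entries}")
print(f"sparse entries stored: {sparse_entries}")

# Compare the work needed for one row-vector dot product.
query = [1.0] * dim
result, mults = sparse_row_dot(csr, 3, query)
print(f"multiplications for row 3: {mults} (dense would need {dim})")
```

The saving depends on how sparse the matrix really is: the index arrays add overhead, so at high density a sparse layout can end up larger than the dense one.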

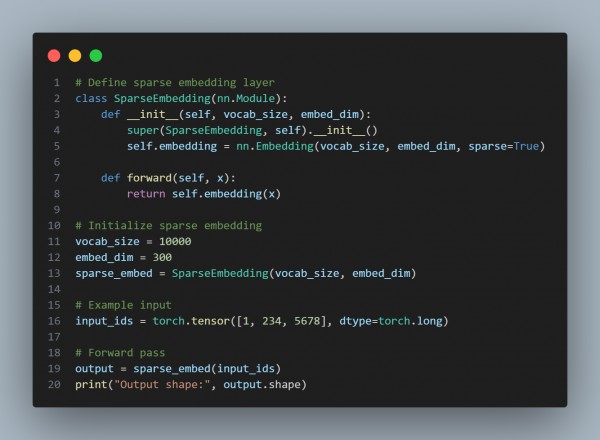

Here is the code you can refer to:

The code above illustrates the following points:

- Memory: Sparse embeddings save memory by storing only the significant weights.

- Efficiency: Sparse operations improve speed, especially for large vocabularies.

- Use Cases: Sparse embeddings are useful in large-scale models like transformers to optimize resource usage.
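
One way to arrive at "only significant weights" is magnitude-based pruning, sketched below. This is an assumption chosen for illustration, not necessarily the approach used in the code above. The function names `prune_row_top_k` and `sparsity` and the toy matrix are hypothetical.

```python
def prune_row_top_k(row, k):
    """Keep the k largest-magnitude weights in a row and zero out the rest."""
    if k >= len(row):
        return list(row)
    # Indices of the k entries with the largest absolute value.
    keep = set(sorted(range(len(row)), key=lambda j: abs(row[j]), reverse=True)[:k])
    return [v if j in keep else 0.0 for j, v in enumerate(row)]


def sparsity(matrix):
    """Fraction of entries in the matrix that are exactly zero."""
    total = sum(len(r) for r in matrix)
    zeros = sum(1 for r in matrix for v in r if v == 0.0)
    return zeros / total


# Toy embedding matrix: 3 tokens, 4 dimensions.
embedding = [
    [0.90, -0.02, 0.01, -0.75],
    [0.03, 0.60, -0.55, 0.04],
    [-0.01, 0.02, 0.80, -0.70],
]

pruned = [prune_row_top_k(row, k=2) for row in embedding]
for row in pruned:
    print(row)
print("sparsity after pruning:", sparsity(pruned))  # 0.5
```

Pruning trades accuracy for sparsity, so the choice of `k` (or of a magnitude threshold) would normally be validated against model quality.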

Hence, these are the impacts of embedding sparsity on memory and efficiency in large generative models.
