The main challenges and solutions for data tokenization in multi-lingual generative models are as follows:

Challenges in Multi-lingual Tokenization:

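- Vocabulary Size: Covering many diverse languages requires a large vocabulary, which enlarges the embedding and output layers and increases memory use and compute.

- Rare Tokens: Languages with fewer training examples are poorly covered by the vocabulary, so they produce many out-of-vocabulary (OOV) tokens.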

- Script Variability: Different scripts (e.g., Latin vs. Cyrillic) require flexible tokenization strategies.

- Consistency: Inconsistent tokenization across languages (for example, similar words split into very different pieces) degrades model performance.
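To make the rare-token problem concrete, here is a minimal sketch that measures the OOV rate of a plain word-level vocabulary. The tiny English and Russian sentences and the `oov_rate` helper are made up for illustration; they are not real training data or part of any library.

```python
# Toy corpus: English is well represented, Russian is not (illustrative data only).
train = {
    "en": "the cat sat on the mat the dog sat on the rug",
    "ru": "кот сидит",
}
test = {
    "en": "the dog sat on the mat",
    "ru": "собака сидит на ковре",
}

# One word-level vocabulary built from all training text.
vocab = {word for text in train.values() for word in text.split()}

def oov_rate(text, vocab):
    """Fraction of whitespace-separated words that are missing from the vocabulary."""
    words = text.split()
    return sum(w not in vocab for w in words) / len(words)

rates = {lang: oov_rate(text, vocab) for lang, text in test.items()}
print(rates)  # {'en': 0.0, 'ru': 0.75}
```

With whole words as tokens, the language with less training text loses most of its test words to OOV, which is the gap that subword methods aim to close.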

Solutions:

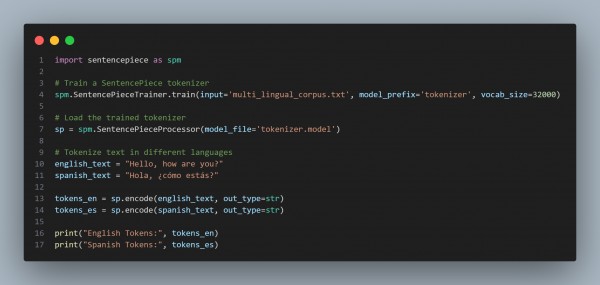

- Subword Tokenization: Use algorithms such as Byte Pair Encoding (BPE) or the unigram language model, both available in the SentencePiece library, to generate subword units shared across languages.

- Shared Vocabulary: Train a common vocabulary to leverage cross-lingual transfer.

- Language Tags: Add language-specific tokens (e.g., <en> for English) to guide the model.

With the code above, subword tokenization splits OOV words into known subword units instead of mapping them to an unknown token, and the shared vocabulary supports cross-lingual understanding.
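As a rough, self-contained illustration of the three solutions, the sketch below learns a toy BPE merge table from a mixed English/Spanish word list (a shared vocabulary), then encodes text with a language tag in front. It is a simplified pure-Python version written for this answer, not the SentencePiece implementation; the function names `merge_pair`, `learn_bpe`, and `encode`, the `</w>` end-of-word marker, and the word list are all assumptions chosen for the example.

```python
from collections import Counter

def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)

def learn_bpe(words, num_merges):
    """Learn up to `num_merges` BPE merge rules from a list of words."""
    # Each word starts as a sequence of characters plus an end-of-word marker.
    corpus = Counter(tuple(w) + ("</w>",) for w in words)
    merges = []
    for _ in range(num_merges):
        # Count how often each adjacent symbol pair occurs in the corpus.
        pairs = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # most frequent pair becomes a new subword
        merges.append(best)
        merged = Counter()
        for symbols, freq in corpus.items():
            merged[merge_pair(symbols, best)] += freq
        corpus = merged
    return merges

def encode(text, merges, lang):
    """Tokenize `text` with the learned merges and prepend a language tag such as <en>."""
    tokens = [f"<{lang}>"]
    for word in text.split():
        symbols = tuple(word) + ("</w>",)
        for pair in merges:  # apply merges in the order they were learned
            symbols = merge_pair(symbols, pair)
        tokens.extend(symbols)
    return tokens

# Shared vocabulary: one merge table learned from both languages together.
shared_corpus = ("nation national international "
                 "nación nacional internacional").split()
merges = learn_bpe(shared_corpus, num_merges=20)

# "nationalization" never appears in the corpus, yet it is still covered by subwords.
en_tokens = encode("nationalization", merges, "en")
es_tokens = encode("internacional nación", merges, "es")
print(en_tokens)
print(es_tokens)
```

Because the merge table falls back to single characters, an unseen word is split into smaller known pieces rather than dropped, and concatenating the pieces reproduces the original text. For real models, a trained SentencePiece or similar tokenizer would replace this toy code.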
