You can build a custom embedding model using SentenceTransformers by defining your own transformer backbone and pooling strategy.

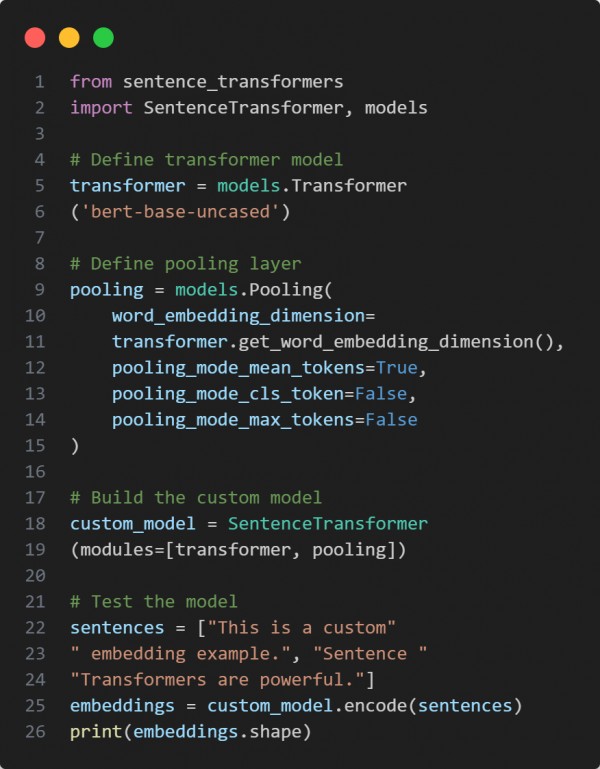

Here is the code snippet below:

The code above relies on the following key components:

- `models.Transformer` to load a base transformer like BERT.
- `models.Pooling` to define how token embeddings are aggregated into a single sentence embedding.
- `SentenceTransformer` to combine the transformer and pooling modules into one model.
- `.encode()` to generate embeddings for input sentences.

Hence, this approach lets you swap the transformer backbone or the pooling strategy to build sentence embedding models tailored to specific tasks or datasets.
