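You can implement a byte-level tokenizer from scratch with SentencePiece by training a model with the --byte_fallback option (byte_fallback=True in Python). With this option, any character that is not in the learned vocabulary is encoded as UTF-8 byte tokens instead of the unknown token, which gives the tokenizer byte-level granularity.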

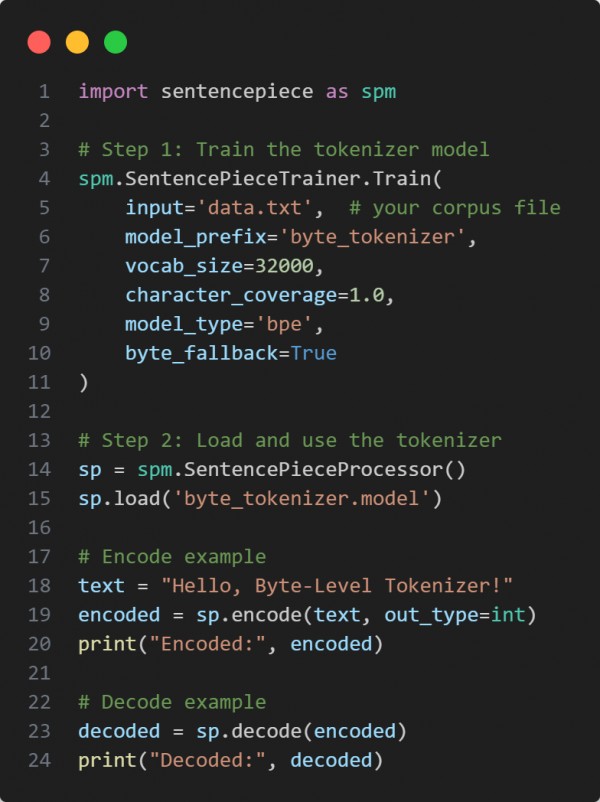

Here is the code snippet:

In the above code, we rely on the following key points:

- SentencePieceTrainer trains the byte-level tokenizer.
- byte_fallback=True makes characters that are missing from the learned vocabulary decompose into UTF-8 byte tokens instead of mapping to the unknown token.
- SentencePieceProcessor loads the trained model and encodes and decodes text for practical use.

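Hence, this method builds a tokenizer that can encode any input: frequent text is covered by learned subword pieces, and everything else, such as rare scripts or emoji, falls back to byte tokens rather than the unknown token. This makes it well suited to LLMs that must handle diverse, multilingual input.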
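To make the fallback behavior concrete without running the trainer, here is a deliberately simplified, standard-library-only sketch. It treats each known character as a vocabulary piece (real SentencePiece models learn multi-character subword pieces) and spells every other character as its UTF-8 bytes, using the same <0xNN> naming that SentencePiece uses for byte pieces. The helper names to_byte_pieces, fallback_encode, and fallback_decode, and the tiny vocabulary, are hypothetical.

```python
from __future__ import annotations


def to_byte_pieces(ch: str) -> list[str]:
    """Spell a string as SentencePiece-style byte pieces, one <0xNN> per UTF-8 byte."""
    return [f"<0x{b:02X}>" for b in ch.encode("utf-8")]


def fallback_encode(text: str, vocab: set[str]) -> list[str]:
    """Keep characters that are in vocab; replace every other character with byte pieces."""
    pieces: list[str] = []
    for ch in text:
        if ch in vocab:
            pieces.append(ch)
        else:
            pieces.extend(to_byte_pieces(ch))
    return pieces


def fallback_decode(pieces: list[str]) -> str:
    """Collect raw bytes from all pieces, then decode the whole buffer as UTF-8."""
    buf = bytearray()
    for p in pieces:
        if len(p) == 6 and p.startswith("<0x") and p.endswith(">"):
            buf.append(int(p[3:5], 16))  # byte piece: two hex digits -> one byte
        else:
            buf.extend(p.encode("utf-8"))  # ordinary piece: its own UTF-8 bytes
    return buf.decode("utf-8")


# Hypothetical vocabulary: lowercase ASCII letters and the space character.
vocab = set("abcdefghijklmnopqrstuvwxyz ")
pieces = fallback_encode("naïve 🙂", vocab)
print(pieces)
# ['n', 'a', '<0xC3>', '<0xAF>', 'v', 'e', ' ', '<0xF0>', '<0x9F>', '<0x99>', '<0x82>']
print(fallback_decode(pieces))  # naïve 🙂
```

Decoding works on the concatenated byte buffer rather than piece by piece, because the bytes of a multi-byte character such as ï or 🙂 only form valid UTF-8 once all of them are joined. Since every string is a sequence of UTF-8 bytes, this path never needs an unknown token.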
