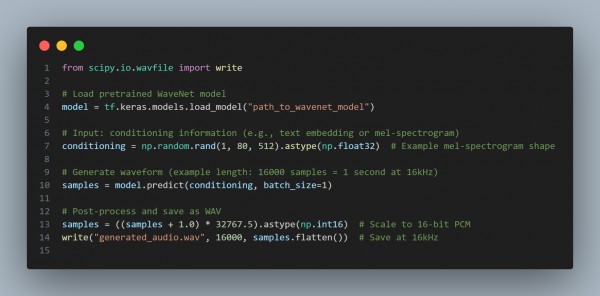

You can refer to the short script below to generate audio waveforms using a pre-trained WaveNet model with TensorFlow and Triton (e.g., NVIDIA's pre-trained model):

In the above code:

- Pretrained WaveNet: loads a compatible model that supports the desired input (mel-spectrogram or text embeddings).
- Input conditioning: provides the necessary input to the model; adjust its shape and format to match your model.
- Post-processing: scales the output waveform to 16-bit PCM so it can be saved as a WAV file.
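The post-processing step can be sketched on its own with Python's standard library. This is a minimal sketch, assuming the model returns floating-point samples in the range [-1, 1]; the helper names `to_pcm16` and `save_wav`, the 16 kHz sample rate, and the sine tone used as a stand-in for model output are all hypothetical choices for illustration.

```python
import math
import struct
import wave

def to_pcm16(samples):
    """Clip float samples to [-1, 1] and scale them to signed 16-bit integers."""
    return [int(round(max(-1.0, min(1.0, s)) * 32767)) for s in samples]

def save_wav(path, samples, sample_rate=16000):
    """Write mono 16-bit PCM samples to a WAV file (sample rate is an assumption)."""
    pcm = to_pcm16(samples)
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)            # mono
        wf.setsampwidth(2)            # 2 bytes = 16 bits per sample
        wf.setframerate(sample_rate)
        # WAV stores PCM little-endian, hence the "<" in the struct format.
        wf.writeframes(struct.pack("<%dh" % len(pcm), *pcm))

# Stand-in for model output: 0.1 s of a 440 Hz sine at half amplitude.
sr = 16000
tone = [0.5 * math.sin(2 * math.pi * 440 * n / sr) for n in range(sr // 10)]
save_wav("output.wav", tone, sr)
```

Clipping before scaling keeps any slightly out-of-range model outputs from overflowing the 16-bit integer range; set `sample_rate` to whatever rate your model was trained on.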

The script therefore generates a simple audio waveform. For specific tasks such as speech synthesis, adjust the conditioning input accordingly.
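To illustrate what conditioning does during generation, here is a framework-free Python sketch of WaveNet-style autoregressive sampling. It assumes, as in the original WaveNet paper, that the model predicts a distribution over 256 μ-law classes, and that conditioning frames (e.g., mel-spectrogram frames) are upsampled to the audio rate by repeating each frame `hop_length` times. `toy_model` is a hypothetical stand-in for a trained network, and each conditioning frame is reduced to a single number for brevity; a real model consumes full feature vectors.

```python
import math
import random

MU = 255  # 256 quantization classes, as in the original WaveNet paper

def mu_law_encode(x: float) -> int:
    """Compress an amplitude in [-1, 1] to a class index in [0, MU]."""
    x = max(-1.0, min(1.0, x))
    y = math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)
    return int(round((y + 1.0) / 2.0 * MU))

def mu_law_decode(index: int) -> float:
    """Expand a class index in [0, MU] back to an amplitude in [-1, 1]."""
    y = 2.0 * index / MU - 1.0
    return math.copysign(((1.0 + MU) ** abs(y) - 1.0) / MU, y)

def upsample_conditioning(frames, hop_length):
    """Repeat each conditioning frame hop_length times (nearest-neighbour
    upsampling) so every output sample has a conditioning value."""
    return [f for f in frames for _ in range(hop_length)]

def toy_model(history, cond):
    """HYPOTHETICAL stand-in for a trained WaveNet. Returns a probability
    distribution over MU + 1 classes peaked at the mu-law class of a blend of
    the previous sample and the conditioning value. A real model would run
    dilated causal convolutions over the whole history instead."""
    prev = history[-1] if history else 0.0
    center = mu_law_encode(0.5 * prev + 0.5 * cond)
    scores = [-((k - center) ** 2) / 50.0 for k in range(MU + 1)]
    peak = max(scores)
    weights = [math.exp(s - peak) for s in scores]  # numerically stable softmax
    total = sum(weights)
    return [w / total for w in weights]

def generate(frames, hop_length, model=toy_model, seed=0):
    """Autoregressive generation: each new sample is drawn from the model's
    distribution given all previously generated samples and its conditioning."""
    rng = random.Random(seed)
    cond = upsample_conditioning(frames, hop_length)
    samples = []
    for c in cond:
        probs = model(samples, c)
        index = rng.choices(range(MU + 1), weights=probs, k=1)[0]
        samples.append(mu_law_decode(index))
    return samples

# Three hypothetical scalar conditioning frames, 4 audio samples per frame.
audio = generate([0.0, 0.5, -0.5], hop_length=4)
print(len(audio))  # 12
```

Because each sample depends on the ones generated before it, the loop cannot be parallelized across time, which is why naive WaveNet inference is slow. Changing the conditioning frames changes where the predicted distribution is centred, which is the lever you adjust for tasks such as speech synthesis.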
