Bias amplification in text generation models can be reduced, though not fully eliminated, through techniques such as balanced data sampling, debiasing algorithms, controlled text generation, and post-hoc filtering.

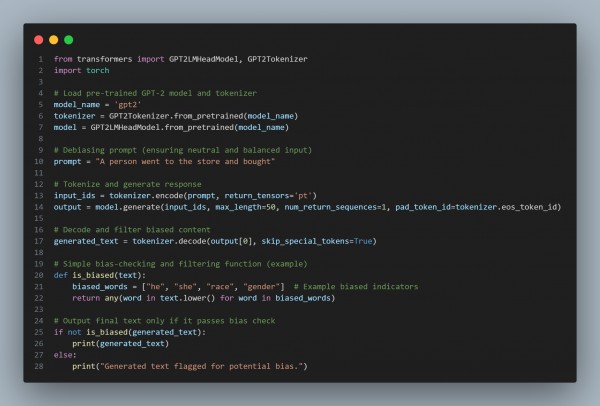

The code snippet for this approach relies on the following key points:

- Uses neutral and balanced prompts to avoid introducing bias from the start.

- Implements a simple post-hoc filtering function to flag potentially biased language.

- Controls output length and skips special tokens for clean, focused text generation.
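The three points above can be sketched in Python as follows. This is a minimal illustration, not the original snippet: the helper names (`balanced_prompts`, `flag_biased_text`, `generate_text`, `generate_and_filter`), the `FLAGGED_PATTERNS` watch list, and the choice of the `gpt2` checkpoint are all assumptions made for the example. It assumes the Hugging Face `transformers` package and PyTorch are installed for the generation step; the filter itself uses only the standard library.

```python
import re

# Hypothetical, deliberately tiny watch list. A real system would use a
# curated lexicon or a trained classifier instead of a few regexes.
FLAGGED_PATTERNS = [
    r"\ball (women|men)\b",
    r"\b(women|men) (are|can't|cannot|should)\b",
    r"\bnaturally (better|worse)\b",
]


def balanced_prompts(template, groups):
    """Fill one neutral template with each group so all groups share the same wording."""
    return [template.format(group=g) for g in groups]


def flag_biased_text(text):
    """Return every pattern in FLAGGED_PATTERNS that matches the text (case-insensitive)."""
    return [p for p in FLAGGED_PATTERNS if re.search(p, text, flags=re.IGNORECASE)]


def generate_text(prompt, model_name="gpt2", max_new_tokens=40):
    """Generate a short continuation. Needs `transformers` and PyTorch installed."""
    # Imported lazily so the filter can be used without the heavy dependency.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name)
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,        # cap the output length
        pad_token_id=tokenizer.eos_token_id,  # GPT-2 defines no pad token
    )
    # skip_special_tokens drops markers such as <|endoftext|> from the decoded text.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


def generate_and_filter(prompts, generate=generate_text):
    """Generate one text per prompt and attach any flagged patterns to it."""
    results = []
    for prompt in prompts:
        text = generate(prompt)
        results.append({"prompt": prompt, "text": text, "flags": flag_biased_text(text)})
    return results
```

A typical call would be `generate_and_filter(balanced_prompts("Describe a typical day for a {group} engineer.", ["female", "male"]))`, after which any result with a non-empty `flags` list can be reviewed or regenerated. Passing the generator in as a parameter keeps the filter testable without downloading a model. A keyword filter like this only catches surface patterns, so it is best treated as a first-pass flag rather than a reliable bias detector.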

Hence, combining balanced input, controlled generation, and post-hoc filtering reduces the risk of bias amplification in text generation models.
