You can implement zero-shot learning for text generation with models like GPT by applying the following strategies:

- Prompt Engineering: Craft specific prompts that guide the model in performing new tasks, leveraging its broad pre-trained knowledge.

- Task Descriptions: Include detailed task descriptions or instructions in the prompt to clarify the desired output format and context.

- Contextual Examples: Optionally, provide a few in-context examples of similar tasks to guide the model's generation style. Strictly speaking, adding examples turns the prompt into a few-shot prompt rather than a zero-shot one.

- Domain Adaptation: For domain-specific tasks, add context-relevant keywords or phrases to the prompt to make the output more accurate.

- Evaluation and Refinement: Continuously test and refine prompts based on output quality.
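The strategies above can be combined into a single prompt string. Below is a minimal Python sketch of that idea; the function `build_zero_shot_prompt` and its parameter names are hypothetical, not part of any library, and the section labels ("Task:", "Output format:") are just one reasonable layout. The result is plain text you could send to any text-generation model, and you can tweak it during evaluation and refinement.

```python
from typing import Optional, Sequence, Tuple


def build_zero_shot_prompt(
    task: str,
    text: str,
    output_format: str,
    domain_terms: Optional[Sequence[str]] = None,
    examples: Optional[Sequence[Tuple[str, str]]] = None,
) -> str:
    """Assemble a prompt from a task description, format, and optional context.

    Hypothetical helper for illustration; not part of any library.
    """
    # Task description and expected output format come first.
    parts = [f"Task: {task}", f"Output format: {output_format}"]
    if domain_terms:
        # Domain adaptation: surface vocabulary the answer should use.
        parts.append("Relevant domain terms: " + ", ".join(domain_terms))
    if examples:
        # Optional demonstrations; including them makes the prompt few-shot.
        for source, target in examples:
            parts.append(f"Example input: {source}\nExample output: {target}")
    # The actual input, followed by a cue for the model to continue.
    parts.append(f"Input: {text}")
    parts.append("Output:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    prompt = build_zero_shot_prompt(
        task="Summarize the customer review in one sentence.",
        text="The laptop boots fast, but the battery barely lasts three hours.",
        output_format="A single plain-text sentence.",
        domain_terms=["battery life", "boot time"],
    )
    print(prompt)
```

Leaving `examples` empty keeps the prompt truly zero-shot; the model has only the instructions and its pre-trained knowledge to go on.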

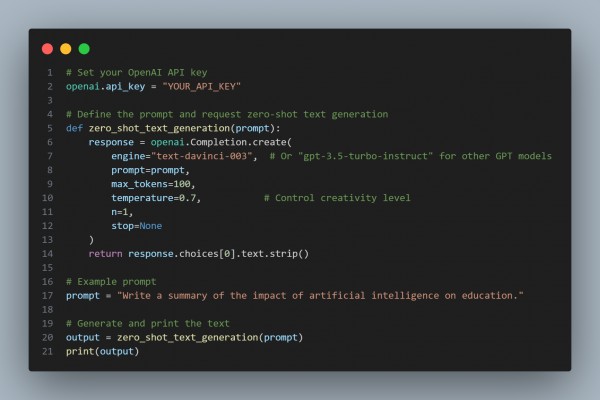

Here is a code snippet that implements zero-shot text generation with an OpenAI GPT model such as GPT-3.5 or GPT-4. It uses the `openai` library and assumes you have an API key. The model generates text from the prompt alone, without task-specific fine-tuning.
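As a hedged sketch of what such a call can look like (not the code from the original snippet), the function below assumes the `openai` Python package v1.x, which reads the `OPENAI_API_KEY` environment variable by default. The function name `zero_shot_generate`, the default model `gpt-3.5-turbo`, and the sampling values are illustrative assumptions; substitute a model your account can access. The optional `client` argument lets you pass a preconfigured or stand-in client, for example in tests.

```python
from typing import Any, Optional


def zero_shot_generate(
    prompt: str,
    model: str = "gpt-3.5-turbo",  # assumption: any chat model you can access
    client: Optional[Any] = None,
    temperature: float = 0.7,
    max_tokens: int = 200,
) -> str:
    """Send one prompt with no examples and no fine-tuning; return the reply text."""
    if client is None:
        # Imported lazily so the function can be exercised with a stand-in client.
        from openai import OpenAI  # requires `pip install openai` (v1.x)

        client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            # Zero-shot: the user message holds only the instructions and input.
            {"role": "user", "content": prompt},
        ],
        temperature=temperature,
        max_tokens=max_tokens,
    )
    return response.choices[0].message.content
```

For example, `zero_shot_generate("Write a two-line product description for a reusable water bottle.")` asks the model for text it was never specifically trained to produce, relying entirely on the instruction in the prompt.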

By applying the strategies above, you can implement zero-shot learning for text generation with models like GPT.
