Multi-modal input data can be handled by mapping different data types (such as text and images) into a common latent space where their representations are aligned, which enables cross-modal generation and synthesis.

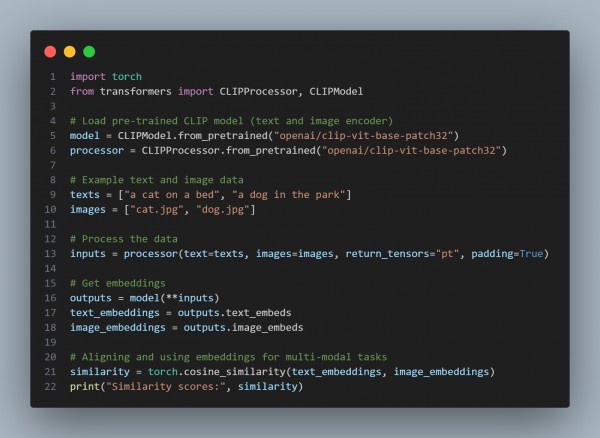

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Uses the CLIP model for multi-modal learning, aligning text and image representations.

- Processes both text and image inputs and converts them into embeddings.

- Measures similarity between text and image embeddings for cross-modal understanding.
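The three points above can be sketched roughly as follows. This is a minimal illustration, not the original snippet: it assumes the Hugging Face `transformers` implementation of CLIP with the `openai/clip-vit-base-patch32` checkpoint, plus `torch` and `Pillow`. The function names `cosine_similarity` and `clip_text_image_similarity` are hypothetical helpers introduced here for illustration.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def clip_text_image_similarity(
    texts: List[str],
    image_path: str,
    model_name: str = "openai/clip-vit-base-patch32",  # assumed checkpoint
) -> List[float]:
    """Embed the texts and one image with CLIP; return one similarity per text."""
    # Imported lazily so cosine_similarity works without these packages installed.
    import torch
    from PIL import Image
    from transformers import CLIPModel, CLIPProcessor

    model = CLIPModel.from_pretrained(model_name)
    processor = CLIPProcessor.from_pretrained(model_name)
    image = Image.open(image_path).convert("RGB")

    # Tokenize the texts and preprocess the image in a single call.
    inputs = processor(text=texts, images=image, return_tensors="pt", padding=True)
    with torch.no_grad():
        outputs = model(**inputs)

    # text_embeds and image_embeds are the projections into the shared latent space.
    image_vec = outputs.image_embeds[0].tolist()
    return [cosine_similarity(t.tolist(), image_vec) for t in outputs.text_embeds]
```

A call such as `clip_text_image_similarity(["a photo of a cat", "a photo of a dog"], "cat.jpg")` (where `cat.jpg` is a placeholder path) would return one score per caption, and the caption that best matches the image should score highest. The similarity is computed by hand here only to make the comparison step explicit; the model output also exposes `logits_per_image` for the same purpose.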

Hence, a shared latent space for text and image embeddings enables effective cross-modal learning and provides a basis for cross-modal synthesis, as the CLIP model demonstrates.
