To reduce computational complexity when training deep learning models on large datasets, use batch normalization, mixed precision training, gradient accumulation, data augmentation, transfer learning, efficient architectures (MobileNet, EfficientNet), and distributed training (TPUs, multi-GPU, Horovod).
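Of the techniques listed, gradient accumulation is probably the least self-explanatory: the gradient for a large batch is built up from several smaller micro-batches, so only one micro-batch has to fit in memory at a time. Here is a minimal framework-free sketch, assuming a toy 1-D linear model with a mean squared error loss; the function names `grad_mse` and `accumulated_grad` and the data are made up for illustration.

```python
def grad_mse(w, xs, ys):
    """Gradient of the mean squared error for the 1-D model y_hat = w * x."""
    n = len(xs)
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def accumulated_grad(w, xs, ys, micro_batch_size):
    """Build the full-batch gradient from micro-batch gradients.

    Each micro-batch gradient is weighted by its sample count so the
    result matches the gradient computed over the whole batch at once.
    """
    total = 0.0
    for start in range(0, len(xs), micro_batch_size):
        xb = xs[start:start + micro_batch_size]
        yb = ys[start:start + micro_batch_size]
        total += grad_mse(w, xb, yb) * len(xb)
    return total / len(xs)


# Toy data (illustrative only): the true relationship is y = 2 * x.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.0 * x for x in xs]

full = grad_mse(0.5, xs, ys)                              # one batch of 8
accum = accumulated_grad(0.5, xs, ys, micro_batch_size=2)  # four batches of 2
```

Both values agree up to floating-point rounding, which is why accumulating over micro-batches can stand in for a large batch when memory is tight. The weight update is applied once, after all micro-batches have been processed.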

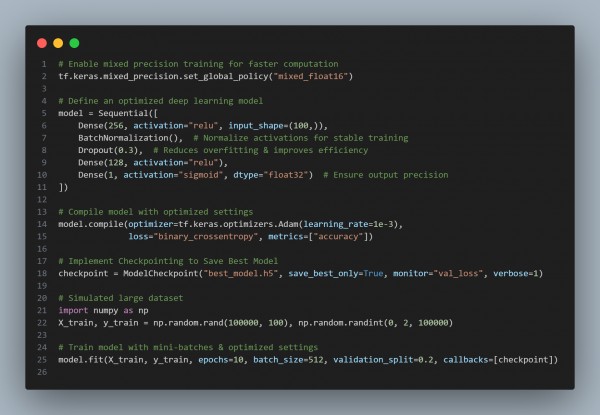

The code snippet shown in the image above uses the following techniques:

- Enable Mixed Precision Training (`mixed_float16`)
  - Uses lower precision (FP16) for faster training and a smaller memory footprint.

- Use Mini-Batch Training (`batch_size=512`)
  - Trains on smaller subsets of the data at a time, optimizing GPU/TPU memory usage.

- Apply Batch Normalization (`BatchNormalization()`)
  - Normalizes activations to accelerate convergence and stabilize gradients.

- Leverage Transfer Learning (MobileNetV2, EfficientNet)
  - Pretrained models reduce training time on large datasets by reusing learned features.

- Use Checkpointing (`ModelCheckpoint`)
  - Saves only the best-performing model, so the best weights can be restored without retraining.
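The techniques listed above could be combined in Keras roughly as follows. This is a minimal sketch, not the original snippet: the helper names `build_model` and `train`, the file name `best_model.keras`, the input shape, the 128-unit dense layer, and the class count are all assumptions chosen for illustration, and inputs are assumed to be already scaled to the [-1, 1] range that MobileNetV2 expects.

```python
import tensorflow as tf
from tensorflow.keras import layers, mixed_precision

# Mixed precision: compute in float16 while keeping variables in float32.
mixed_precision.set_global_policy("mixed_float16")


def build_model(num_classes=10, input_shape=(96, 96, 3), weights="imagenet"):
    """Frozen MobileNetV2 backbone with a small classification head."""
    # Transfer learning: reuse pretrained features and freeze the backbone.
    base = tf.keras.applications.MobileNetV2(
        input_shape=input_shape, include_top=False, weights=weights
    )
    base.trainable = False

    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs, training=False)
    x = layers.GlobalAveragePooling2D()(x)
    x = layers.Dense(128)(x)
    # Batch normalization placed before the nonlinearity.
    x = layers.BatchNormalization()(x)
    x = layers.Activation("relu")(x)
    # Keep the final softmax in float32 for numerical stability.
    outputs = layers.Dense(num_classes, activation="softmax", dtype="float32")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model


# Checkpointing: keep only the model with the lowest validation loss.
checkpoint = tf.keras.callbacks.ModelCheckpoint(
    "best_model.keras", monitor="val_loss", save_best_only=True
)


def train(model, x_train, y_train, x_val, y_val, epochs=5):
    """Mini-batch training with batch_size=512 and best-model checkpointing."""
    return model.fit(
        x_train,
        y_train,
        batch_size=512,
        epochs=epochs,
        validation_data=(x_val, y_val),
        callbacks=[checkpoint],
    )
```

Calling `build_model()` with the default `weights="imagenet"` downloads the pretrained weights on first use; passing `weights=None` skips the download but also gives up the benefit of transfer learning. The `.keras` file format assumes a reasonably recent TensorFlow release.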

Hence, optimizing batch size, using mixed precision, batch normalization, efficient architectures, and distributed training significantly reduces computational complexity while training deep learning models on large datasets.
