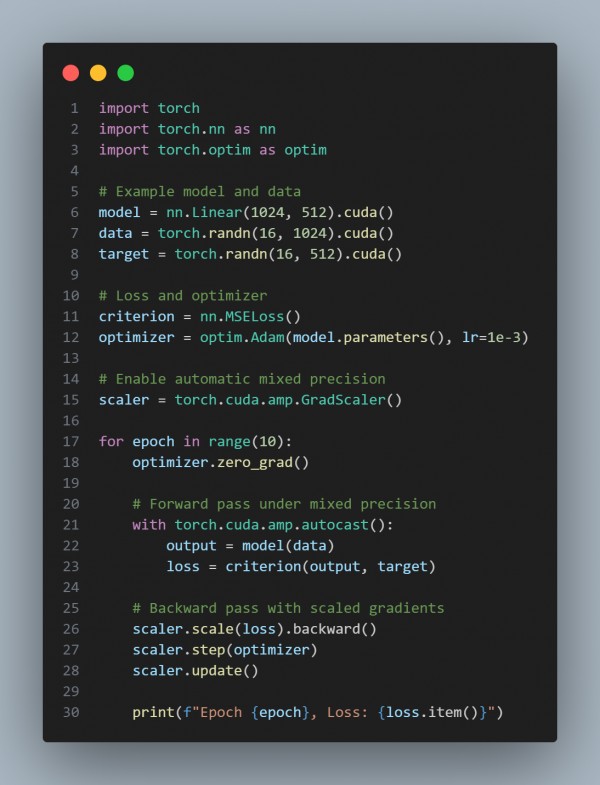

You can use FP16 half-precision training in PyTorch to reduce memory usage for large models. The recommended approach is Automatic Mixed Precision (AMP) through `torch.cuda.amp`. The code snippet in the image shows how to do it.
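As a rough illustration (not the snippet from the image), here is a minimal AMP training-step sketch. It assumes a hypothetical toy `nn.Sequential` model, random data and plain SGD, and it falls back to ordinary FP32 on CPU so that it still runs without a GPU. PyTorch 2.4 and later deprecate `torch.cuda.amp.autocast()` and `torch.cuda.amp.GradScaler()` in favor of `torch.amp.autocast("cuda")` and `torch.amp.GradScaler("cuda")`. The older names used here still work but emit a warning.

```python
import torch
import torch.nn as nn

# Use AMP only on a GPU; on CPU this sketch silently runs in plain FP32.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
use_amp = device.type == "cuda"

# Hypothetical toy model standing in for a real large model.
model = nn.Sequential(nn.Linear(512, 1024), nn.ReLU(), nn.Linear(1024, 10)).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
loss_fn = nn.CrossEntropyLoss()
scaler = torch.cuda.amp.GradScaler(enabled=use_amp)

def train_step(inputs, targets):
    optimizer.zero_grad(set_to_none=True)
    # Forward pass: eligible ops (e.g. the matmuls in nn.Linear) run in FP16.
    with torch.cuda.amp.autocast(enabled=use_amp):
        outputs = model(inputs)
        loss = loss_fn(outputs, targets)
    # Multiply the loss by the scale factor so small FP16 gradients don't underflow.
    scaler.scale(loss).backward()
    # Unscale the gradients; skip the update if they contain inf/NaN.
    scaler.step(optimizer)
    # Grow or shrink the scale factor for the next iteration.
    scaler.update()
    return loss.item()

losses = []
for _ in range(5):
    x = torch.randn(64, 512, device=device)         # random stand-in inputs
    y = torch.randint(0, 10, (64,), device=device)  # random stand-in labels
    losses.append(train_step(x, y))
print(losses)
```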

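In this code, `torch.cuda.amp.autocast()` runs eligible operations (such as matrix multiplications and convolutions) in FP16 for speed, while keeping precision-sensitive operations in FP32. `GradScaler` dynamically scales the loss, and therefore the gradients, to prevent small FP16 gradients from underflowing to zero. FP16 values take 2 bytes instead of the 4 bytes used by FP32, so activations produced under autocast need roughly half the memory. Note that with AMP the model weights themselves stay in FP32.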
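To make the memory figure concrete, the sketch below compares the bytes per element of an FP32 tensor and its FP16 copy. If a GPU is available, it also checks that under autocast a layer's weights stay FP32 while its output is FP16. The tensor and layer sizes are arbitrary.

```python
import torch

x32 = torch.randn(1024, 1024)  # FP32 is PyTorch's default floating-point dtype
x16 = x32.half()               # the same values stored as FP16

def nbytes(t):
    """Total storage used by a tensor's elements, in bytes."""
    return t.numel() * t.element_size()

print(x32.element_size(), x16.element_size())  # 4 2
print(nbytes(x32) // nbytes(x16))              # 2

if torch.cuda.is_available():
    layer = torch.nn.Linear(1024, 1024).cuda()
    with torch.cuda.amp.autocast():
        out = layer(x32.cuda())
    # AMP keeps the parameters in FP32; only the computation runs in FP16.
    print(layer.weight.dtype, out.dtype)  # torch.float32 torch.float16
```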

This approach is well suited to large models. You can combine it with techniques such as gradient checkpointing, which recomputes activations during the backward pass instead of storing them, for further memory savings.
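Gradient checkpointing fits into the AMP loop without changes to the scaler logic. Below is a minimal sketch that assumes a hypothetical `CheckpointedMLP` toy model. Each block is wrapped in `torch.utils.checkpoint.checkpoint`, so its intermediate activations are recomputed during backward rather than kept in memory. `use_reentrant=False` selects the non-reentrant variant, which recent PyTorch versions ask you to pass explicitly.

```python
import torch
import torch.nn as nn
from torch.utils.checkpoint import checkpoint

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
use_amp = device.type == "cuda"

class CheckpointedMLP(nn.Module):
    """Hypothetical toy model: a stack of blocks whose activations are recomputed."""

    def __init__(self, width=512, depth=4, num_classes=10):
        super().__init__()
        self.blocks = nn.ModuleList(
            nn.Sequential(nn.Linear(width, width), nn.ReLU()) for _ in range(depth)
        )
        self.head = nn.Linear(width, num_classes)

    def forward(self, x):
        for block in self.blocks:
            # Don't keep this block's intermediate activations; recompute
            # them in the backward pass instead (less memory, more compute).
            x = checkpoint(block, x, use_reentrant=False)
        return self.head(x)

model = CheckpointedMLP().to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
scaler = torch.cuda.amp.GradScaler(enabled=use_amp)

x = torch.randn(32, 512, device=device)         # random stand-in inputs
y = torch.randint(0, 10, (32,), device=device)  # random stand-in labels

optimizer.zero_grad(set_to_none=True)
with torch.cuda.amp.autocast(enabled=use_amp):
    loss = nn.functional.cross_entropy(model(x), y)
scaler.scale(loss).backward()
scaler.step(optimizer)
scaler.update()

print(loss.item())
```

Checkpointing trades compute for memory. Each checkpointed block runs its forward pass twice, so expect slower steps in exchange for lower peak activation memory.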

In short, AMP with `torch.cuda.amp` lets you use FP16 half-precision training in PyTorch to reduce memory usage for large models.
