The following techniques can reduce the training time of large language models (LLMs) without sacrificing performance:


Gradient Accumulation:

Accumulates gradients over several smaller micro-batches before each optimizer step. This allows training with a large effective batch size without requiring more GPU memory.
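Here is a minimal illustration rather than a framework recipe. It assumes plain Python and a toy one-parameter model y = w·x with a mean-squared-error loss, and all function names are hypothetical. The batch is split into micro-batches, each micro-batch gradient is weighted by its share of the batch, and the results are summed. This reproduces the full-batch gradient while only a couple of examples are processed at a time.

```python
# Minimal sketch of gradient accumulation on a toy one-parameter model y = w * x.
# Assumption: plain Python stands in for a deep learning framework, so the
# gradient of the mean-squared-error loss is computed by hand.


def mse_grad(w, xs, ys):
    """Gradient of mean((w * x - y) ** 2) with respect to w over one batch."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w, xs, ys, micro_batch_size):
    """Accumulate micro-batch gradients, weighting each by its share of the batch."""
    total = len(xs)
    grad = 0.0
    for start in range(0, total, micro_batch_size):
        mx = xs[start:start + micro_batch_size]
        my = ys[start:start + micro_batch_size]
        # Weighting by len(mx) / total makes the sum equal the full-batch mean,
        # even when the last micro-batch is smaller than the others.
        grad += mse_grad(w, mx, my) * len(mx) / total
    return grad


def train_step(w, xs, ys, lr, micro_batch_size):
    """One optimizer step (plain SGD) using the accumulated gradient."""
    return w - lr * accumulated_grad(w, xs, ys, micro_batch_size)


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.0 * x for x in xs]  # data generated with true weight 2.0

# Same gradient whether the batch is processed whole or two examples at a time.
print(mse_grad(0.0, xs, ys), accumulated_grad(0.0, xs, ys, micro_batch_size=2))  # -102.0 -102.0

w = 0.0
for _ in range(50):
    w = train_step(w, xs, ys, lr=0.01, micro_batch_size=2)
print(round(w, 6))  # 2.0
```

In PyTorch the same pattern is usually written differently. You call `loss.backward()` on each micro-batch, and the gradients add up in each parameter's `.grad`. You divide the loss by the number of accumulation steps. You then call `optimizer.step()` and `optimizer.zero_grad()` only once every N micro-batches.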


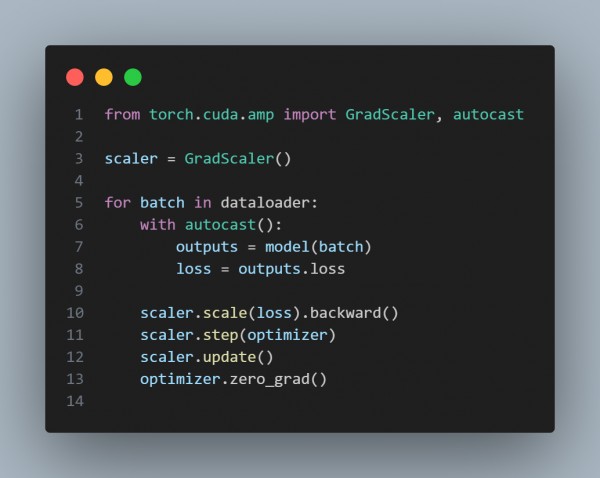

Mixed-Precision Training:

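Performs most computations in 16-bit floating point (FP16 or BF16) while keeping the weights and numerically sensitive operations in FP32. This significantly reduces memory usage and speeds up computation on GPUs with Tensor Cores, with minimal loss in performance.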
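FP16 mixed precision depends on loss scaling. Very small gradients underflow to zero in half precision, so the loss (and therefore every gradient) is multiplied by a large factor before the backward pass and divided back out before the FP32 weight update.

The sketch below assumes plain Python and uses the standard `struct` module's half-precision `"e"` format to emulate FP16 storage. For simplicity it scales a single gradient value directly instead of scaling the loss. The function names are illustrative.

```python
import struct

# Minimal sketch of loss scaling for FP16 mixed precision.
# Assumption: Python's struct "e" format (IEEE 754 half precision) emulates
# how a gradient would be stored in an FP16 tensor.


def to_fp16(x: float) -> float:
    """Round a float to half precision (FP16) and convert it back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def fp16_grad_with_scaling(grad: float, loss_scale: float) -> float:
    """Scale up, store in FP16, then unscale in full precision for the update."""
    # struct raises OverflowError if the scaled value exceeds FP16's max (65504).
    stored = to_fp16(grad * loss_scale)
    return stored / loss_scale  # unscale before the FP32 weight update


# FP16 uses half the bytes of FP32 per value.
print(struct.calcsize("<e"), struct.calcsize("<f"))  # 2 4

tiny_grad = 1e-8
print(to_fp16(tiny_grad))  # 0.0: underflows in FP16 without scaling
print(fp16_grad_with_scaling(tiny_grad, loss_scale=2.0 ** 16))  # close to 1e-8
```

In PyTorch this corresponds to two pieces. The forward pass runs under `torch.autocast`. A gradient scaler (`torch.cuda.amp.GradScaler`, exposed as `torch.amp.GradScaler` in recent releases) handles the scaling and also adjusts the scale dynamically when it detects overflow. BF16 has the same exponent range as FP32, so it usually does not need loss scaling.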


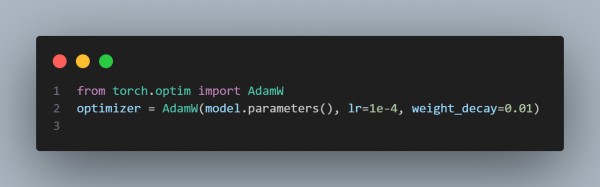

Efficient Optimizers (e.g., AdamW):

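AdamW decouples weight decay from the gradient-based update instead of folding it into the gradient as L2 regularization, which is how weight decay is commonly implemented for Adam. Handling weight decay this way often improves convergence and generalization.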
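The sketch below makes "decoupled weight decay" concrete. It compares one step of Adam with L2 regularization against one step of AdamW for a single scalar weight. It is written in plain Python, and the function names and hyperparameter values are assumptions chosen for illustration.

- **Adam with L2:** the decay term passes through Adam's adaptive normalization.
- **AdamW:** the decay is applied directly to the weight. PyTorch's `torch.optim.AdamW` does this by multiplying each parameter by `1 - lr * weight_decay` before the Adam update.

```python
import math

# Minimal sketch contrasting Adam + L2 regularization with AdamW on one scalar
# weight. Function names and hyperparameters are illustrative assumptions.


def adam_update(grad, m, v, t, beta1=0.9, beta2=0.999, eps=1e-8):
    """Shared Adam moment update; returns (step direction, new m, new v)."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** t)  # bias correction
    v_hat = v / (1 - beta2 ** t)
    return m_hat / (math.sqrt(v_hat) + eps), m, v


def adam_l2_step(w, grad, m, v, t, lr=1e-3, wd=0.01):
    """Adam with L2 regularization: the decay is folded into the gradient."""
    direction, m, v = adam_update(grad + wd * w, m, v, t)
    return w - lr * direction, m, v


def adamw_step(w, grad, m, v, t, lr=1e-3, wd=0.01):
    """AdamW: decay shrinks the weight directly, outside the adaptive update."""
    w = w * (1 - lr * wd)
    direction, m, v = adam_update(grad, m, v, t)
    return w - lr * direction, m, v


# First step from w = 1.0 with a zero loss gradient, so only decay acts.
w_l2, _, _ = adam_l2_step(1.0, 0.0, 0.0, 0.0, t=1)
w_adamw, _, _ = adamw_step(1.0, 0.0, 0.0, 0.0, t=1)
print(round(w_l2, 6), round(w_adamw, 6))  # 0.999 0.99999
```

With a zero loss gradient, the L2 version shrinks the weight by roughly `lr` on the first step, because the normalization cancels out the size of the decay term. AdamW shrinks it by exactly the fraction `lr * wd`.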


Learning Rate Schedulers:

Dynamically adjust the learning rate during training (for example, a short warmup followed by gradual decay) to improve convergence speed and stability.
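A common schedule for language-model training is a linear warmup followed by cosine decay. The function below is a plain-Python sketch of that schedule. The name and the example values (`base_lr=3e-4`, 100 warmup steps, 1,000 total steps) are illustrative assumptions, not recommendations.

```python
import math

# Minimal sketch of a linear-warmup + cosine-decay learning-rate schedule.
# The function name and example values are illustrative assumptions.


def warmup_cosine_lr(step, base_lr, warmup_steps, total_steps, min_lr=0.0):
    """Learning rate for a given optimizer step (0-indexed)."""
    if step < warmup_steps:
        # Ramp linearly from base_lr / warmup_steps up to base_lr.
        return base_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clamped to at most 1.
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    # Cosine curve from base_lr (progress 0) down to min_lr (progress 1).
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


for step in (0, 50, 99, 100, 550, 1000):
    print(step, warmup_cosine_lr(step, base_lr=3e-4, warmup_steps=100, total_steps=1000))
```

To use this with PyTorch, one option is `torch.optim.lr_scheduler.LambdaLR`. Pass `lr_lambda=lambda s: warmup_cosine_lr(s, 1.0, warmup_steps, total_steps)` so the function returns a multiplier of the optimizer's base learning rate.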


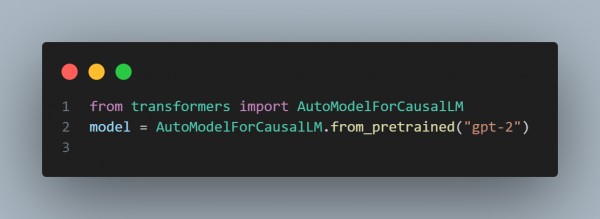

Pretrained Models:

Fine-tune a smaller pre-trained model instead of training from scratch. This reuses what the model learned during pre-training, so far less compute is needed.


Distributed Training:

Use multiple GPUs or nodes to parallelize training. One example is data parallelism, where each GPU processes a different slice of every batch.
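Data parallelism is the most common form of distributed training. It works in three steps:

1. Each worker receives a different shard of the batch.
2. Each worker computes gradients locally.
3. The workers average their gradients with an all-reduce, so every replica applies the same update.

The sketch below simulates this in a single Python process, using a toy linear model and hypothetical helper names. The averaged gradient matches the full-batch gradient when the shards are equal in size.

```python
# Minimal sketch of data-parallel training, simulated in a single process.
# Assumption: each "worker" is just a loop iteration; a real setup runs one
# process per GPU and averages gradients with a collective all-reduce.


def local_grad(w, xs, ys):
    """MSE gradient for the toy model y = w * x on one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def all_reduce_mean(values):
    """Stand-in for an all-reduce that averages a value across workers."""
    return sum(values) / len(values)


def data_parallel_grad(w, xs, ys, world_size):
    """Shard the batch across workers, compute local gradients, then average."""
    if len(xs) % world_size != 0:
        raise ValueError("this sketch assumes equal-sized shards")
    # Rank r takes every world_size-th example, starting at index r.
    local = [local_grad(w, xs[r::world_size], ys[r::world_size])
             for r in range(world_size)]
    return all_reduce_mean(local)


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [3.0 * x for x in xs]
print(local_grad(0.0, xs, ys), data_parallel_grad(0.0, xs, ys, world_size=4))  # -153.0 -153.0
```

In PyTorch, two components handle these steps. `torch.nn.parallel.DistributedDataParallel` performs the gradient all-reduce during `backward()`. `torch.utils.data.distributed.DistributedSampler` hands each rank its own subset of the dataset.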


Gradient Clipping:

Cap the gradient norm (or individual gradient values) at a threshold to prevent exploding gradients and stabilize training.
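Clipping by global norm rescales all gradients together when their combined L2 norm exceeds a threshold, which preserves the update direction. The plain-Python sketch below mirrors the behavior of PyTorch's `torch.nn.utils.clip_grad_norm_`: it adds a small epsilon to the norm and returns the pre-clipping norm. Unlike PyTorch, it operates on a flat list of floats, and the function name is illustrative.

```python
import math

# Minimal sketch of gradient clipping by global L2 norm on a flat list of
# gradient values; the function name is illustrative.


def clip_grad_norm(grads, max_norm, eps=1e-6):
    """Return (possibly rescaled grads, total norm before clipping)."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    scale = max_norm / (total_norm + eps)
    if scale < 1.0:
        # Rescale every gradient by the same factor, so the direction is kept.
        grads = [g * scale for g in grads]
    return grads, total_norm


clipped, norm = clip_grad_norm([3.0, 4.0], max_norm=1.0)
print(norm, clipped)  # norm is 5.0; clipped is approximately [0.6, 0.8]
```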


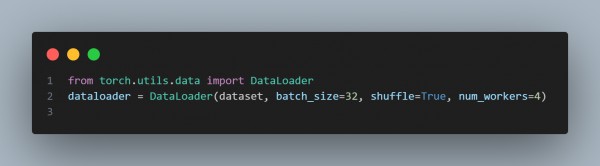

Efficient Data Loading:

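Optimize the data pipeline (for example, using PyTorch's DataLoader with multiple worker processes) so the GPU is not left waiting for data, which increases throughput.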
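An efficient input pipeline overlaps data loading with computation so the accelerator never sits idle. The sketch below shows the idea with a background thread and a bounded queue from the Python standard library. `slow_load` is a hypothetical stand-in for reading and preprocessing a batch, and the sleep inside the loop stands in for a training step.

```python
import queue
import threading
import time

# Minimal sketch of background prefetching with the standard library.
# `slow_load` and the simulated training step are hypothetical stand-ins.

_SENTINEL = object()


def slow_load(i):
    """Hypothetical stand-in for reading and preprocessing batch i."""
    time.sleep(0.01)
    return [i] * 4


def prefetch(load_fn, num_batches, buffer_size=4):
    """Yield batches in order while a background thread loads the next ones."""
    q = queue.Queue(maxsize=buffer_size)  # bounded, so memory use stays capped

    def producer():
        try:
            for i in range(num_batches):
                q.put(load_fn(i))
        finally:
            q.put(_SENTINEL)  # always signal the end, even if loading fails

    threading.Thread(target=producer, daemon=True).start()
    while True:
        batch = q.get()
        if batch is _SENTINEL:
            return
        yield batch


start = time.perf_counter()
for batch in prefetch(slow_load, num_batches=20):
    time.sleep(0.01)  # simulated training step
# Loading overlaps the simulated step, so this is typically well under the
# roughly 0.4 s that a strictly serial load-then-train loop would need.
print(f"elapsed: {time.perf_counter() - start:.2f}s")
```

PyTorch's `DataLoader` applies the same principle with worker processes. Setting `num_workers > 0` loads batches in parallel, with `prefetch_factor` batches buffered per worker. Setting `pin_memory=True` speeds up host-to-GPU copies.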

By combining techniques such as gradient accumulation, mixed-precision training, distributed training, and efficient optimizers, you can significantly reduce the training time of large language models while maintaining, or even improving, their performance. The key is to balance computational efficiency with effective model optimization strategies.
