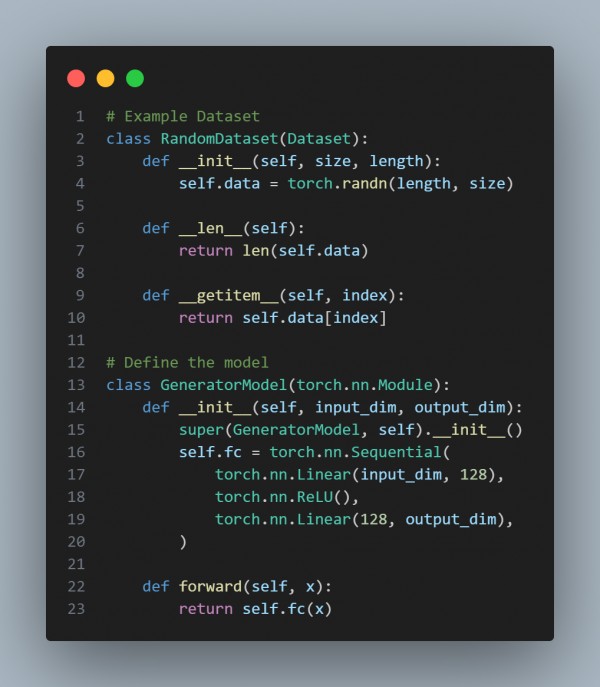

You can implement multi-GPU training in PyTorch for large-scale generative models by referring to the code below:
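Since the original code is only available as an image, here is a minimal sketch of what such a setup might look like. `TinyGenerator`, `build_model`, `train_step`, the layer sizes, and the random data are all hypothetical stand-ins for illustration, not the original author's code. The sketch falls back to a single GPU or the CPU when fewer than two GPUs are visible.

```python
import torch
import torch.nn as nn


class TinyGenerator(nn.Module):
    """Hypothetical stand-in for a large generative model."""

    def __init__(self, latent_dim=16, out_dim=32):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(latent_dim, 64),
            nn.ReLU(),
            nn.Linear(64, out_dim),
        )

    def forward(self, z):
        return self.net(z)


def build_model(latent_dim=16, out_dim=32):
    """Create the model, wrap it in DataParallel if possible, move it to the device."""
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model = TinyGenerator(latent_dim, out_dim)
    # DataParallel replicates the model on each visible GPU and splits
    # every input batch along dimension 0 across the replicas.
    if torch.cuda.device_count() > 1:
        model = nn.DataParallel(model)
    return model.to(device), device


def train_step(model, optimizer, loss_fn, z, target):
    """Run one optimization step and return the scalar loss."""
    optimizer.zero_grad()
    output = model(z)  # outputs are gathered back on the primary device
    loss = loss_fn(output, target)
    loss.backward()
    optimizer.step()
    return loss.item()


if __name__ == "__main__":
    model, device = build_model()
    optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
    loss_fn = nn.MSELoss()

    # Random tensors stand in for a real data loader (assumption).
    z = torch.randn(8, 16, device=device)
    target = torch.randn(8, 32, device=device)
    print("loss:", train_step(model, optimizer, loss_fn, z, target))
```

The input batch only needs to be placed on the primary device; `DataParallel` scatters it to the other GPUs during the forward pass.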

In the above code, we are using the following:

- DataParallel: wrapping the model with torch.nn.DataParallel enables multi-GPU training by replicating the model on each GPU and splitting every input batch among the replicas.
- Device handling: the model and data are moved to CUDA so that the GPUs are actually used.
- Scalability: the approach is suitable for training large-scale generative models with significant compute needs.

Hence, this approach spreads each training batch across the multiple GPUs of a single machine.

