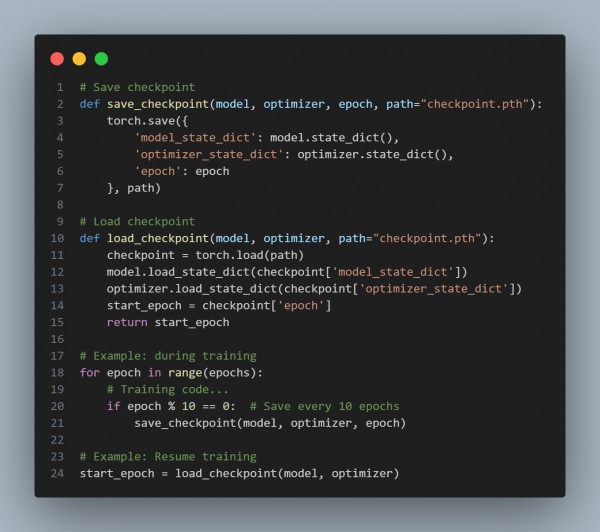

To implement model checkpointing in PyTorch for generative AI models, save the model weights, the optimizer state, and any other data needed to resume (such as the current epoch) at regular points during training, then load them back when you want to continue.

This approach relies on three things: state_dict, which stores the model and optimizer parameters; epoch tracking, which lets you save the current epoch and resume training from the last saved one; and a file naming convention (checkpoint_epoch.pth), which makes the saved checkpoints easier to manage.
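The three pieces described above can be sketched roughly as follows. This is a minimal illustration, not the original answer's code: the helper names `save_checkpoint` and `load_checkpoint`, the dictionary keys, the `checkpoints` directory, and the tiny `nn.Linear` stand-in model are all assumptions, and the file name assumes that "checkpoint_epoch.pth" means the epoch number is substituted into the name.

```python
import os

import torch
import torch.nn as nn


def save_checkpoint(model, optimizer, epoch, checkpoint_dir="checkpoints"):
    """Save model and optimizer state plus the epoch that just finished."""
    os.makedirs(checkpoint_dir, exist_ok=True)
    # Assumed naming scheme: checkpoint_<epoch>.pth
    path = os.path.join(checkpoint_dir, f"checkpoint_{epoch}.pth")
    torch.save(
        {
            "epoch": epoch,
            "model_state_dict": model.state_dict(),
            "optimizer_state_dict": optimizer.state_dict(),
        },
        path,
    )
    return path


def load_checkpoint(path, model, optimizer):
    """Restore model and optimizer in place; return the next epoch to run."""
    checkpoint = torch.load(path, map_location="cpu")
    model.load_state_dict(checkpoint["model_state_dict"])
    optimizer.load_state_dict(checkpoint["optimizer_state_dict"])
    return checkpoint["epoch"] + 1


if __name__ == "__main__":
    # Hypothetical stand-in for a generative model.
    model = nn.Linear(4, 4)
    optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

    last_path = None
    for epoch in range(3):
        batch = torch.randn(8, 4)  # placeholder data
        loss = (model(batch) - batch).pow(2).mean()  # placeholder loss
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        last_path = save_checkpoint(model, optimizer, epoch)

    # Later, or after an interruption: rebuild the objects, then restore.
    start_epoch = load_checkpoint(last_path, model, optimizer)
    print(f"Resuming from epoch {start_epoch}")
```

To resume, construct the model and optimizer exactly as before, call `load_checkpoint`, and start the training loop at the returned epoch. Saving the optimizer state alongside the weights matters for optimizers such as Adam, which keep running statistics that would otherwise restart from zero.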

This lets you pause and resume training, losing at most the work done since the last saved checkpoint.
