For efficient cross-entropy loss calculation with large token vocabularies, you can use the following techniques:

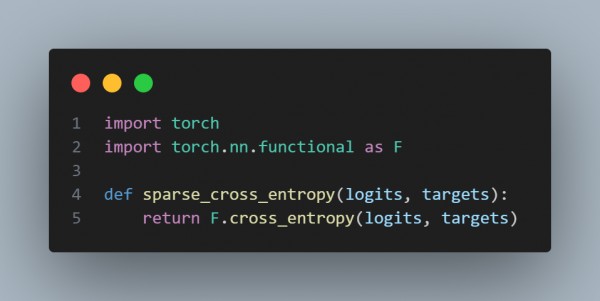

- Sparse Softmax Cross-Entropy: You can avoid computing softmax probabilities for the entire vocabulary by focusing only on the target tokens.

-

Negative Sampling: Instead of calculating probabilities for all tokens, use sampled negatives for approximation (e.g., in Word2Vec).

-

Softmax Approximation: For large vocabularies, techniques like hierarchical softmax or noise contrastive estimation (NCE) can be used.

-

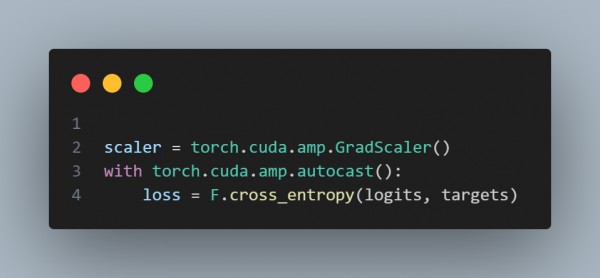

Mixed Precision Training: Use torch.cuda.amp for lower precision (e.g., float16) to speed up operations.

- Logits Masking: Mask irrelevant tokens to reduce unnecessary computations in specific scenarios.
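A minimal NumPy sketch of sparse softmax cross-entropy, assuming logits of shape `(batch, vocab)` and integer target ids. The function name `sparse_softmax_cross_entropy` is hypothetical, and NumPy stands in for whatever framework you actually train with. It shows that only the target logit is gathered per row, while the log-sum-exp normalizer still covers the whole vocabulary.

```python
import numpy as np


def sparse_softmax_cross_entropy(logits: np.ndarray, targets: np.ndarray) -> float:
    """Mean cross-entropy from raw logits and integer target ids.

    logits:  (batch, vocab) unnormalized scores
    targets: (batch,) integer token ids in [0, vocab)
    """
    # Stable log-sum-exp over the vocabulary: the log of the softmax
    # normalizer, which still has to look at every logit.
    row_max = logits.max(axis=1, keepdims=True)
    log_z = row_max[:, 0] + np.log(np.exp(logits - row_max).sum(axis=1))
    # Gather only the target logit of each row; no one-hot matrix is built.
    target_logits = logits[np.arange(logits.shape[0]), targets]
    # -log softmax(logits)[target] = log Z - logit[target]
    return float(np.mean(log_z - target_logits))


logits = np.array([[2.0, 1.0, 0.1], [0.5, 2.5, 0.3]])
targets = np.array([0, 1])
print(sparse_softmax_cross_entropy(logits, targets))
```

The result matches the dense one-hot formulation; the saving is in memory for targets and intermediate probabilities, not in the O(V) normalizer.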
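Negative sampling can be sketched as follows, assuming a single hidden vector, an output embedding matrix, and Word2Vec's noise distribution (unigram counts raised to the 3/4 power). The function names and the token counts are hypothetical, and NumPy is used only for illustration. The loss touches `k` sampled rows of the embedding matrix instead of all `vocab` rows.

```python
import numpy as np


def log_sigmoid(x):
    # log(sigmoid(x)) = -log(1 + exp(-x)), computed stably with logaddexp
    return -np.logaddexp(0.0, -x)


def sample_negatives(rng, counts, k):
    # Word2Vec-style noise distribution: unigram counts raised to the 3/4 power
    p = counts ** 0.75
    p = p / p.sum()
    return rng.choice(len(counts), size=k, p=p)


def negative_sampling_loss(hidden, out_emb, target, negatives):
    """Negative-sampling loss for one example.

    hidden:    (d,) hidden/context vector
    out_emb:   (vocab, d) output embedding matrix
    target:    id of the true token
    negatives: (k,) ids of sampled negative tokens
    """
    pos_score = out_emb[target] @ hidden      # scalar
    neg_scores = out_emb[negatives] @ hidden  # (k,)
    # Raise the true token's score and lower the sampled ones:
    # O(k * d) work instead of O(vocab * d) for a full softmax.
    return float(-log_sigmoid(pos_score) - log_sigmoid(-neg_scores).sum())


rng = np.random.default_rng(0)
counts = np.array([50.0, 20.0, 10.0, 5.0, 1.0])  # hypothetical token frequencies
emb = rng.normal(size=(5, 8))
h = rng.normal(size=8)
negs = sample_negatives(rng, counts, k=3)
print(negative_sampling_loss(h, emb, target=0, negatives=negs))
```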
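To illustrate the hierarchical idea, here is a NumPy sketch of a two-level (class-based) softmax, the simplest form of hierarchical softmax; it is not the binary Huffman tree that Word2Vec uses. It assumes every token is assigned to one cluster and factors P(w) = P(cluster(w)) · P(w | cluster(w)). The function names, weights, and the round-robin cluster assignment are hypothetical. With about √V clusters of √V tokens each, one token costs O(√V · d) instead of O(V · d).

```python
import numpy as np


def log_softmax(x):
    # Stable log-softmax over a 1-D score vector
    m = x.max()
    return x - (m + np.log(np.exp(x - m).sum()))


def two_level_softmax_nll(hidden, cluster_w, word_w, word_to_cluster, cluster_members, target):
    """-log P(target | hidden), with P(w) = P(cluster(w)) * P(w | cluster(w)).

    hidden:          (d,) hidden vector
    cluster_w:       (n_clusters, d) weights that score clusters
    word_w:          (vocab, d) weights that score words inside their cluster
    word_to_cluster: (vocab,) cluster id of every word
    cluster_members: list of index arrays, the word ids in each cluster
    target:          true token id
    """
    c = word_to_cluster[target]
    # Level 1: softmax over clusters only
    log_p_cluster = log_softmax(cluster_w @ hidden)[c]
    # Level 2: softmax over the words of the target's cluster only
    members = cluster_members[c]
    log_p_in_cluster = log_softmax(word_w[members] @ hidden)
    pos = int(np.nonzero(members == target)[0][0])  # target's index inside its cluster
    return float(-(log_p_cluster + log_p_in_cluster[pos]))


# Hypothetical setup: 12 tokens assigned round-robin to 3 clusters
rng = np.random.default_rng(0)
V, C, d = 12, 3, 5
word_to_cluster = np.arange(V) % C
cluster_members = [np.nonzero(word_to_cluster == c)[0] for c in range(C)]
cluster_w, word_w, h = rng.normal(size=(C, d)), rng.normal(size=(V, d)), rng.normal(size=d)
print(two_level_softmax_nll(h, cluster_w, word_w, word_to_cluster, cluster_members, target=7))
```

Because each level is a proper softmax, the probabilities of all V tokens still sum to 1, so this is an exact (if differently parameterized) model rather than a sampling approximation.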
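`torch.cuda.amp` itself needs PyTorch and a GPU, so the NumPy sketch below only illustrates the numeric rule autocast follows for the loss: matrix multiplies may produce float16 logits, but softmax-style reductions run in float32 (PyTorch's autocast op reference lists `softmax` and `log_softmax` among the ops that run in float32). The function name and the all-zero logits are hypothetical. The largest finite float16 value is 65504, so a normalizer summed over a 100k-token vocabulary can overflow if kept in float16.

```python
import numpy as np


def cross_entropy_from_fp16_logits(logits_fp16, targets):
    """Mean cross-entropy where logits arrive in float16 but the
    softmax reduction over the vocabulary is done in float32."""
    x = logits_fp16.astype(np.float32)  # upcast before exp/sum/log
    m = x.max(axis=1, keepdims=True)
    log_z = m[:, 0] + np.log(np.exp(x - m).sum(axis=1))
    return float(np.mean(log_z - x[np.arange(x.shape[0]), targets]))


# Hypothetical all-equal logits over a 100k-token vocabulary: the normalizer
# is 100000, above float16's maximum of 65504, but fine in float32.
logits = np.zeros((2, 100_000), dtype=np.float16)
print(cross_entropy_from_fp16_logits(logits, np.array([0, 5])))
```

In real PyTorch training you would not write this by hand: wrapping the forward pass in autocast and calling the built-in loss applies the float32 rule for you, and the gradient scaler protects small float16 gradients from underflow.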
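Logits masking can be sketched in NumPy under two assumptions: a boolean `allowed` mask of shape `(batch, vocab)` and targets that are always allowed. The first hypothetical function sets disallowed logits to `-inf`; the second, also hypothetical, handles the case where one small allowed set is shared by the batch and computes logits only for those tokens, which is where the computational saving comes from.

```python
import numpy as np


def masked_cross_entropy(logits, targets, allowed):
    """Mean cross-entropy with disallowed tokens removed from the softmax.

    logits:  (batch, vocab) raw scores
    targets: (batch,) token ids, each allowed in its own row
    allowed: (batch, vocab) boolean mask, True = token may be predicted
    """
    # Disallowed tokens get -inf, and exp(-inf) = 0 drops them from the normalizer
    x = np.where(allowed, logits, -np.inf)
    m = x.max(axis=1, keepdims=True)  # finite as long as each row allows at least one token
    log_z = m[:, 0] + np.log(np.exp(x - m).sum(axis=1))
    return float(np.mean(log_z - x[np.arange(x.shape[0]), targets]))


def subset_cross_entropy(hidden, out_w, allowed_ids, targets):
    """Same loss when one allowed set is shared by the whole batch, but the
    logits are computed only for the allowed tokens."""
    sub_logits = hidden @ out_w[allowed_ids].T  # (batch, n_allowed)
    column = {int(t): i for i, t in enumerate(allowed_ids)}
    sub_targets = np.array([column[int(t)] for t in targets])
    m = sub_logits.max(axis=1, keepdims=True)
    log_z = m[:, 0] + np.log(np.exp(sub_logits - m).sum(axis=1))
    return float(np.mean(log_z - sub_logits[np.arange(len(targets)), sub_targets]))


# Hypothetical example: only tokens 1, 4 and 7 of a 10-token vocabulary are allowed
rng = np.random.default_rng(1)
hidden, out_w = rng.normal(size=(2, 4)), rng.normal(size=(10, 4))
allowed_ids, targets = np.array([1, 4, 7]), np.array([4, 7])
mask = np.zeros((2, 10), dtype=bool)
mask[:, allowed_ids] = True
print(masked_cross_entropy(hidden @ out_w.T, targets, mask))
print(subset_cross_entropy(hidden, out_w, allowed_ids, targets))
```

Both functions return the same loss; masking alone changes which tokens compete in the softmax, while the subset version also shrinks the matrix multiply.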

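Of these techniques, sparse softmax cross-entropy and sampling-based approximations such as NCE are the most effective for large token vocabularies: the first is exact and saves memory, while NCE avoids computing the full softmax normalizer during training.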
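A minimal NumPy sketch of the NCE loss for one example, assuming `k` noise tokens drawn from a known noise distribution `q` (e.g., unigram frequencies). Following a common simplification for language models, the model's score is treated as an unnormalized log-probability with the normalizer fixed to 1, so no sum over the vocabulary appears. The function names, weights, and the distribution `q` are hypothetical.

```python
import numpy as np


def log_sigmoid(x):
    # log(sigmoid(x)) computed stably
    return -np.logaddexp(0.0, -x)


def nce_loss(hidden, out_w, out_b, target, noise_ids, noise_probs):
    """Noise-contrastive estimation loss for one example.

    hidden:      (d,) hidden vector
    out_w:       (vocab, d) output weights; out_b: (vocab,) biases
    target:      true token id
    noise_ids:   (k,) token ids sampled from the noise distribution q
    noise_probs: (vocab,) noise distribution q, e.g. unigram frequencies
    """
    k = len(noise_ids)

    def delta(ids):
        # Unnormalized model log-score minus log(k * q(w)); the softmax
        # normalizer is never computed (treated as 1 in this sketch).
        return out_w[ids] @ hidden + out_b[ids] - np.log(k * noise_probs[ids])

    d_true = delta(np.array([target]))
    d_noise = delta(noise_ids)
    # Logistic loss: label the true token as "data", the noise samples as "noise"
    return float(-log_sigmoid(d_true).sum() - log_sigmoid(-d_noise).sum())


# Hypothetical setup: 6-token vocabulary, unigram noise distribution, k = 3
rng = np.random.default_rng(0)
q = np.array([0.4, 0.2, 0.15, 0.1, 0.1, 0.05])
out_w, out_b, h = rng.normal(size=(6, 4)), np.zeros(6), rng.normal(size=4)
noise = rng.choice(6, size=3, p=q)
print(nce_loss(h, out_w, out_b, target=2, noise_ids=noise, noise_probs=q))
```

The per-example cost is O((k + 1) · d), independent of the vocabulary size; evaluating exact probabilities afterwards still requires the full softmax.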

Hence, these techniques let you compute cross-entropy loss efficiently when working with large token vocabularies.
