To build efficient caching mechanisms for frequent model inference requests, you can use the following techniques:

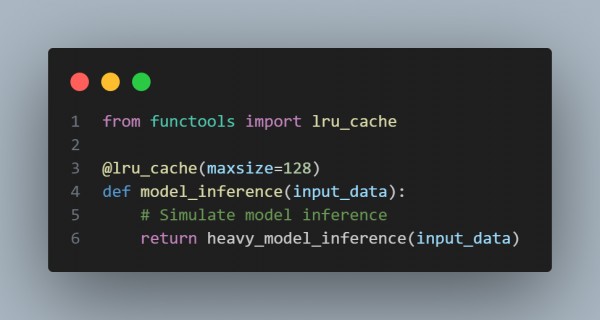

In-Memory Caching (e.g., functools.lru_cache)

- Automatically caches results of function calls.
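As a minimal sketch of in-memory caching, the snippet below wraps a prediction function with `functools.lru_cache`. The `run_model` function and the `CALLS` counter are hypothetical stand-ins for a real model call, used only to show that a repeated input does not trigger a second computation.

```python
from functools import lru_cache

CALLS = {"count": 0}  # counts how often the stand-in model actually runs


def run_model(features: tuple) -> float:
    """Hypothetical stand-in for an expensive model inference call."""
    CALLS["count"] += 1
    return sum(features) / len(features)


@lru_cache(maxsize=1024)
def cached_predict(features: tuple) -> float:
    # lru_cache requires hashable arguments, so pass a tuple, not a list.
    return run_model(features)


cached_predict((1.0, 2.0, 3.0))  # computed by run_model
cached_predict((1.0, 2.0, 3.0))  # served from the cache
print(cached_predict.cache_info())
```

Note that `lru_cache` lives inside a single process, so each worker keeps its own cache and the entries are lost on restart.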

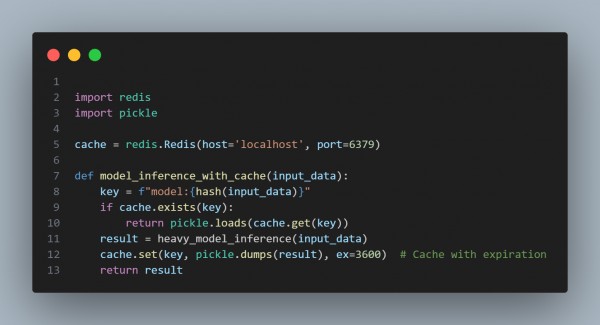

Redis Caching

- Leverages an external cache for scalability.
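One possible shape for the Redis approach is sketched below. It assumes a client object that exposes redis-py style `get(name)` and `set(name, value, ex=seconds)` methods; the function name `redis_cached_predict`, the `pred:` key prefix, and the one-hour expiry are illustrative choices, not part of the original answer.

```python
import json


def redis_cached_predict(client, model_fn, features, ttl_seconds=3600):
    """Return a cached prediction if present, otherwise compute and store it.

    `client` is assumed to behave like a redis-py client (get / set with ex).
    `model_fn` is any callable that maps JSON-serializable features to a
    JSON-serializable result.
    """
    # Build a deterministic key from the input features.
    key = "pred:" + json.dumps(features, sort_keys=True)

    cached = client.get(key)
    if cached is not None:
        # Redis returns bytes; json.loads accepts bytes as well as str.
        return json.loads(cached)

    result = model_fn(features)
    # Store with an expiry so stale predictions eventually drop out.
    client.set(key, json.dumps(result), ex=ttl_seconds)
    return result


# Usage, assuming the `redis` package is installed and a server runs locally:
# import redis
# client = redis.Redis(host="localhost", port=6379, db=0)
# redis_cached_predict(client, my_model_fn, [1.0, 2.0, 3.0])
```

Because the cache sits outside the application process, several inference workers can share the same entries.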

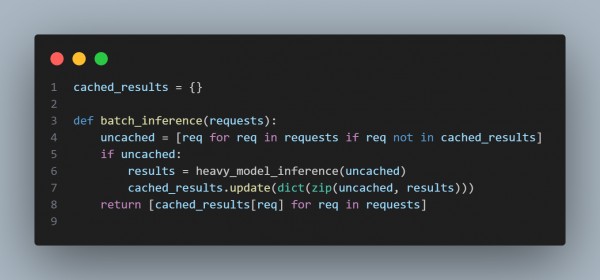

Batch Inference with Caching

- Groups requests to reduce redundant computations.
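The idea can be sketched as follows: deduplicate the incoming requests, send only the uncached ones to the model in a single batch, then answer every request from the cache. The names `batch_predict_with_cache` and `batch_model_fn` are hypothetical, and a plain dictionary stands in for whatever cache is actually used.

```python
from typing import Callable, Dict, Hashable, List, Sequence


def batch_predict_with_cache(
    inputs: Sequence[Hashable],
    batch_model_fn: Callable[[List[Hashable]], List[float]],
    cache: Dict[Hashable, float],
) -> List[float]:
    """Answer all inputs, running the model once on unique uncached inputs."""
    # dict.fromkeys removes duplicates while preserving first-seen order.
    missing = [x for x in dict.fromkeys(inputs) if x not in cache]

    if missing:
        # One batched call instead of one call per request.
        outputs = batch_model_fn(missing)
        cache.update(zip(missing, outputs))

    # Every input is now in the cache; return results in request order.
    return [cache[x] for x in inputs]


def double_all(batch):
    """Hypothetical batched model: doubles each input."""
    return [2 * x for x in batch]


cache = {}
print(batch_predict_with_cache([1, 2, 2, 3], double_all, cache))
```

A second call with overlapping inputs only sends the inputs that are still missing from the cache to the model.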

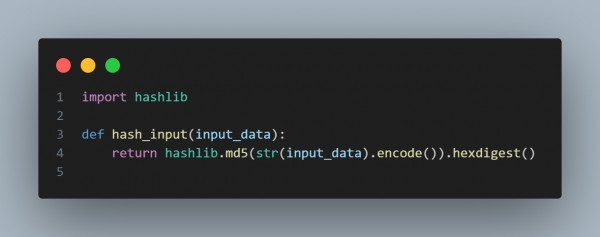

Hashing Inputs

- Cache results using hashable representations of inputs to avoid recomputation.
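Inputs such as dictionaries or lists are not hashable, so they cannot be used directly as cache keys. A minimal sketch, assuming the input is JSON-serializable, is to serialize it canonically and hash the result; `make_cache_key` and the `pred` prefix are illustrative names.

```python
import hashlib
import json


def make_cache_key(payload: dict, prefix: str = "pred") -> str:
    """Build a deterministic cache key from a JSON-serializable payload."""
    # sort_keys makes the key independent of dictionary insertion order.
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return f"{prefix}:{digest}"


key_a = make_cache_key({"text": "hello", "max_tokens": 16})
key_b = make_cache_key({"max_tokens": 16, "text": "hello"})
print(key_a == key_b)  # same content, different key order
```

The resulting string works as a key for an in-process dictionary or for an external store such as Redis.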

The code above uses in-memory caching for local use, Redis for distributed setups, and batching to minimize repeated calls.

Together, these techniques let you serve repeated inference requests from a cache instead of re-running the model, which speeds up frequent requests.

Related Post: How to use cache or pre-compute frequently generated responses to reduce model load
