You can optimize an LLM for mobile inference by pruning redundant attention heads to reduce computational complexity while retaining core model performance.
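The Python sketch below shows where the savings come from. It assumes standard multi-head attention, in which each head owns a `head_dim`-wide slice of the Q, K, V and output projections, and it ignores biases. The function names and the 768-dimensional, 12-head configuration (BERT-base sizes) are illustrative assumptions and do not come from the original.

```python
def attention_proj_params(d_model: int, head_dim: int, num_heads: int) -> int:
    """Weight count (biases ignored) of the Q, K, V and output
    projections in one multi-head attention layer."""
    inner = num_heads * head_dim        # width of the concatenated heads
    qkv = 3 * d_model * inner           # Q, K, V: d_model -> inner
    out = inner * d_model               # output: inner -> d_model
    return qkv + out


def attention_score_macs(seq_len: int, head_dim: int, num_heads: int) -> int:
    """Multiply-accumulates for Q @ K^T plus softmax(scores) @ V
    over one sequence, summed across heads."""
    per_head = 2 * seq_len * seq_len * head_dim
    return num_heads * per_head


if __name__ == "__main__":
    d_model, head_dim, seq_len = 768, 64, 128  # illustrative sizes
    full = attention_proj_params(d_model, head_dim, 12)
    pruned = attention_proj_params(d_model, head_dim, 8)
    print(full, pruned)  # 2359296 1572864
    ratio = attention_score_macs(seq_len, head_dim, 8) / attention_score_macs(seq_len, head_dim, 12)
    print(ratio)
```

Under these assumptions, both the projection weights and the attention-score work scale linearly with the number of heads, so keeping 8 of 12 heads cuts them by a third. Feed-forward layers are not affected by head pruning, so the whole-model saving is smaller than the per-attention-layer figure.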

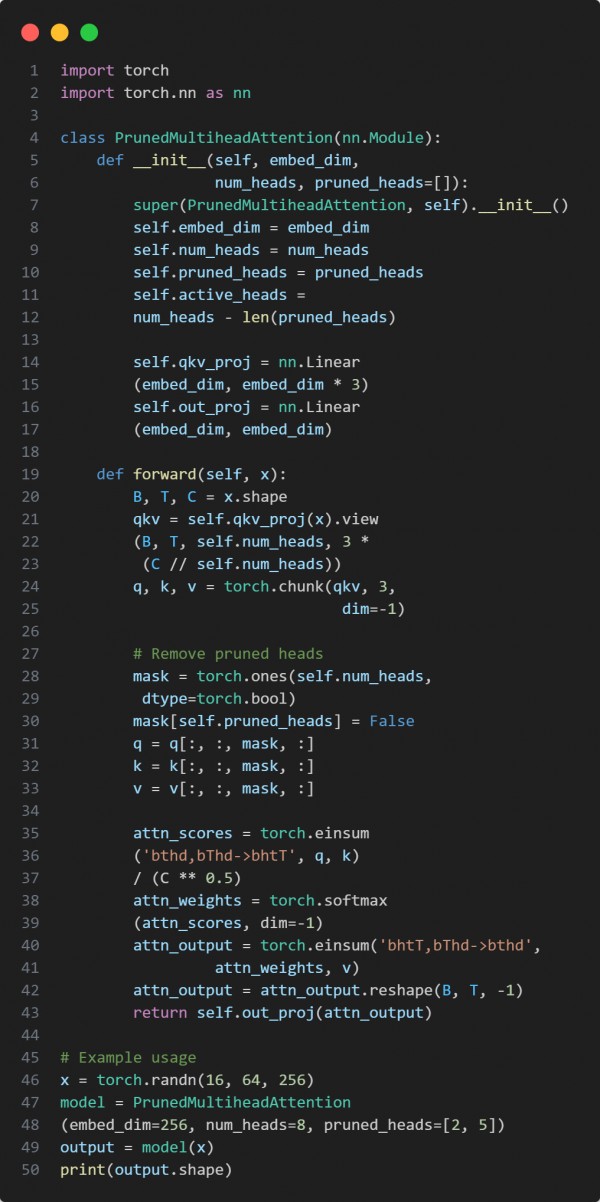

Here is the code snippet:

The key points of the above code are:

- It selectively removes the specified attention heads at runtime.
- It adjusts the QKV tensors dynamically based on the active heads.
- It keeps the full attention logic for the remaining heads.
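The following is not the snippet referred to above. It is a minimal, dependency-free Python sketch of the same three ideas. It assumes standard multi-head self-attention in which equal-sized heads are laid out contiguously in the projection matrices. The names `pruned_attention` and `keep_columns` and this weight layout are hypothetical. A real model would apply the same slicing to framework tensors instead of Python lists.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b on lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: Sequence[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def keep_columns(w: Matrix, cols: Sequence[int]) -> Matrix:
    return [[row[c] for c in cols] for row in w]


def pruned_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix,
                     w_o: Matrix, num_heads: int,
                     active_heads: Sequence[int]) -> Matrix:
    """Multi-head self-attention that computes only `active_heads`.

    x: seq_len x d_model; w_q, w_k, w_v: d_model x (num_heads * head_dim);
    w_o: (num_heads * head_dim) x d_model. Heads are assumed contiguous.
    """
    head_dim = len(w_q[0]) // num_heads
    # Q/K/V columns (and W_o rows) owned by the heads we keep.
    kept = [h * head_dim + i for h in active_heads for i in range(head_dim)]
    if not kept:  # every head pruned: the layer contributes nothing
        return [[0.0] * len(w_o[0]) for _ in x]
    # Slice the projections so pruned heads are never computed.
    q = matmul(x, keep_columns(w_q, kept))
    k = matmul(x, keep_columns(w_k, kept))
    v = matmul(x, keep_columns(w_v, kept))
    scale = 1.0 / math.sqrt(head_dim)
    concat: Matrix = [[] for _ in x]
    # Ordinary scaled dot-product attention for each remaining head.
    for j in range(len(active_heads)):
        lo, hi = j * head_dim, (j + 1) * head_dim
        q_h = [row[lo:hi] for row in q]
        k_h = [row[lo:hi] for row in k]
        v_h = [row[lo:hi] for row in v]
        scores = [[scale * sum(a * b for a, b in zip(qi, kj)) for kj in k_h] for qi in q_h]
        out_h = matmul([softmax(r) for r in scores], v_h)
        for t, r in enumerate(out_h):
            concat[t].extend(r)
    # Only the W_o rows that belong to active heads are needed.
    return matmul(concat, [w_o[r] for r in kept])


if __name__ == "__main__":
    import random
    random.seed(0)

    def rand(r: int, c: int) -> Matrix:
        return [[random.uniform(-1, 1) for _ in range(c)] for _ in range(r)]

    d_model, num_heads, head_dim, seq_len = 4, 2, 2, 3
    x = rand(seq_len, d_model)
    w_q, w_k, w_v = (rand(d_model, num_heads * head_dim) for _ in range(3))
    w_o = rand(num_heads * head_dim, d_model)
    y = pruned_attention(x, w_q, w_k, w_v, w_o, num_heads, active_heads=[0])
    print(len(y), len(y[0]))  # 3 4
```

Skipping a head produces the same output as zeroing that head's rows of `W_o` in the full layer. The difference is that the pruned head's Q/K/V projections and attention scores are never computed, and that is where the savings come from.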

Hence, pruning redundant attention heads can significantly reduce the cost of running an LLM on mobile and edge devices without a major loss in performance.
