You can convert a PyTorch-based LLM to ONNX and optimize it for deployment using torch.onnx.export followed by onnxruntime optimization tools.

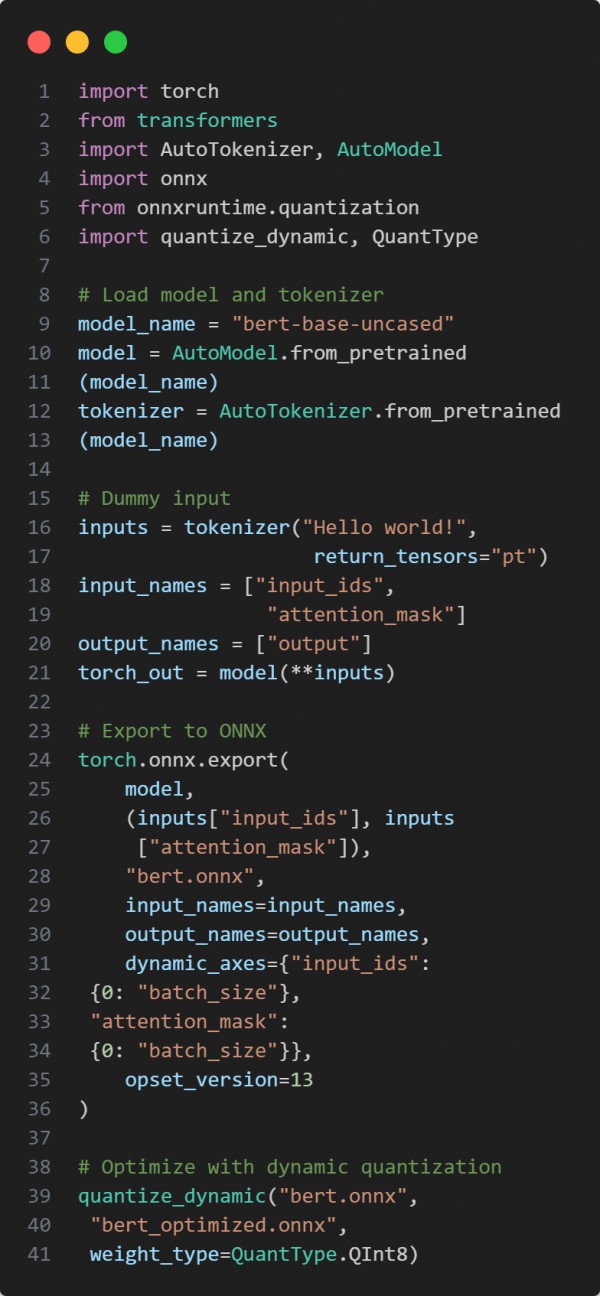

Here is the code snippet below:

In the above code, we use the following key techniques:

- torch.onnx.export to export a PyTorch model to the ONNX format.
- Dynamic axes to support flexible batch sizes at deployment time.
- ONNX Runtime quantization to improve inference performance and reduce model size.

Hence, this conversion pipeline prepares a PyTorch model for fast and scalable production inference using ONNX.
