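You can use PEFT (Parameter-Efficient Fine-Tuning) techniques instead of full fine-tuning when you need to adapt a large model with limited compute and storage. Rather than updating every weight, they train only a small set of added or selected parameters.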
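To make the savings concrete, consider LoRA, the PEFT method that `LoraConfig` configures. For a frozen weight matrix of shape d × k, LoRA trains two low-rank factors, B (d × r) and A (r × k), instead of the full matrix, so the number of trainable parameters drops from d·k to r·(d + k). The minimal sketch below computes both counts. The function names and the sizes d = k = 4096 and r = 8 are illustrative assumptions, not values taken from any particular model.

```python
# Trainable-parameter arithmetic for LoRA on a single d x k weight matrix.
# Assumption: the adapter is a rank-r pair B (d x r) and A (r x k), as in LoRA.
# The function names here are hypothetical helpers for illustration only.


def full_finetune_params(d: int, k: int) -> int:
    """Parameters updated when the whole d x k weight matrix is trained."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Parameters in a rank-r LoRA adapter: B is d x r and A is r x k."""
    return d * r + r * k


if __name__ == "__main__":
    d = k = 4096  # illustrative hidden size, not tied to a specific model
    r = 8         # illustrative LoRA rank
    full = full_finetune_params(d, k)
    lora = lora_params(d, k, r)
    print(f"full fine-tuning: {full:,} parameters")
    print(f"LoRA (r={r}):     {lora:,} parameters")
    print(f"fraction trained: {lora / full:.4%}")
```

With these sizes the adapter has 65,536 trainable parameters instead of 16,777,216, about 0.39% of the matrix. A real model repeats this for every layer that receives an adapter, so the overall totals depend on the model and on which modules are targeted.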

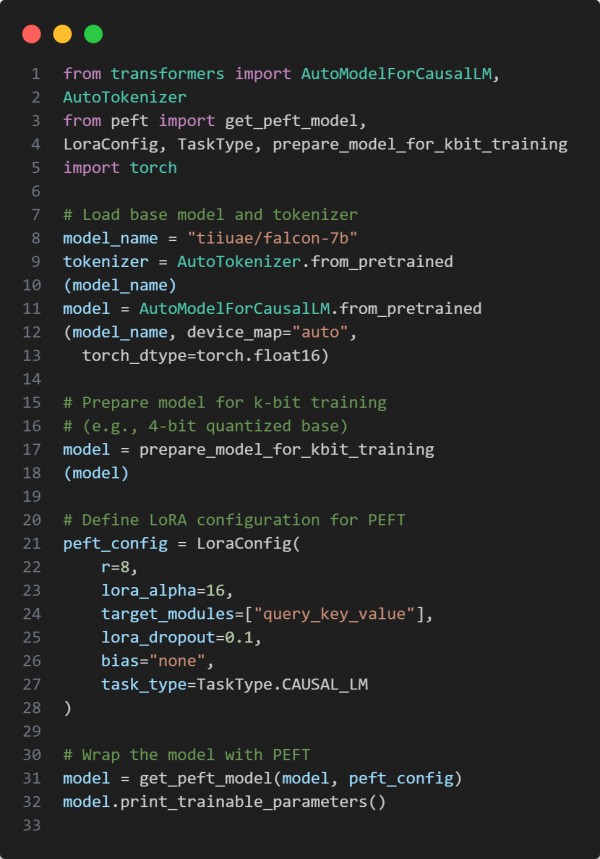

Here is the code snippet below:

The code above relies on the following PEFT components:

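- `prepare_model_for_kbit_training` prepares a model that was loaded in 8-bit or 4-bit precision for training (for example, by freezing the base weights and enabling gradient checkpointing), which keeps memory use low.
- `LoraConfig` defines the LoRA hyperparameters, such as the rank `r`, the scaling factor `lora_alpha`, `lora_dropout`, and the `target_modules` that receive adapters.
- `get_peft_model` wraps the base model with trainable PEFT adapters, so only the adapter weights are updated while the original weights stay frozen.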
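To see what a LoRA adapter adds to a wrapped layer, here is a toy, pure-Python sketch of a LoRA linear layer. It is not the `peft` library's implementation: the class name `LoRALinear`, the helper `matvec`, the list-based matrices, and the standard deviation of 0.01 for A are all illustrative assumptions. It shows the two ideas behind the `r` and `lora_alpha` settings: the frozen weight W is combined with a low-rank update B A scaled by `lora_alpha / r`, and B starts at zero (as in the original LoRA paper) so the wrapped layer initially behaves exactly like the base layer.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


class LoRALinear:
    """Hypothetical toy LoRA layer: y = W x + (alpha / r) * B (A x).

    W (d_out x d_in) is the frozen base weight. Only A (r x d_in) and
    B (d_out x r) would be trained; no training loop is shown here.
    """

    def __init__(self, weight: Matrix, r: int, alpha: float, seed: int = 0) -> None:
        self.weight = weight  # frozen: this class never modifies it
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        # A gets small random values (std 0.01 is an arbitrary choice here).
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        # B starts at zero, so the adapter's initial contribution is zero.
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scaling = alpha / r

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.weight, x)              # frozen path
        delta = matvec(self.B, matvec(self.A, x))  # low-rank adapter path
        return [b + self.scaling * d for b, d in zip(base, delta)]

    def merged_weight(self) -> Matrix:
        """Return W + (alpha / r) * B A as a new matrix; W is left unchanged."""
        rank = len(self.A)
        return [
            [
                self.weight[i][j]
                + self.scaling * sum(self.B[i][k] * self.A[k][j] for k in range(rank))
                for j in range(len(self.weight[0]))
            ]
            for i in range(len(self.weight))
        ]


if __name__ == "__main__":
    W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]  # 2 x 3 toy frozen weight
    layer = LoRALinear(W, r=2, alpha=4.0)
    print(layer.forward([1.0, 2.0, 3.0]))  # [7.0, -1.0], same as W x while B is zero
```

Because the adapter's update is a plain matrix sum, `merged_weight` can fold it back into W after training so inference costs no more than the base layer. Keeping the adapter separate instead lets one frozen base model share several small task-specific adapters.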

Hence, PEFT is well suited to customizing large models under resource constraints, because it avoids retraining all of the model's parameters.
