You can transfer knowledge from a monolingual model to a multilingual LLM by distilling task-specific representations from the source model into the multilingual target using aligned datasets.

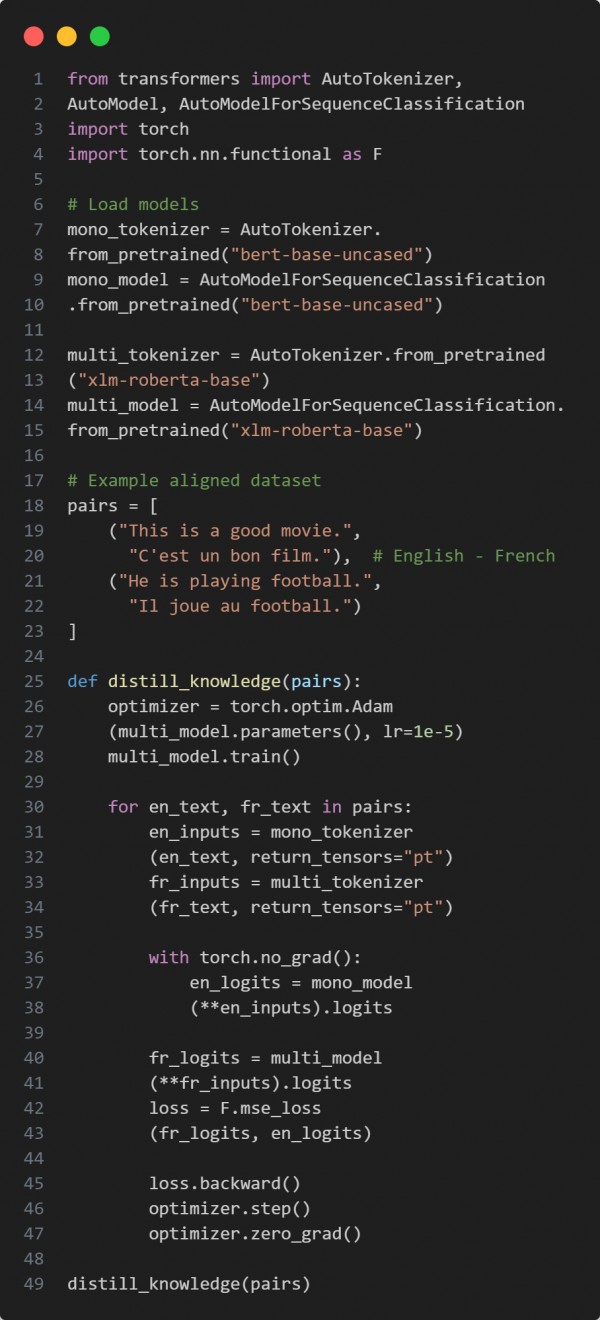

Here is the code snippet below:

The code above relies on the following key points:

- Parallel (aligned) sentence pairs carry the knowledge across languages.
- The monolingual model acts as the teacher and the multilingual model as the student.
- An MSE loss aligns the student's logits with the teacher's logits across languages.
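As a rough illustration of those three points, here is a minimal, dependency-free Python sketch. It is not the code shown in the image above. The toy English-German corpus, the bag-of-words linear "models", the hand-set teacher weights, the two-class sentiment task, and names such as `make_teacher`, `make_student`, and `distill` are all hypothetical stand-ins for real pretrained models. The teacher only ever reads the English side of each pair. The student is trained so that its logits for both the English sentence and its German translation match the teacher's English logits, i.e. for each pair it minimises MSE(student(src), teacher(src)) + MSE(student(tgt), teacher(src)).

```python
# Toy teacher-student distillation over parallel sentence pairs.
# Everything here is hypothetical: tiny bag-of-words linear models stand in
# for a real monolingual teacher and a multilingual student.

# Hypothetical aligned corpus: (English source, German translation).
PARALLEL_PAIRS = [
    ("the movie was great", "der film war toll"),
    ("the movie was bad", "der film war schlecht"),
    ("i love this book", "ich liebe dieses buch"),
    ("i hate this book", "ich hasse dieses buch"),
]
NUM_CLASSES = 2  # logit 0 = positive, logit 1 = negative (assumed task)


def bag_of_words(sentence, vocab):
    """Count vector over `vocab`; words outside the vocabulary are ignored."""
    vec = [0.0] * len(vocab)
    for tok in sentence.split():
        if tok in vocab:
            vec[vocab[tok]] += 1.0
    return vec


class LinearModel:
    """Computes logits = W x + b, where W has one row per class."""

    def __init__(self, vocab, weights=None):
        self.vocab = vocab
        self.W = weights if weights is not None else [[0.0] * len(vocab) for _ in range(NUM_CLASSES)]
        self.b = [0.0] * NUM_CLASSES

    def logits(self, sentence):
        x = bag_of_words(sentence, self.vocab)
        return [sum(w * xi for w, xi in zip(row, x)) + bias for row, bias in zip(self.W, self.b)]


def make_teacher():
    """Frozen 'monolingual' teacher: English-only vocab, hand-set sentiment weights."""
    words = sorted({t for src, _ in PARALLEL_PAIRS for t in src.split()})
    polarity = {"great": 1.0, "love": 1.0, "bad": -1.0, "hate": -1.0}
    weights = [[2.0 * polarity.get(w, 0.0) for w in words],
               [-2.0 * polarity.get(w, 0.0) for w in words]]
    return LinearModel({w: i for i, w in enumerate(words)}, weights)


def make_student():
    """'Multilingual' student: shared vocab over both languages, zero-initialised."""
    words = sorted({t for pair in PARALLEL_PAIRS for s in pair for t in s.split()})
    return LinearModel({w: i for i, w in enumerate(words)})


def mse(a, b):
    """Mean squared error between two logit vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / len(a)


def distill(teacher, student, epochs=300, lr=0.05):
    """SGD on MSE(student(x), teacher(src)) for x in {src, tgt}; updates student in place."""
    for _ in range(epochs):
        for src, tgt in PARALLEL_PAIRS:
            target = teacher.logits(src)      # the teacher only ever reads the source language
            for sentence in (src, tgt):       # the student must reproduce it in both languages
                x = bag_of_words(sentence, student.vocab)
                pred = student.logits(sentence)
                for k in range(NUM_CLASSES):
                    grad = 2.0 * (pred[k] - target[k]) / NUM_CLASSES  # dMSE/dlogit_k
                    student.b[k] -= lr * grad
                    for i, xi in enumerate(x):
                        student.W[k][i] -= lr * grad * xi
    return student


if __name__ == "__main__":
    teacher = make_teacher()
    student = distill(teacher, make_student())
    for src, tgt in PARALLEL_PAIRS:
        loss = mse(student.logits(tgt), teacher.logits(src))
        print(f"{tgt!r}: MSE vs. teacher({src!r}) = {loss:.6f}")
```

In a real setup the two linear models would presumably be replaced by pretrained networks (for example a monolingual encoder as the teacher and a multilingual encoder as the student), and the hand-written gradient step by an autodiff framework's optimizer. The structure of the loss would stay the same: a frozen teacher produces targets from the source sentence, and the student is pulled toward them on both sides of each aligned pair.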

In short, distillation transfers knowledge from a monolingual model to a multilingual one by training on examples that carry the same meaning in each language.
