You can deploy a foundation model like GPT or BERT on AWS Lambda with ONNX optimization by converting the model to ONNX, packaging it, and using a Lambda function for inference.

Here are the steps you can follow:

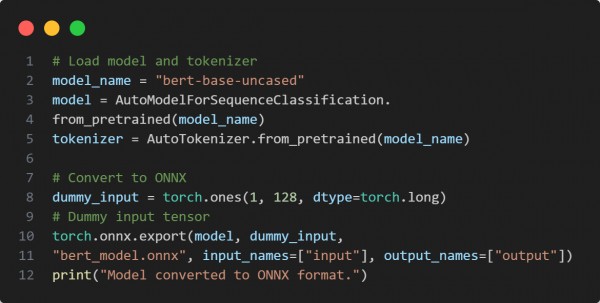

1. Convert the Model to ONNX

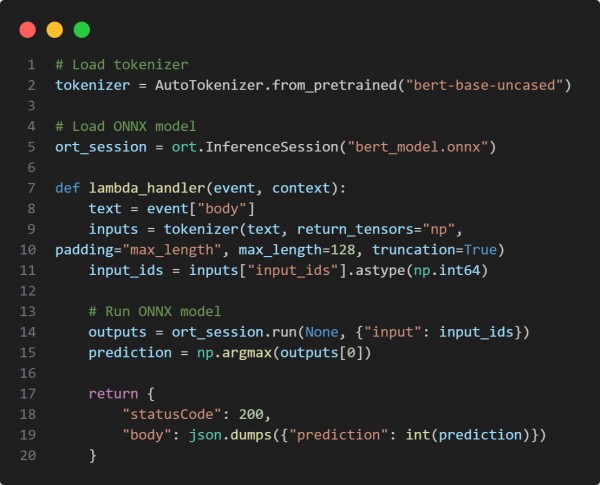
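A minimal sketch of the conversion step, assuming a BERT-style checkpoint loaded through Hugging Face `transformers`. The checkpoint name `bert-base-uncased`, the `tokenizer` output folder, the opset version, and the choice of the base `AutoModel` (rather than a task-specific head) are assumptions for illustration; only the file name `bert_model.onnx` and the use of `torch.onnx.export` come from the steps here. Run it once on a development machine, not inside Lambda.

```python
# export_model.py: sketch of a one-off export script (names below are assumptions).

MODEL_NAME = "bert-base-uncased"   # assumed checkpoint; swap in your own model
ONNX_PATH = "bert_model.onnx"      # file name used in the packaging step

# Mark batch size and sequence length as dynamic so inputs of any length work.
DYNAMIC_AXES = {
    "input_ids": {0: "batch", 1: "sequence"},
    "attention_mask": {0: "batch", 1: "sequence"},
    "last_hidden_state": {0: "batch", 1: "sequence"},
    "pooler_output": {0: "batch"},
}


def export_to_onnx(model_name=MODEL_NAME, onnx_path=ONNX_PATH):
    # Heavy imports stay inside the function so the module imports cheaply.
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModel.from_pretrained(model_name)
    model.eval()
    model.config.return_dict = False  # return plain tuples, which export cleanly

    # A sample input is needed so the exporter can trace the forward pass.
    sample = tokenizer("Sample input for tracing", return_tensors="pt")

    torch.onnx.export(
        model,
        (sample["input_ids"], sample["attention_mask"]),
        onnx_path,
        input_names=["input_ids", "attention_mask"],
        output_names=["last_hidden_state", "pooler_output"],
        dynamic_axes=DYNAMIC_AXES,
        opset_version=14,  # assumed; pick a version your torch/onnxruntime support
    )
    # Save tokenizer files locally so the Lambda function needs no network access.
    tokenizer.save_pretrained("tokenizer")
    return onnx_path


if __name__ == "__main__":
    print("Exported to", export_to_onnx())
```

The input names chosen here (`input_ids`, `attention_mask`) are the keys the inference code must use when it feeds the ONNX Runtime session.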

2. AWS Lambda Inference Script (handler.py)
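A sketch of what `handler.py` could look like, assuming the model was exported with inputs named `input_ids` and `attention_mask` and that the tokenizer files are bundled in a local `tokenizer` folder. The environment variables `MODEL_PATH` and `TOKENIZER_DIR`, the `"text"` request field, and the helper names are hypothetical choices for this example, not part of any AWS or ONNX Runtime API.

```python
# handler.py: sketch of a Lambda entry point for ONNX Runtime inference.
import json
import os

MODEL_PATH = os.environ.get("MODEL_PATH", "bert_model.onnx")  # assumed env var
TOKENIZER_DIR = os.environ.get("TOKENIZER_DIR", "tokenizer")  # assumed env var

_session = None
_tokenizer = None


def _load():
    """Create the ONNX Runtime session and tokenizer once per warm container."""
    global _session, _tokenizer
    if _session is None:
        import onnxruntime as ort
        from transformers import AutoTokenizer

        _session = ort.InferenceSession(MODEL_PATH, providers=["CPUExecutionProvider"])
        _tokenizer = AutoTokenizer.from_pretrained(TOKENIZER_DIR)
    return _session, _tokenizer


def parse_text(event):
    """Read "text" from an API Gateway proxy event or a direct invocation."""
    body = event.get("body", event)
    if isinstance(body, str):
        body = json.loads(body)
    text = body.get("text") if isinstance(body, dict) else None
    if not isinstance(text, str) or not text.strip():
        raise ValueError('request must contain a non-empty "text" field')
    return text


def build_response(status_code, payload):
    """Shape the return value the way API Gateway proxy integration expects."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event, context):
    try:
        text = parse_text(event)
    except ValueError as exc:  # also covers malformed JSON (JSONDecodeError)
        return build_response(400, {"error": str(exc)})

    session, tokenizer = _load()
    encoded = tokenizer(text, return_tensors="np", truncation=True, max_length=128)
    outputs = session.run(
        None,  # None requests every graph output
        {
            "input_ids": encoded["input_ids"],
            "attention_mask": encoded["attention_mask"],
        },
    )
    # outputs[0] is the first graph output; what it means depends on the export.
    return build_response(200, {"output": outputs[0].tolist()})
```

Loading the session lazily and caching it in module-level variables means the cost is paid on the first request of each container rather than on every invocation.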

3. Deploy to AWS Lambda

- Package handler.py, bert_model.onnx, and dependencies (onnxruntime, transformers).
- Create a Lambda function, upload the package, and configure API Gateway for HTTP requests.
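The packaging step can be sketched with the standard library alone. This example assumes the dependencies were already installed into a local folder (for instance with `pip install onnxruntime transformers -t package/`); the script name, the `package` folder, and `lambda_package.zip` are hypothetical names chosen for illustration.

```python
# build_package.py: sketch that zips the handler, model, and a dependency folder.
import zipfile
from pathlib import Path


def build_package(zip_path, files, deps_dir=None):
    """Write `files` at the archive root and the contents of `deps_dir` beside them."""
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for f in map(Path, files):
            zf.write(f, arcname=f.name)
        if deps_dir is not None:
            root = Path(deps_dir)
            for p in sorted(root.rglob("*")):
                if p.is_file():
                    # Keep paths relative so packages import from the archive root.
                    zf.write(p, arcname=p.relative_to(root).as_posix())
    return Path(zip_path)


if __name__ == "__main__":
    pkg = build_package(
        "lambda_package.zip",
        ["handler.py", "bert_model.onnx"],
        deps_dir="package",  # assumed folder holding the pip-installed dependencies
    )
    print(pkg, round(pkg.stat().st_size / 1e6, 1), "MB")
```

Checking the size matters: Lambda limits a zip deployment to 250 MB unzipped, and a full-precision BERT-base model alone is larger than that, so a model of that size would likely need a container image (up to 10 GB) or a smaller or quantized model instead.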

The code above relies on the following key points:

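- ONNX Optimization (torch.onnx.export(...)): Exports the model to ONNX so that ONNX Runtime can apply graph optimizations, which typically speeds up CPU inference.
- Lightweight Deployment (onnxruntime): Runs the model without a full PyTorch installation, which keeps the package small enough to be practical on Lambda.
- Tokenization in Lambda (tokenizer(text, return_tensors="np")): Converts the input text into NumPy arrays that ONNX Runtime accepts.
- AWS API Gateway Integration: Exposes the function over HTTP so that external clients can send requests.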

Hence, deploying an ONNX-optimized model on AWS Lambda can provide fast and scalable inference.
