To reduce repetitive token generation when using a language model for creative writing, combine sampling techniques such as top-k sampling, top-p (nucleus) sampling, and temperature control with a repetition penalty.

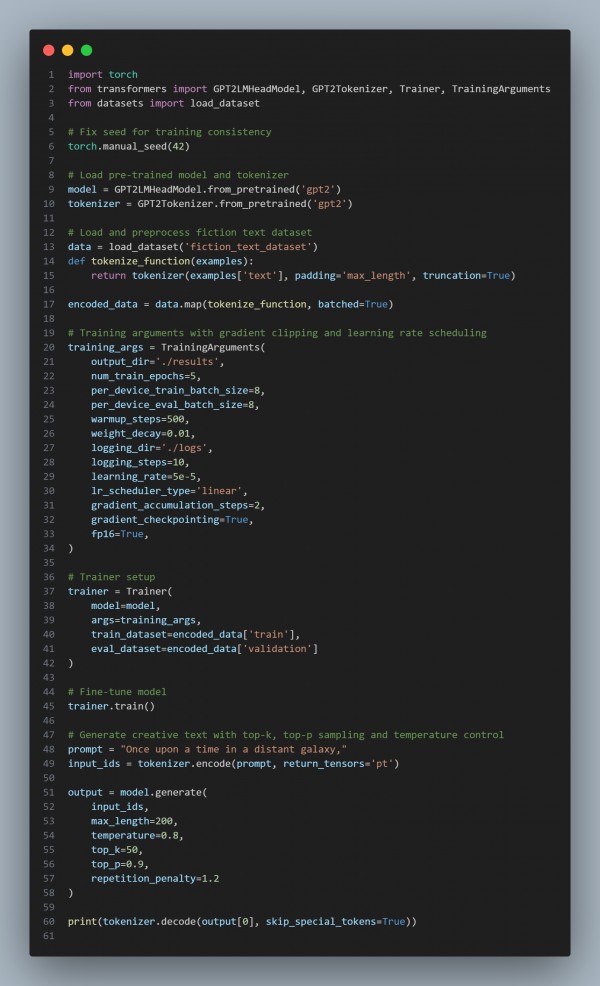

Here is the code snippet you can refer to:
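The snippet is not reproduced as text in this post, so what follows is only a minimal sketch of what such a call might look like, not the original code. It assumes the Hugging Face `transformers` library with PyTorch installed; the `gpt2` checkpoint, the `max_new_tokens` cap, and the `generate_story` helper name are illustrative assumptions, while the four sampling values are the ones discussed in this answer.

```python
# Sampling settings discussed in this answer, passed straight to `generate`.
GENERATION_KWARGS = {
    "do_sample": True,          # sample instead of greedy decoding
    "temperature": 0.8,         # < 1 sharpens the distribution slightly
    "top_k": 50,                # keep only the 50 most probable tokens
    "top_p": 0.9,               # then keep the nucleus holding 90% of the mass
    "repetition_penalty": 1.2,  # down-weight tokens that already appeared
    "max_new_tokens": 100,      # assumed length cap, not from the original post
}


def generate_story(prompt, model_name="gpt2"):
    """Hypothetical helper: generate a continuation of `prompt`."""
    # Imported lazily so the settings above can be inspected
    # even on a machine without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name)

    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        pad_token_id=tokenizer.eos_token_id,  # GPT-2 defines no pad token
        **GENERATION_KWARGS,
    )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate_story("Once upon a time in a distant galaxy,")` downloads the checkpoint on first use and returns a different continuation on each run, since sampling is enabled.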

The code above uses the following settings:

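- Sets the temperature to 0.8. Values below 1 sharpen the distribution toward likely tokens and values above 1 flatten it, so 0.8 balances creativity and coherence.

- Applies top-k sampling (k = 50), so each step samples only from the 50 most probable tokens.

- Applies top-p (nucleus) sampling (p = 0.9), which keeps the smallest set of tokens whose cumulative probability reaches 0.9, so the candidate pool grows or shrinks with the model's confidence.

- Adds a repetition penalty (1.2), which lowers the scores of tokens that have already been generated and so discourages repeated output.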
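To make the four settings concrete, here is a rough pure-Python sketch of how they might reshape a single next-token distribution. It is an illustration under stated assumptions, not any library's implementation: the function names are hypothetical, the logits are a plain list indexed by token id, and the order of operations (penalty, temperature, top-k, then top-p on the renormalised top-k set) is one common choice.

```python
import math
import random


def apply_repetition_penalty(logits, generated_ids, penalty=1.2):
    """Lower the score of every token id that was already generated."""
    out = list(logits)
    for tok in set(generated_ids):
        # Dividing a positive logit or multiplying a negative one
        # both reduce that token's probability.
        out[tok] = out[tok] / penalty if out[tok] > 0 else out[tok] * penalty
    return out


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; lower temperature gives a sharper result."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def filter_top_k_top_p(probs, top_k=50, top_p=0.9):
    """Keep the top_k tokens, then the smallest prefix reaching top_p mass."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    k_mass = sum(probs[i] for i in ranked)
    kept, mass = [], 0.0
    for i in ranked:
        kept.append(i)
        mass += probs[i] / k_mass  # cumulative mass within the top-k set
        if mass >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}  # renormalised candidates


def sample_next_token(logits, generated_ids, temperature=0.8, top_k=50,
                      top_p=0.9, repetition_penalty=1.2, rng=random):
    """Draw one token id using all four settings."""
    penalised = apply_repetition_penalty(logits, generated_ids, repetition_penalty)
    probs = softmax(penalised, temperature)
    candidates = filter_top_k_top_p(probs, top_k, top_p)
    tokens = list(candidates)
    weights = [candidates[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]
```

For example, `sample_next_token([2.0, 1.0, 0.5, -1.0], generated_ids=[0])` penalises token 0 before sampling, so the tokens that have not yet appeared become relatively more likely.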

Together, these settings produce more diverse, creative, and less repetitive text.
