Underperformance in text generation models can often be addressed by fine-tuning on high-quality, domain-specific data, tuning hyperparameters, using better decoding strategies, and applying techniques such as reinforcement learning or knowledge distillation.

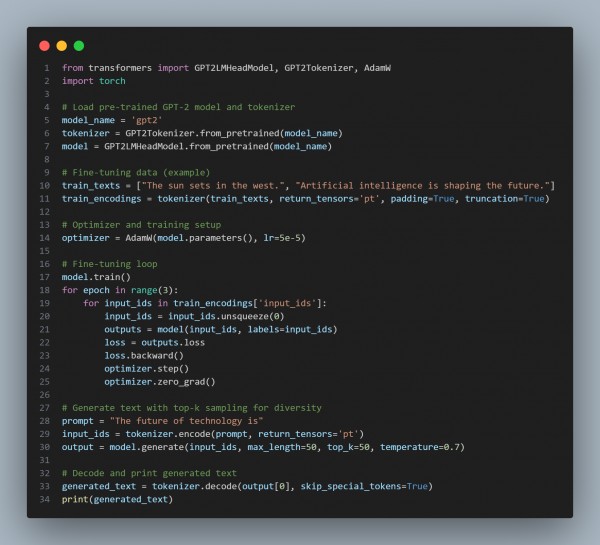

Here is the code snippet you can refer to:
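
A minimal sketch of what such a snippet might look like, assuming the Hugging Face `transformers` library and PyTorch. The function names `fine_tune`, `sample`, and `main`, the two-sentence corpus, and all hyperparameter values are illustrative assumptions, not taken from the original answer:

```python
import torch
from torch.optim import AdamW
from transformers import GPT2LMHeadModel, GPT2TokenizerFast


def fine_tune(model, input_ids, attention_mask, epochs=3, lr=5e-5):
    """Run a few AdamW steps on one tokenized batch; return the loss per step."""
    optimizer = AdamW(model.parameters(), lr=lr)
    # Use the inputs as labels (the model shifts them internally) and
    # mask padding positions with -100 so they do not contribute to the loss.
    labels = input_ids.clone()
    labels[attention_mask == 0] = -100
    model.train()
    losses = []
    for _ in range(epochs):
        optimizer.zero_grad()
        out = model(input_ids=input_ids, attention_mask=attention_mask, labels=labels)
        out.loss.backward()
        optimizer.step()
        losses.append(out.loss.item())
    return losses


def sample(model, input_ids, attention_mask, max_new_tokens=40,
           top_k=50, temperature=0.7, pad_token_id=None):
    """Generate a continuation with top-k sampling and temperature scaling."""
    model.eval()
    with torch.no_grad():
        return model.generate(
            input_ids=input_ids,
            attention_mask=attention_mask,
            max_new_tokens=max_new_tokens,
            do_sample=True,          # sample instead of greedy decoding
            top_k=top_k,             # keep only the k most likely tokens
            temperature=temperature, # values below 1 sharpen the distribution
            pad_token_id=pad_token_id,
        )


def main():
    tokenizer = GPT2TokenizerFast.from_pretrained("gpt2")
    tokenizer.pad_token = tokenizer.eos_token  # GPT-2 ships without a pad token
    model = GPT2LMHeadModel.from_pretrained("gpt2")

    # Placeholder domain corpus (assumption); replace with real domain text.
    corpus = [
        "The patient was prescribed a low dose of the medication.",
        "Follow-up imaging showed no change in the lesion.",
    ]
    batch = tokenizer(corpus, return_tensors="pt", padding=True,
                      truncation=True, max_length=64)
    print(fine_tune(model, batch["input_ids"], batch["attention_mask"]))

    prompt = tokenizer("The patient", return_tensors="pt")
    output = sample(model, prompt["input_ids"], prompt["attention_mask"],
                    pad_token_id=tokenizer.eos_token_id)
    print(tokenizer.decode(output[0], skip_special_tokens=True))
```

Calling `main()` downloads the pre-trained `gpt2` weights, runs a few training steps on the placeholder corpus, and prints a sampled continuation. A real fine-tuning run would iterate over many batches of domain data and track loss on a held-out validation set.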

The code above does the following:

- Fine-tunes the pre-trained GPT-2 model on domain-specific data to improve task performance.

- Uses AdamW optimizer for efficient training and weight updates.

- Applies top-k sampling and temperature control to generate more diverse and fluent outputs.
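
To make the decoding step concrete, here is a small standard-library sketch of what top-k sampling with temperature does for a single token. The function name `top_k_sample` and the example logits are hypothetical; real libraries perform the same idea on tensors:

```python
import math
import random


def top_k_sample(logits, k=50, temperature=0.7, rng=random):
    """Sample one token index from the k highest-scoring logits."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    k = min(k, len(logits))
    # Keep only the indices of the k largest logits.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Temperature below 1 sharpens the distribution, above 1 flattens it.
    scaled = [logits[i] / temperature for i in top]
    # Softmax weights, shifted by the max for numerical stability.
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(top, weights=weights, k=1)[0]


# Example: with k=2 only the two highest-scoring tokens (indices 4 and 0) can be drawn.
example_logits = [2.0, 0.5, 1.0, -1.0, 3.0]
token = top_k_sample(example_logits, k=2, temperature=0.7)
```

A smaller `k` or lower temperature makes the output more conservative, while larger values increase diversity at the risk of less coherent text.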

In short, fine-tuning on quality data, tuning the training setup, and using suitable decoding methods can substantially improve a language model’s performance on text generation tasks.

