Poor alignment between generated text and input context can be reduced by improving training data quality, writing context-rich prompts, fine-tuning with supervised learning, and using attention-based techniques that keep the output focused on the relevant context.
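As a rough illustration of the "context-rich prompt" idea, the sketch below puts the supporting context ahead of the question and joins prompt and answer into a single training string. It is only a minimal example under assumptions: the helper names `build_prompt` and `build_training_text`, the prompt wording, and the sample data are all hypothetical and are not taken from the original snippet.

```python
def build_prompt(context: str, question: str) -> str:
    """Place the supporting context ahead of the question so the model conditions on it."""
    return (
        "Answer using only the context below.\n\n"
        f"Context: {context.strip()}\n"
        f"Question: {question.strip()}\n"
        "Answer:"
    )


def build_training_text(context: str, question: str, answer: str) -> str:
    """Join prompt and answer into one string, as a supervised fine-tuning example."""
    return f"{build_prompt(context, question)} {answer.strip()}"


# Illustrative sample only; real training data would come from your own dataset.
example = build_training_text(
    context="The Eiffel Tower was completed in 1889 and stands in Paris.",
    question="When was the Eiffel Tower completed?",
    answer="It was completed in 1889.",
)
print(example)
```

Using the same template at training time and at inference time (stopping at `Answer:`) is one simple way to keep the model's output tied to the supplied context.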

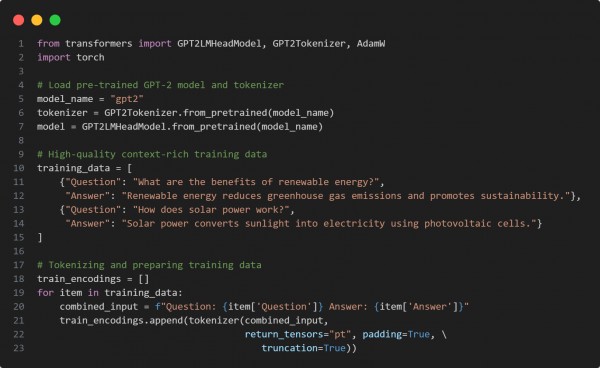

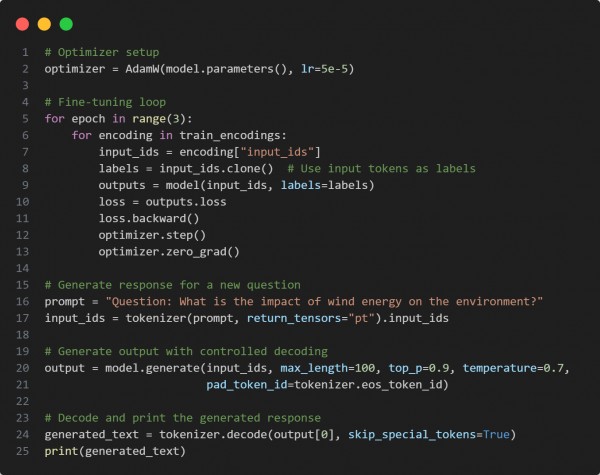

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Uses context-rich training data to improve alignment between input and generated output.

- Combines questions and answers in training to reinforce context retention.

- Applies top-p sampling and temperature control to balance diversity and relevance in the generated text.
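The decoding step in the last point can be sketched in plain Python. This is not the original snippet, only a minimal illustration of temperature scaling followed by top-p (nucleus) sampling; the function names, the default values of `temperature` and `top_p`, and the toy logits are assumptions chosen for the example.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p=0.9):
    """Keep the most likely tokens until their cumulative probability reaches top_p, then renormalize."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def sample_token(logits, temperature=0.7, top_p=0.9, rng=random):
    """Sample one token id from the temperature-scaled, top-p-filtered distribution."""
    nucleus = top_p_filter(softmax_with_temperature(logits, temperature), top_p)
    ids = list(nucleus)
    return rng.choices(ids, weights=[nucleus[i] for i in ids], k=1)[0]


logits = [4.0, 3.5, 1.0, -2.0]  # made-up scores for a four-token vocabulary
print(sample_token(logits, rng=random.Random(0)))
```

With these toy logits, the two low-scoring tokens fall outside the nucleus and can never be sampled, which is how top-p trims unlikely, off-context continuations. Libraries such as Hugging Face Transformers expose the same controls through the `do_sample`, `top_p`, and `temperature` arguments of `generate`.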

Hence, fine-tuning on high-quality, context-rich data and decoding with top-p sampling and temperature control improves the alignment and consistency of generated text with the input context.

Related Post: GPT models on custom datasets
