You can reduce memory consumption in a GPT-based model with techniques such as gradient checkpointing, mixed precision training, and a batch size chosen to fit the available GPU memory.

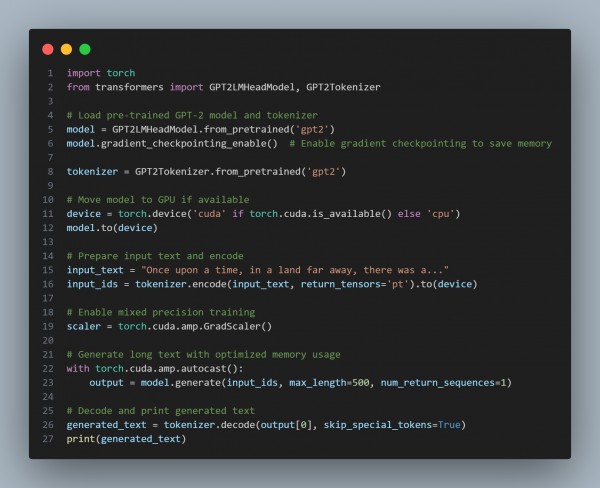

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Uses gradient checkpointing to reduce memory usage during backpropagation: activations are recomputed in the backward pass instead of being stored, which trades extra compute for lower memory.

- Enables mixed precision training (`autocast()`) for a lower memory footprint and faster computation.

- Moves the model and data to the GPU when one is available, for efficiency.
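As a rough illustration of these three points, here is a minimal PyTorch sketch. It is not the original snippet: `TinyBlock`, `TinyLM`, and `train_step` are hypothetical stand-ins for a real GPT model and training loop, and the sizes are arbitrary. It assumes a reasonably recent PyTorch in which `torch.utils.checkpoint.checkpoint` accepts `use_reentrant`. With a Hugging Face `transformers` model, checkpointing is typically switched on with `model.gradient_checkpointing_enable()` instead of calling `checkpoint` by hand.

```python
import torch
import torch.nn as nn
from torch.utils.checkpoint import checkpoint


class TinyBlock(nn.Module):
    """Hypothetical stand-in for one transformer block (illustration only)."""

    def __init__(self, dim):
        super().__init__()
        self.norm = nn.LayerNorm(dim)
        self.ff = nn.Sequential(
            nn.Linear(dim, 4 * dim), nn.GELU(), nn.Linear(4 * dim, dim)
        )

    def forward(self, x):
        return x + self.ff(self.norm(x))


class TinyLM(nn.Module):
    """Hypothetical toy language model; a real GPT would go here."""

    def __init__(self, vocab=100, dim=32, layers=4, use_checkpointing=True):
        super().__init__()
        self.embed = nn.Embedding(vocab, dim)
        self.blocks = nn.ModuleList([TinyBlock(dim) for _ in range(layers)])
        self.head = nn.Linear(dim, vocab)
        self.use_checkpointing = use_checkpointing

    def forward(self, ids):
        x = self.embed(ids)
        for block in self.blocks:
            if self.use_checkpointing and self.training:
                # Do not keep this block's activations; recompute them
                # during the backward pass to save memory.
                x = checkpoint(block, x, use_reentrant=False)
            else:
                x = block(x)
        return self.head(x)


def train_step(model, optimizer, scaler, ids, device):
    use_amp = device.type == "cuda"  # assumption: mixed precision only on GPU
    amp_dtype = torch.float16 if use_amp else torch.bfloat16
    ids = ids.to(device)  # move the batch to the same device as the model
    optimizer.zero_grad(set_to_none=True)
    with torch.autocast(device_type=device.type, dtype=amp_dtype, enabled=use_amp):
        logits = model(ids[:, :-1])
        # Next-token prediction loss: targets are the inputs shifted by one.
        loss = nn.functional.cross_entropy(
            logits.reshape(-1, logits.size(-1)), ids[:, 1:].reshape(-1)
        )
    # GradScaler guards against float16 gradient underflow; it is a no-op
    # when disabled (CPU run).
    scaler.scale(loss).backward()
    scaler.step(optimizer)
    scaler.update()
    return loss.item()


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = TinyLM().to(device).train()
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3)
scaler = torch.cuda.amp.GradScaler(enabled=device.type == "cuda")
batch = torch.randint(0, 100, (2, 16))  # random token ids, placeholder data
loss_value = train_step(model, optimizer, scaler, batch, device)
```

If memory is still tight, reducing the batch size (here the first dimension of `batch`) is usually the simplest remaining lever.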

Hence, techniques like gradient checkpointing and mixed precision training reduce the memory footprint of a GPT-based model, which makes out-of-memory errors much less likely when working with long text sequences.
