You can address multicollinearity in linear regression by using Ridge regression in scikit-learn, which adds an L2 penalty on the coefficients so that they are less sensitive to correlated features.

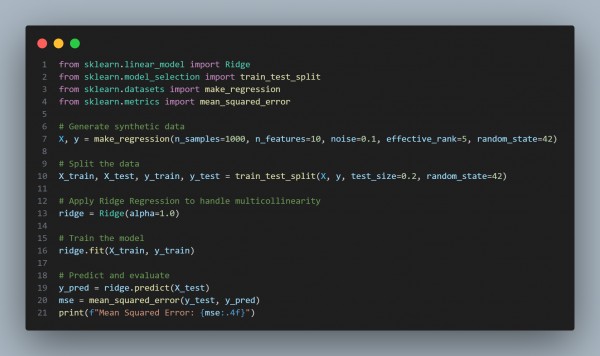

Here is the code snippet you can refer to:
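
A minimal sketch consistent with the key points listed below. The sample count, feature count, noise level, train/test split, and random seeds are assumptions chosen for illustration:

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Synthetic data (assumed sizes): effective_rank=5 makes the 20 features
# approximately low-rank, so they are strongly correlated with one another.
X, y = make_regression(
    n_samples=200,
    n_features=20,
    effective_rank=5,
    noise=0.1,
    random_state=42,
)

# Hold out 20% of the rows for evaluation (assumed split).
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Ridge regression: least squares plus an L2 penalty scaled by alpha.
model = Ridge(alpha=1.0)
model.fit(X_train, y_train)

# Evaluate on the held-out rows.
y_pred = model.predict(X_test)
mse = mean_squared_error(y_test, y_pred)
print(f"Test MSE: {mse:.4f}")
```

The printed MSE depends on the assumed data settings, so treat it as illustrative only.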

The code above relies on the following key points:

- Ridge(alpha=1.0) applies L2 regularization, shrinking coefficients to mitigate multicollinearity.

- make_regression() with effective_rank=5 generates an approximately low-rank feature matrix, so the features are strongly correlated, which simulates multicollinearity.

- mean_squared_error() evaluates the model’s predictive accuracy.

- alpha controls the regularization strength: higher values increase the penalty on large coefficients.
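
To illustrate the last point, the following sketch fits Ridge at several alpha values and prints the L2 norm of the fitted coefficients. The alpha grid and the data settings are assumptions for illustration, not values from the original answer:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge

# Same kind of correlated synthetic data as before (assumed sizes).
X, y = make_regression(
    n_samples=200,
    n_features=20,
    effective_rank=5,
    noise=0.1,
    random_state=42,
)

# Hypothetical grid of regularization strengths, from weak to strong.
alphas = [0.01, 0.1, 1.0, 10.0]
coef_norms = []

for alpha in alphas:
    coef = Ridge(alpha=alpha).fit(X, y).coef_
    norm = float(np.linalg.norm(coef))  # L2 norm of the coefficient vector
    coef_norms.append(norm)
    print(f"alpha={alpha:<6} ||coef||_2 = {norm:.4f}")
```

The coefficient norm shrinks as alpha grows. In practice, alpha is usually chosen by cross-validation, for example with RidgeCV.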

Hence, Ridge regression reduces the impact of multicollinearity by stabilizing the coefficient estimates, which typically improves how well the model generalizes to unseen data.
