To reduce training instability when using a VAE for a semi-supervised learning task, consider the following key points:

- Use a Weighted Loss: Weight the supervised loss on the labeled data so that it is not swamped by the typically much larger reconstruction term.

- Regularization: Include the KL divergence term, which pulls the approximate posterior toward the prior and keeps the latent space smooth.

- Adjust Learning Rate: Decrease the learning rate or use an adaptive learning rate method (e.g., the Adam optimizer).

- Batch Normalization: Use batch normalization in the encoder/decoder to stabilize training.

- Semi-Supervised Loss: Combine unsupervised reconstruction loss with supervised classification loss for labeled data.
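As a rough illustration of how the points above can fit together, here is a minimal PyTorch sketch of a combined loss. The function name `semi_supervised_vae_loss`, the weights `beta` and `alpha`, their default values, and the use of mean squared error for reconstruction are all assumptions made for this example, not a prescribed implementation.

```python
import torch
import torch.nn.functional as F


def semi_supervised_vae_loss(x, x_recon, mu, logvar,
                             logits=None, labels=None,
                             beta=1.0, alpha=10.0):
    """Reconstruction + beta * KL, plus alpha * cross-entropy for labeled batches.

    beta and alpha are hypothetical weights chosen for illustration only.
    """
    batch_size = x.size(0)

    # Unsupervised reconstruction term: summed over features, averaged over the batch.
    recon = F.mse_loss(x_recon, x, reduction="sum") / batch_size

    # Closed-form KL divergence between N(mu, sigma^2) and the prior N(0, I).
    kl = -0.5 * torch.sum(1 + logvar - mu.pow(2) - logvar.exp()) / batch_size

    loss = recon + beta * kl

    # Supervised classification term: only added when labels are provided.
    if logits is not None and labels is not None:
        loss = loss + alpha * F.cross_entropy(logits, labels)

    return loss, recon, kl
```

Unlabeled batches simply omit `logits` and `labels`, so only the reconstruction and KL terms contribute. Suitable values for `alpha` and `beta` depend on the data and usually need tuning.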

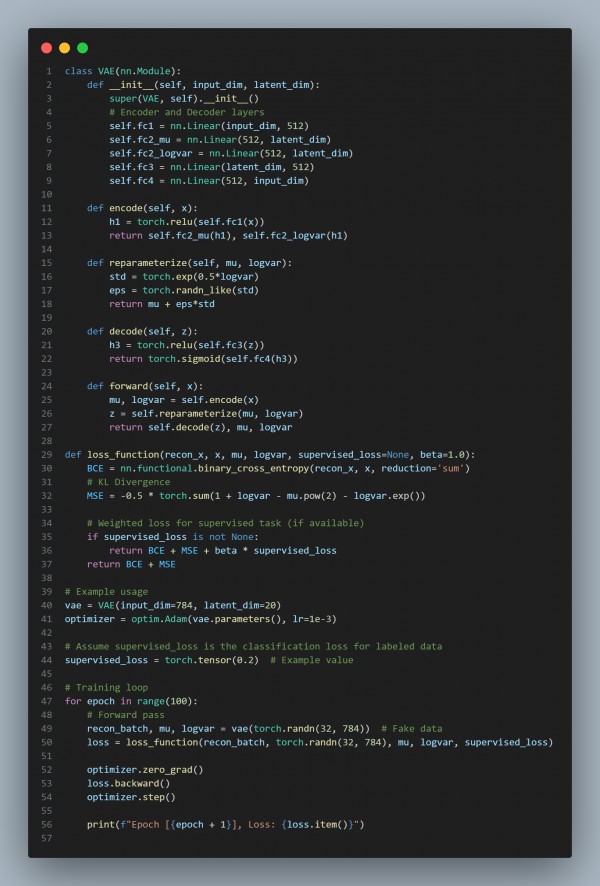

Here is the code snippet you can refer to:

The above code relies on the following key points:

- Weighted Loss: Balances reconstruction and supervised classification tasks.

- KL Divergence: Regularizes the latent space to prevent overfitting.

- Adaptive Optimizer: Uses Adam to adapt per-parameter learning rates during training.
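To make the batch normalization and optimizer points concrete, the following is a small PyTorch sketch of a model and a single training step. The class name `SemiSupervisedVAE`, the helper `train_step`, the layer sizes, the loss weights `alpha` and `beta`, the learning rate, and the random toy data are all assumptions for illustration. The loss is computed inline so the snippet runs on its own.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SemiSupervisedVAE(nn.Module):
    """Toy VAE with batch-normalized encoder/decoder and a classifier head (hypothetical sizes)."""

    def __init__(self, input_dim=20, hidden_dim=32, latent_dim=4, num_classes=3):
        super().__init__()
        self.encoder = nn.Sequential(
            nn.Linear(input_dim, hidden_dim), nn.BatchNorm1d(hidden_dim), nn.ReLU())
        self.fc_mu = nn.Linear(hidden_dim, latent_dim)
        self.fc_logvar = nn.Linear(hidden_dim, latent_dim)
        self.decoder = nn.Sequential(
            nn.Linear(latent_dim, hidden_dim), nn.BatchNorm1d(hidden_dim), nn.ReLU(),
            nn.Linear(hidden_dim, input_dim))
        self.classifier = nn.Linear(latent_dim, num_classes)

    def forward(self, x):
        h = self.encoder(x)
        mu, logvar = self.fc_mu(h), self.fc_logvar(h)
        # Reparameterization trick: z = mu + eps * sigma.
        z = mu + torch.randn_like(mu) * torch.exp(0.5 * logvar)
        return self.decoder(z), mu, logvar, self.classifier(mu)


def train_step(model, optimizer, x, labels=None, beta=1.0, alpha=10.0):
    """One optimizer update; the classification term is used only when labels are given."""
    model.train()
    optimizer.zero_grad()
    x_recon, mu, logvar, logits = model(x)
    recon = F.mse_loss(x_recon, x, reduction="sum") / x.size(0)
    kl = -0.5 * torch.sum(1 + logvar - mu.pow(2) - logvar.exp()) / x.size(0)
    loss = recon + beta * kl
    if labels is not None:
        loss = loss + alpha * F.cross_entropy(logits, labels)
    loss.backward()
    optimizer.step()
    return loss.item()


torch.manual_seed(0)
model = SemiSupervisedVAE()
# Adam with a modest learning rate; 1e-3 is an assumed starting point, not a recommendation.
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

# Random toy data standing in for one labeled and one unlabeled batch.
x_labeled, y = torch.randn(16, 20), torch.randint(0, 3, (16,))
x_unlabeled = torch.randn(16, 20)

labeled_loss = train_step(model, optimizer, x_labeled, y)
unlabeled_loss = train_step(model, optimizer, x_unlabeled)
```

In practice, labeled and unlabeled batches would come from real data loaders and be alternated or mixed within each epoch.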

Hence, these steps help stabilize training in semi-supervised VAE tasks by ensuring a balance between labeled and unlabeled data.
