To train a multimodal model in Keras that combines text and images, create separate CNN (for images) and LSTM/Transformer (for text) encoders, concatenate their feature embeddings, and train a joint model for classification or regression tasks.
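A minimal sketch of this approach, written with the Keras functional API, is shown below. It is an illustrative reconstruction, not the exact code from the snippet image. The function name `build_multimodal_model`, the input shapes, the vocabulary size, and the three-class softmax head are all assumptions. The random arrays are placeholders for real images, tokenized text (integer IDs), and labels.

```python
import numpy as np
import keras
from keras import layers


def build_multimodal_model(image_shape=(64, 64, 3), vocab_size=1000,
                           max_len=20, num_classes=3):
    # Image branch: a small CNN that turns each image into a 32-d feature vector
    image_in = keras.Input(shape=image_shape, name="image")
    x = layers.Conv2D(16, 3, activation="relu")(image_in)
    x = layers.MaxPooling2D()(x)
    x = layers.Conv2D(32, 3, activation="relu")(x)
    x = layers.GlobalAveragePooling2D()(x)

    # Text branch: integer token IDs -> dense embeddings -> LSTM summary vector
    text_in = keras.Input(shape=(max_len,), dtype="int32", name="text")
    t = layers.Embedding(input_dim=vocab_size, output_dim=64)(text_in)
    t = layers.LSTM(32)(t)

    # Fusion: concatenate both feature vectors, then a joint classification head
    z = layers.Concatenate()([x, t])
    z = layers.Dense(64, activation="relu")(z)
    z = layers.Dropout(0.3)(z)
    out = layers.Dense(num_classes, activation="softmax")(z)

    model = keras.Model(inputs=[image_in, text_in], outputs=out)
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model


# Random placeholder data standing in for real (image, token IDs, label) triples
rng = np.random.default_rng(0)
images = rng.random((32, 64, 64, 3), dtype=np.float32)
tokens = rng.integers(1, 1000, size=(32, 20)).astype("int32")
labels = rng.integers(0, 3, size=(32,))

model = build_multimodal_model()
model.fit([images, tokens], labels, epochs=1, batch_size=8, verbose=0)
probs = model.predict([images[:4], tokens[:4]], verbose=0)
print(probs.shape)  # (4, 3)
```

For a regression task, the same structure applies. You would replace the final layer with `layers.Dense(1)` and compile with a loss such as `"mse"`.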

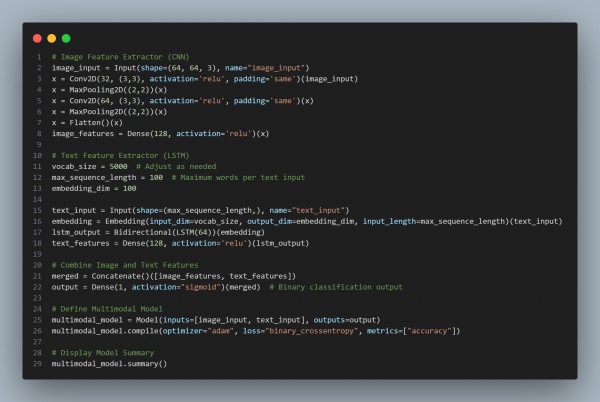

Here is the code snippet given below:

The code above uses the following techniques:

- Uses Separate CNN & LSTM for Feature Extraction:
  - The CNN extracts spatial features from images, while the LSTM captures sequential dependencies in the text.

- Embeds Text Features Using an Embedding Layer:
  - Maps integer token IDs to dense vector representations before they are passed to the LSTM.

- Merges Features Using the Concatenate() Layer:
  - Joins the image and text feature vectors into a single vector, so the layers after it learn from both modalities jointly.

- Supports Custom Architectures (Transformers, ResNet, BERT):
  - The LSTM can be replaced with a Transformer or BERT encoder, and the CNN with ResNet or Inception. This often improves results.

- Trains on Multimodal Data for Better Predictions:
  - Useful for tasks such as image captioning, visual question answering, and medical AI.

Hence, Keras enables multimodal learning by fusing a CNN (for images) with an LSTM or Transformer (for text), so that a single model can make predictions from multiple data modalities.
