Multi-task pre-training improves generative AI models by training a single model on several tasks at once, so it learns shared representations that generalize across domains.

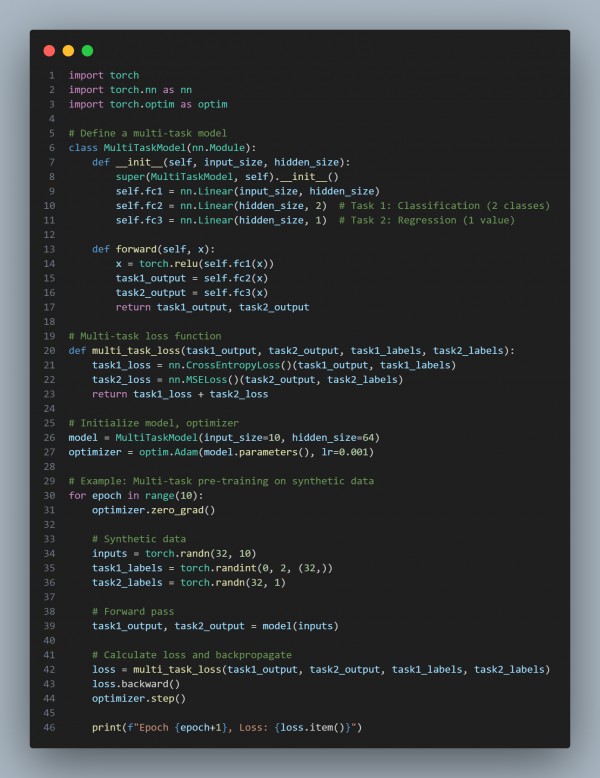

Here is the code snippet you can refer to:
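A minimal sketch of such a setup, assuming PyTorch. The class name `MultiTaskModel`, the layer sizes, the synthetic data, and the `reg_weight` value are all illustrative assumptions, not values taken from the original answer:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # make the sketch reproducible


class MultiTaskModel(nn.Module):
    """Shared encoder with one head per task (hypothetical sizes)."""

    def __init__(self, in_dim=16, hidden_dim=32, num_classes=3):
        super().__init__()
        # Shared layers: both tasks read from this representation.
        self.shared = nn.Sequential(nn.Linear(in_dim, hidden_dim), nn.ReLU())
        # Task-specific output layers.
        self.cls_head = nn.Linear(hidden_dim, num_classes)  # classification
        self.reg_head = nn.Linear(hidden_dim, 1)            # regression

    def forward(self, x):
        h = self.shared(x)
        return self.cls_head(h), self.reg_head(h).squeeze(-1)


# Synthetic data standing in for a real multi-task dataset (assumption).
x = torch.randn(64, 16)
y_cls = torch.randint(0, 3, (64,))
y_reg = torch.randn(64)

model = MultiTaskModel()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)
cls_loss_fn = nn.CrossEntropyLoss()
reg_loss_fn = nn.MSELoss()
reg_weight = 0.5  # assumed weighting between the two task losses

losses = []
for step in range(50):
    optimizer.zero_grad()
    logits, pred = model(x)
    # Combined loss: gradients from both tasks flow into the shared layers.
    loss = cls_loss_fn(logits, y_cls) + reg_weight * reg_loss_fn(pred, y_reg)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

print(f"combined loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

In practice the loss weight usually needs tuning, since one task's loss can otherwise dominate the shared layers.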

The code illustrates the following key points:

- Multi-task Model: The model has two separate output layers for different tasks: classification and regression.

- Loss Function: A combined loss function optimizes the model for both tasks simultaneously, encouraging shared representations.

- Cross-domain Generalization: Training on multiple tasks pushes the shared layers toward features that are useful beyond any single task, which can improve performance on related tasks the model has not seen before.
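The combined loss from the points above can be sketched as a weighted sum of the two task losses. The weights $\lambda_{\text{cls}}$ and $\lambda_{\text{reg}}$ are assumptions introduced here for illustration; the original answer does not specify how the two terms are balanced:

$$
\mathcal{L}_{\text{total}} = \lambda_{\text{cls}}\,\mathcal{L}_{\text{cls}} + \lambda_{\text{reg}}\,\mathcal{L}_{\text{reg}}
$$

Because both terms backpropagate through the same shared layers, each task's gradient shapes the shared representation.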

Hence, multi-task pre-training improves cross-domain generalization by enabling the model to learn shared representations, making it effective across diverse tasks.
