Loss weighting strategies control how much each task contributes to the total loss in a multi-task learning scenario. By adjusting the weight assigned to each task's loss function, you can prioritize certain tasks over others, which can help the model converge more effectively and lets you balance trade-offs in per-task performance.

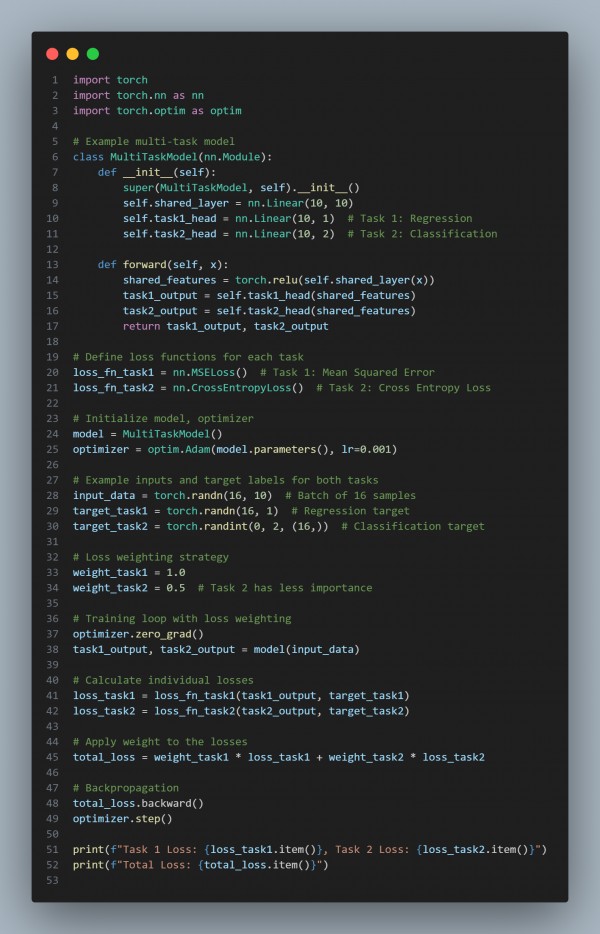

Here is the code snippet you can refer to:

The code above illustrates the following key points:

- Loss Balancing: Loss weighting helps keep a single task's loss from dominating training, which can improve overall model performance across multiple objectives.

- Task Prioritization: Adjusting weights allows you to prioritize tasks that are more important or harder to learn.

- Improved Convergence: Well-chosen loss weights can lead to more stable and faster convergence in multi-task learning scenarios.
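As a rough illustration of the points above, here is a minimal plain-Python sketch of fixed loss weighting, where the total loss is a weighted sum of the per-task losses. The task names, loss values, weights, and the helper functions `combine_losses` and `normalize_weights` are all hypothetical and chosen only for this example; they are not taken from the original snippet.

```python
from typing import Dict


def normalize_weights(weights: Dict[str, float]) -> Dict[str, float]:
    """Rescale weights so they sum to 1 while keeping their ratios."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return {task: w / total for task, w in weights.items()}


def combine_losses(losses: Dict[str, float], weights: Dict[str, float]) -> float:
    """Return the weighted sum of per-task losses."""
    missing = set(losses) - set(weights)
    if missing:
        raise KeyError(f"no weight given for tasks: {sorted(missing)}")
    return sum(weights[task] * value for task, value in losses.items())


# Hypothetical per-task losses from a single training step.
losses = {"classification": 0.5, "regression": 2.0}

# Hypothetical priorities: classification counts three times as much.
weights = normalize_weights({"classification": 3.0, "regression": 1.0})

# 0.75 * 0.5 + 0.25 * 2.0
total_loss = combine_losses(losses, weights)
print(total_loss)  # 0.875
```

Without weighting, the regression loss (2.0) would contribute four times as much as the classification loss (0.5); with these weights the two contributions are 0.5 and 0.375, so neither task dominates. In a framework such as PyTorch, the same weighted sum can be applied to loss tensors before calling `backward()` on the result.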
