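Token masking trains a model to predict tokens that have been hidden or corrupted. Because the model sees deliberately incomplete inputs during training, it learns to cope with the kinds of imperfections found in real-world text and becomes more robust to noisy inputs.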

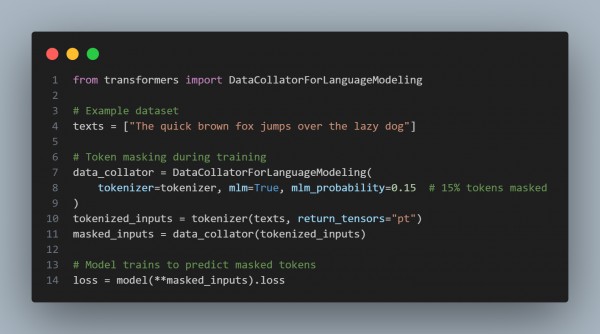

Here is the code snippet you can refer to:

The code above uses the following:

- Masked Language Modeling (MLM): `mlm=True` enables token masking.

- Masking Probability: `mlm_probability=0.15` selects each token for masking with probability 0.15, so about 15% of the tokens are masked on average.

- Robust Training: the model predicts the masked tokens and, in doing so, learns to handle noisy or incomplete inputs.
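Because the snippet is only available as an image, here is a minimal, dependency-free sketch of what a masking step with `mlm_probability=0.15` does. It assumes the BERT-style scheme, which is also, to my understanding, the default behaviour of Hugging Face's `DataCollatorForLanguageModeling`: of the selected tokens, 80% become a mask token, 10% become a random token, and 10% are left unchanged. Only selected positions get a real label; all others get `-100`, the default `ignore_index` of PyTorch's `CrossEntropyLoss`, so the loss is computed only on the tokens the model has to recover. The function name `mask_tokens`, the constant `IGNORE_INDEX`, and the token IDs in the usage example are hypothetical, chosen for illustration.

```python
import random
from typing import AbstractSet, List, Optional, Tuple

# Label for positions the loss should skip (PyTorch CrossEntropyLoss's default ignore_index).
IGNORE_INDEX = -100


def mask_tokens(
    token_ids: List[int],
    mask_token_id: int,
    vocab_size: int,
    mlm_probability: float = 0.15,
    special_ids: AbstractSet[int] = frozenset(),
    rng: Optional[random.Random] = None,
) -> Tuple[List[int], List[int]]:
    """Hypothetical BERT-style masking: returns (corrupted inputs, labels)."""
    rng = rng or random.Random()
    inputs = list(token_ids)
    labels = [IGNORE_INDEX] * len(token_ids)
    for i, tok in enumerate(token_ids):
        # Special tokens (e.g. sequence start/end) are never selected;
        # every other token is selected with probability mlm_probability.
        if tok in special_ids or rng.random() >= mlm_probability:
            continue
        labels[i] = tok  # the model must predict the original token here
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = mask_token_id  # 80%: replace with the mask token
        elif roll < 0.9:
            inputs[i] = rng.randrange(vocab_size)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels


if __name__ == "__main__":
    # Made-up token IDs; 0 and 1 play the role of start/end special tokens.
    ids = [0, 2023, 2003, 1037, 7099, 6251, 4083, 2005, 1]
    masked, labels = mask_tokens(
        ids, mask_token_id=3, vocab_size=30_000,
        special_ids={0, 1}, rng=random.Random(42),
    )
    print("inputs:", masked)
    print("labels:", labels)
```

Keeping 10% of the selected tokens unchanged and swapping another 10% for random tokens means the model cannot assume that only mask tokens need fixing, which is what pushes it to reason about every position and to tolerate genuinely corrupted input.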

Hence, token masking enhances robustness by training models to infer missing or corrupted information, improving performance on noisy real-world data.
