Token prediction anomalies in a text-to-image generator can be addressed by using better tokenization strategies, fine-tuning on high-quality aligned datasets, applying regularization techniques, and monitoring loss convergence during training.

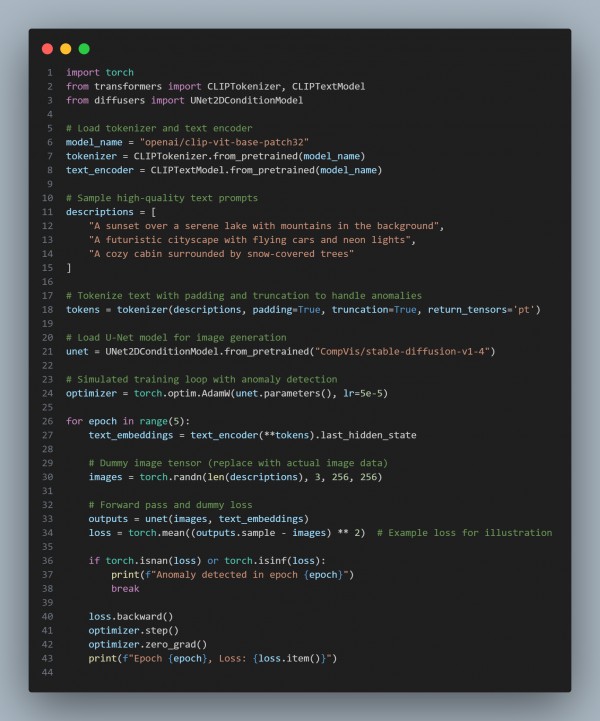

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Uses well-structured, high-quality text descriptions to minimize ambiguous tokens.

- Applies robust tokenization with padding and truncation to prevent misalignment.

- Implements anomaly detection for loss values (NaN or Inf) during training.
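The original snippet is only available as an image. The following is a minimal, standard-library-only sketch of two of the points above: padding and truncation of token ids, and NaN/Inf checks on the loss. It is not the original code. It assumes simple whitespace tokenization and a toy vocabulary, and the names `build_vocab`, `encode`, and `check_loss` are hypothetical helpers for illustration. In a real pipeline you would normally use the model's own tokenizer instead. For example, a Hugging Face tokenizer called with `padding="max_length"`, `truncation=True`, and `max_length=...` does the same job.

```python
import math

PAD_ID = 0  # hypothetical reserved id for padding
UNK_ID = 1  # hypothetical reserved id for out-of-vocabulary words


def build_vocab(captions):
    """Map each lowercase word to an integer id; ids 0 and 1 are reserved."""
    vocab = {"<pad>": PAD_ID, "<unk>": UNK_ID}
    for caption in captions:
        for word in caption.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode(caption, vocab, max_length):
    """Tokenize, truncate to max_length, then right-pad with PAD_ID.

    Returns (input_ids, attention_mask), both exactly max_length long, so every
    caption in a batch lines up with the same number of positions.
    """
    ids = [vocab.get(word, UNK_ID) for word in caption.lower().split()]
    ids = ids[:max_length]           # truncation: drop tokens past the limit
    mask = [1] * len(ids)            # 1 = real token
    padding = max_length - len(ids)
    ids = ids + [PAD_ID] * padding   # padding: fill up to max_length
    mask = mask + [0] * padding      # 0 = padding, ignored by attention
    return ids, mask


def check_loss(step, loss):
    """Raise on a NaN or infinite loss so training stops at the first bad step."""
    if math.isnan(loss) or math.isinf(loss):
        raise FloatingPointError(f"anomalous loss {loss!r} at step {step}")
    return loss


if __name__ == "__main__":
    captions = ["a red car parked on a street", "a cat"]
    vocab = build_vocab(captions)
    for caption in captions:
        print(encode(caption, vocab, max_length=4))
    for step, loss in enumerate([0.9, 0.7, float("nan")]):
        try:
            check_loss(step, loss)
        except FloatingPointError as err:
            print("stopping:", err)
            break
```

The attention mask lets the model ignore padded positions. Raising an error on the first non-finite loss, rather than silently continuing, makes it easier to find the batch or learning-rate setting that caused the problem.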

Hence, by combining careful tokenization, high-quality input data, and close monitoring of model behavior during training, you can mitigate token prediction anomalies and improve the stability of text-to-image generation.
