To reduce output latency in Generative AI chatbots, you can speed up inference by optimizing the model (e.g., quantization, pruning), caching responses to common queries, and using more efficient model architectures (e.g., distilled models).

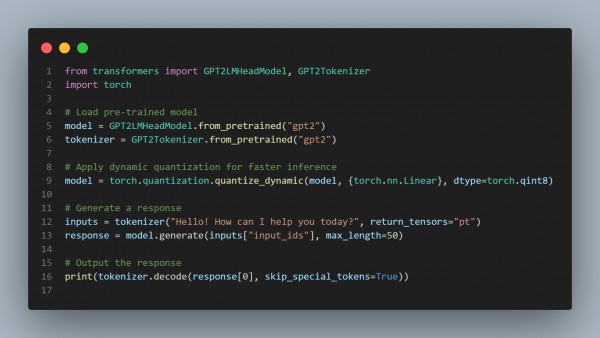

The code snippet applies the following key points:

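- Model Quantization: Stores weights in lower precision (e.g., 8-bit integers instead of 32-bit floats), which reduces model size and speeds up inference.

- Pruning: Removes weights that contribute little to the output, reducing computation time.

- Efficient Models: Use distilled or smaller models for faster responses.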
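To make the first two points concrete, here is a toy, pure-Python illustration of what quantization and pruning do to a list of weights. It is only a sketch of the idea, not how a production model is optimized: the function names and the sample weights are made up for this example, and real speedups come from framework and hardware support for low-precision and sparse computation (libraries such as PyTorch ship their own utilities for both).

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] plus one shared scale factor."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Recover approximate float weights from the quantized integers."""
    return [q * scale for q in q_weights]


def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * fraction)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


# Illustrative weights only (assumed values, not taken from a real model).
weights = [0.50, -1.27, 0.03, 0.90, -0.02, 0.40]

q_weights, scale = quantize_int8(weights)   # small integers + one float scale
restored = dequantize(q_weights, scale)     # close to the originals
pruned = prune_by_magnitude(weights, 0.5)   # half of the weights become 0.0
```

Each quantized weight fits in one byte instead of four, at the cost of a rounding error of at most half the scale per weight. Pruning keeps the largest weights and zeroes the rest, which only saves time when the runtime can skip the zeros.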

By applying the techniques above, you can reduce output latency in Generative AI-based chatbots.
