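You can implement stochastic weight averaging to make generative model training more robust. Stochastic Weight Averaging (SWA) improves robustness by averaging the model weights collected at multiple checkpoints during training, typically in the later epochs once the model has roughly converged.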
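With default settings, the averaging is an equal-weight running mean: each new snapshot `w` is folded into the current average as `avg + (w - avg) / (n + 1)`, where `n` is the number of snapshots averaged so far. This is the default rule used by PyTorch's `AveragedModel`. The framework-free sketch below shows that update, using plain lists of floats as hypothetical stand-ins for weight tensors.

```python
def swa_running_average(snapshots):
    """Equal-weight running average of weight snapshots (lists of floats)."""
    avg = None
    n = 0  # number of snapshots averaged so far
    for w in snapshots:
        if avg is None:
            avg = list(w)  # the first snapshot is copied as-is
        else:
            # Incremental mean: avg_{n+1} = avg_n + (w - avg_n) / (n + 1)
            avg = [a + (x - a) / (n + 1) for a, x in zip(avg, w)]
        n += 1
    return avg


snapshots = [[1.0, 2.0], [3.0, 4.0], [5.0, 0.0]]
print(swa_running_average(snapshots))  # → [3.0, 2.0]
```

The incremental form gives the same result as the arithmetic mean of all snapshots, but it only needs to store one extra copy of the weights rather than every checkpoint.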

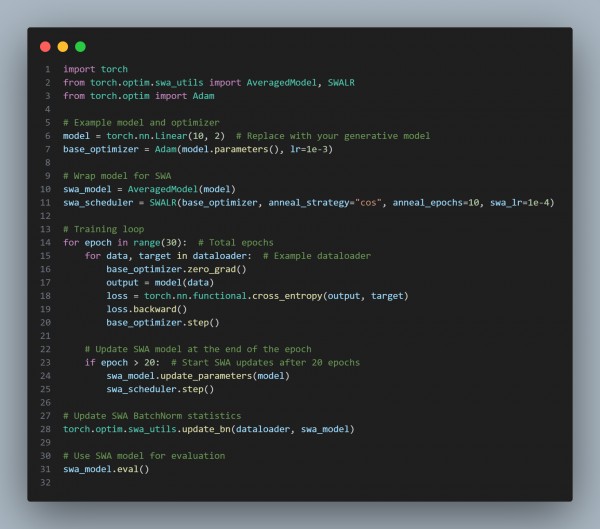

Here is the code snippet which you can refer to:

The code relies on the following key components from PyTorch's `torch.optim.swa_utils` module:

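- `AveragedModel`: Wraps the model and maintains a running average of its parameters (an equal-weight average by default). Calling `update_parameters(model)` folds the current weights into the average.

- `SWALR`: A learning-rate scheduler for the SWA phase. It anneals the learning rate of each parameter group to a fixed value (`swa_lr`) and then holds it constant while weights are being averaged.

- `update_bn`: Recomputes the batch normalization running mean and variance for the averaged model by making a forward pass over the training data. This is needed because the averaged weights never produced the statistics stored during training.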
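The sketch below shows one way these three utilities can fit together, assuming PyTorch is installed. The tiny autoencoder, random data, epoch counts, and learning rates are hypothetical placeholders for a real generative model and dataset; they are not taken from the original snippet.

```python
import torch
import torch.nn as nn
from torch.optim.swa_utils import AveragedModel, SWALR, update_bn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical stand-in for a generative model: a tiny autoencoder with BatchNorm.
model = nn.Sequential(
    nn.Linear(8, 16), nn.BatchNorm1d(16), nn.ReLU(),
    nn.Linear(16, 8),
)

# Hypothetical toy data: 256 random 8-dimensional samples, batches of 32.
data = torch.randn(256, 8)
loader = DataLoader(TensorDataset(data), batch_size=32, shuffle=True)

optimizer = torch.optim.SGD(model.parameters(), lr=0.05, momentum=0.9)
swa_model = AveragedModel(model)               # running average of the weights
swa_scheduler = SWALR(optimizer, swa_lr=0.01)  # LR schedule for the SWA phase
loss_fn = nn.MSELoss()

epochs, swa_start = 20, 10  # hypothetical: start averaging at epoch 10

for epoch in range(epochs):
    model.train()
    for (x,) in loader:
        optimizer.zero_grad()
        loss = loss_fn(model(x), x)  # reconstruction loss
        loss.backward()
        optimizer.step()
    if epoch >= swa_start:
        swa_model.update_parameters(model)  # fold current weights into the average
        swa_scheduler.step()                # anneal toward / hold swa_lr

# Recompute BatchNorm running statistics for the averaged weights.
update_bn(loader, swa_model)

swa_model.eval()
with torch.no_grad():
    recon_loss = loss_fn(swa_model(data), data).item()
print(f"averaged {int(swa_model.n_averaged)} snapshots, recon MSE = {recon_loss:.4f}")
```

Averaging starts only at `swa_start` so that early, poorly trained weights do not pull the average away from a good solution. `update_bn` is called once at the end, on the averaged model, rather than after every update.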

Hence, by combining these components as described above, you can implement stochastic weight averaging for more robust generative model training.
