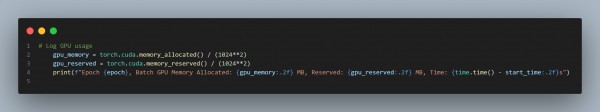

You can refer to the script shown here to profile GPU usage while training a generative model in PyTorch.

In the above script, we are using memory profiling, which calls torch.cuda.memory_allocated() and torch.cuda.memory_reserved() to monitor GPU memory usage; training monitoring, which logs GPU metrics for each batch; and scalability, meaning the same approach adapts to real-world datasets and larger models.

Hence, this script helps track GPU memory usage and time per batch during training.
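Since the script above is only available as an image, here is a minimal sketch of the same idea, not the original code. It assumes a toy generator and random data as stand-ins for a real generative model and dataset, and the names `gpu_memory_mb` and `profile_training` are hypothetical. It falls back to the CPU (reporting zero GPU memory) when CUDA is not available.

```python
import time

import torch
import torch.nn as nn


def gpu_memory_mb(device):
    """Return (allocated, reserved) GPU memory in MiB; zeros when not on CUDA."""
    if device.type != "cuda":
        return 0.0, 0.0
    allocated = torch.cuda.memory_allocated(device) / 2**20
    reserved = torch.cuda.memory_reserved(device) / 2**20
    return allocated, reserved


def profile_training(num_batches=3, batch_size=16, latent_dim=8, data_dim=32):
    """Run a few training steps and record memory use and time per batch."""
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # Assumption: a tiny MLP stands in for the real generative model.
    model = nn.Sequential(
        nn.Linear(latent_dim, 64),
        nn.ReLU(),
        nn.Linear(64, data_dim),
    ).to(device)
    optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
    loss_fn = nn.MSELoss()

    records = []
    for step in range(num_batches):
        # Assumption: random tensors stand in for a real DataLoader batch.
        noise = torch.randn(batch_size, latent_dim, device=device)
        target = torch.randn(batch_size, data_dim, device=device)

        # CUDA kernels run asynchronously, so synchronize before and after
        # the step to make the wall-clock measurement meaningful.
        if device.type == "cuda":
            torch.cuda.synchronize(device)
        start = time.perf_counter()

        optimizer.zero_grad()
        loss = loss_fn(model(noise), target)
        loss.backward()
        optimizer.step()

        if device.type == "cuda":
            torch.cuda.synchronize(device)
        elapsed = time.perf_counter() - start

        allocated, reserved = gpu_memory_mb(device)
        records.append(
            {
                "step": step,
                "loss": loss.item(),
                "seconds": elapsed,
                "allocated_mb": allocated,
                "reserved_mb": reserved,
            }
        )
        print(
            f"batch {step}: loss={loss.item():.4f} time={elapsed:.4f}s "
            f"allocated={allocated:.1f} MiB reserved={reserved:.1f} MiB"
        )
    return records


records = profile_training()
```

Here `memory_allocated()` reports the memory currently occupied by tensors, while `memory_reserved()` reports what PyTorch's caching allocator is holding, so the reserved figure is normally the larger of the two. To use this with a real model, replace the toy network and the random tensors with your own model and DataLoader batches.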
