To increase the interpretability of outputs in a deep learning-based generative model, consider the following methods:

- Latent Space Visualization: Use dimensionality reduction techniques like t-SNE or PCA to visualize the latent space and understand how different features affect generation.

- Feature Attribution: Implement techniques like Grad-CAM or Integrated Gradients to highlight which parts of the input influence the generated output.

- Disentangled Representations: Use models like Beta-VAE or InfoGAN to disentangle factors of variation in the latent space, making it easier to interpret each dimension.

- Conditional Generative Models: Use conditional GANs or VAEs to generate outputs based on interpretable conditions (e.g., class labels, attributes) to understand how each condition affects the output.
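As a rough illustration of the latent space visualization idea above, here is a minimal sketch that projects latent codes to 2-D with PCA, implemented through NumPy's SVD. The latent codes, the binary attribute `labels`, and the helper `pca_project` are all hypothetical stand-ins; in a real setup the codes would come from your model's encoder or from the noise vectors fed to the generator.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical latent codes: 200 samples, 16 dimensions. In practice these
# would come from your model's encoder (or the noise fed to the generator).
n_samples, latent_dim = 200, 16
labels = rng.integers(0, 2, size=n_samples)   # stand-in binary attribute
latents = rng.normal(size=(n_samples, latent_dim))
latents[:, 0] += 4.0 * labels                 # plant the attribute in dimension 0


def pca_project(z, n_components=2):
    """Project rows of z onto their top principal components via SVD."""
    centered = z - z.mean(axis=0)
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    explained = singular_values**2 / np.sum(singular_values**2)
    return centered @ vt[:n_components].T, explained[:n_components]


coords, explained_ratio = pca_project(latents)

# Compare the two attribute groups along the first principal component.
gap = abs(coords[labels == 1, 0].mean() - coords[labels == 0, 0].mean())
print("2-D coordinates shape:", coords.shape)
print("explained variance ratio:", np.round(explained_ratio, 3))
print("separation of the two groups along PC1:", round(float(gap), 2))
```

Plotting `coords` coloured by `labels` (for example with a scatter plot) would then show whether the attribute forms visible clusters. t-SNE can be swapped in for the projection step when the structure is non-linear.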

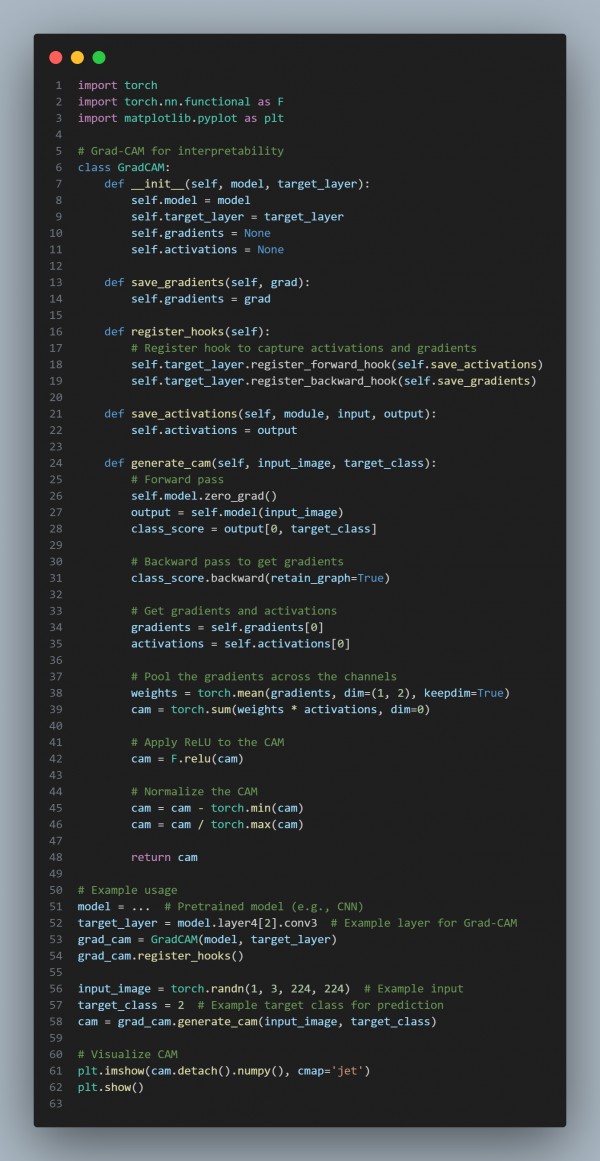

Here is the code snippet you can refer to:

The code above illustrates the following key points:

- Latent Space Visualization: Shows how different latent features correlate with generated outputs.

- Grad-CAM: Visualizes which parts of the input image influence the output, enhancing transparency.

- Disentangled Representations: Make individual latent space dimensions easier to interpret.

- Conditional Models: Allow explicit control over generated outputs, making them more interpretable.
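For the feature attribution point, Grad-CAM needs a convolutional network, so the sketch below uses Integrated Gradients instead (the other attribution method mentioned earlier) on a toy model whose gradient can be written by hand. The logistic "model", its `weights` and `bias`, and the all-zeros baseline are assumptions made purely for illustration; with a real network you would obtain gradients from your framework's autodiff.

```python
import numpy as np

# Hypothetical toy "model": a logistic unit scoring a 4-feature input.
weights = np.array([2.0, -1.0, 0.5, 0.0])
bias = -0.25


def model(x):
    """Sigmoid of a linear score; works on one input or a batch of inputs."""
    return 1.0 / (1.0 + np.exp(-(x @ weights + bias)))


def model_grad(batch):
    """Analytic gradient of model() w.r.t. each row of a (n, 4) batch."""
    s = model(batch)
    return (s * (1.0 - s))[:, None] * weights


def integrated_gradients(x, baseline, steps=200):
    """Approximate Integrated Gradients with a midpoint Riemann sum."""
    alphas = (np.arange(steps) + 0.5) / steps            # midpoints in (0, 1)
    path = baseline + alphas[:, None] * (x - baseline)   # (steps, n_features)
    avg_grad = model_grad(path).mean(axis=0)
    return (x - baseline) * avg_grad


x = np.array([1.0, 2.0, -1.0, 3.0])
baseline = np.zeros_like(x)          # assumed "no signal" reference input
attributions = integrated_gradients(x, baseline)

# Completeness check: attributions should sum to the change in model output.
output_change = model(x) - model(baseline)
print("attributions:", np.round(attributions, 4))
print("sum of attributions:", round(float(attributions.sum()), 4))
print("model(x) - model(baseline):", round(float(output_change), 4))
```

The last feature has zero weight, so it receives zero attribution, and the attributions sum (up to numerical error) to the difference between the model's output at `x` and at the baseline. That completeness property is a useful sanity check when applying the method to a real generator or encoder.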

Taken together, these methods can increase the interpretability of outputs in a deep learning-based generative model.
