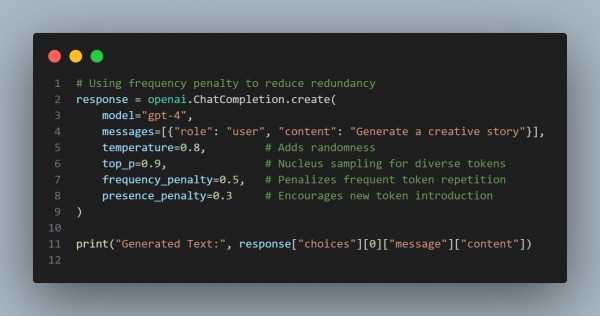

To address token redundancy in high-dimensional text generation, you can use n-gram blocking, frequency and presence penalties, and diversity-promoting sampling (e.g., nucleus sampling). Each of these reduces repetitive patterns in the generated output.
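As an illustration of nucleus (top-p) sampling, here is a minimal sketch in plain Python. The function names `top_p_filter` and `sample_top_p` and the default `top_p=0.9` are assumptions made for this example, not part of any particular library. The idea is to keep only the smallest set of highest-probability tokens whose cumulative probability reaches `top_p`, renormalize, and sample from that set.

```python
import random


def top_p_filter(probs, top_p=0.9):
    """Zero out tokens outside the nucleus and renormalize the rest.

    `probs` is a list of next-token probabilities that sums to 1.
    (Hypothetical helper, written only for illustration.)
    """
    # Visit token ids from most to least probable.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    kept, cumulative = [], 0.0
    for token_id in order:
        kept.append(token_id)
        cumulative += probs[token_id]
        if cumulative >= top_p:
            break  # the nucleus is complete

    total = sum(probs[i] for i in kept)
    filtered = [0.0] * len(probs)
    for token_id in kept:
        filtered[token_id] = probs[token_id] / total
    return filtered


def sample_top_p(probs, top_p=0.9, rng=random):
    """Draw one token id from the nucleus of `probs`."""
    filtered = top_p_filter(probs, top_p)
    return rng.choices(range(len(probs)), weights=filtered, k=1)[0]


if __name__ == "__main__":
    next_token_probs = [0.5, 0.3, 0.15, 0.05]
    print(top_p_filter(next_token_probs, top_p=0.7))
    print(sample_top_p(next_token_probs, top_p=0.7))
```

A lower `top_p` restricts sampling to fewer high-probability tokens, while a higher value admits more of the tail and usually yields more varied text.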

The code above uses a frequency penalty, which lowers the likelihood of a token in proportion to how often it has already appeared. The presence penalty instead applies a flat penalty to any token that has already been used in the same context, regardless of how many times. N-gram blocking explicitly prevents the model from generating an n-gram that already occurs in the output during decoding (common in seq2seq models).
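The following is a minimal sketch of how these three mechanisms can act on next-token logits at a single decoding step. The penalty formula follows the additive form documented for the OpenAI API (subtract `count * frequency_penalty` plus a one-time `presence_penalty`); the function names, the toy vocabulary of token ids, and the parameter values are assumptions made for this example.

```python
from collections import Counter


def apply_penalties(logits, generated_ids, frequency_penalty=0.0, presence_penalty=0.0):
    """Return a copy of `logits` with frequency and presence penalties applied.

    Frequency penalty scales with how often a token was already generated;
    presence penalty is subtracted once for any token that appeared at all.
    """
    counts = Counter(generated_ids)
    adjusted = list(logits)
    for token_id, count in counts.items():
        adjusted[token_id] -= frequency_penalty * count + presence_penalty
    return adjusted


def banned_next_tokens(generated_ids, n=3):
    """Return token ids that would complete an n-gram already in the output."""
    if len(generated_ids) < n:
        return set()  # no complete n-gram has been generated yet
    # The last n-1 tokens are the prefix of the n-gram about to be formed.
    prefix = tuple(generated_ids[len(generated_ids) - (n - 1):])
    banned = set()
    for start in range(len(generated_ids) - n + 1):
        if tuple(generated_ids[start:start + n - 1]) == prefix:
            banned.add(generated_ids[start + n - 1])
    return banned


def block_repeated_ngrams(logits, generated_ids, n=3):
    """Set the logits of banned tokens to -inf so they cannot be selected."""
    adjusted = list(logits)
    for token_id in banned_next_tokens(generated_ids, n):
        adjusted[token_id] = float("-inf")
    return adjusted


if __name__ == "__main__":
    history = [1, 2, 3, 1, 2]          # token ids generated so far
    logits = [0.2, 1.0, 1.5, 2.0]      # raw scores for a 4-token vocabulary
    logits = apply_penalties(logits, history, frequency_penalty=0.5, presence_penalty=0.1)
    logits = block_repeated_ngrams(logits, history, n=3)
    print(logits)
```

In practice you would rarely implement this by hand. For example, the Hugging Face `generate` method exposes `no_repeat_ngram_size`, and the OpenAI API exposes `frequency_penalty` and `presence_penalty` as request parameters.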

Hence, by combining these techniques, you can reduce token redundancy and improve the coherence and diversity of the generated text.
