Layer normalization in transformer architectures is implemented for stability in two steps:

- Normalize Activations: Compute the mean and variance across the feature dimension of each token, normalize the activations with them, then scale and shift the result.

- Apply Learnable Parameters: Use a learnable scale ($\gamma$) and shift ($\beta$).
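
Written out for a single token's feature vector $x \in \mathbb{R}^d$, the two steps are usually stated as below. The symbols $x$, $d$, $\mu$, $\sigma^2$ and the small stability constant $\epsilon$ are notation assumed here; only $\gamma$ and $\beta$ come from the list above.

$$
\mu = \frac{1}{d}\sum_{i=1}^{d} x_i, \qquad
\sigma^2 = \frac{1}{d}\sum_{i=1}^{d} (x_i - \mu)^2, \qquad
y_i = \gamma_i \, \frac{x_i - \mu}{\sqrt{\sigma^2 + \epsilon}} + \beta_i
$$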

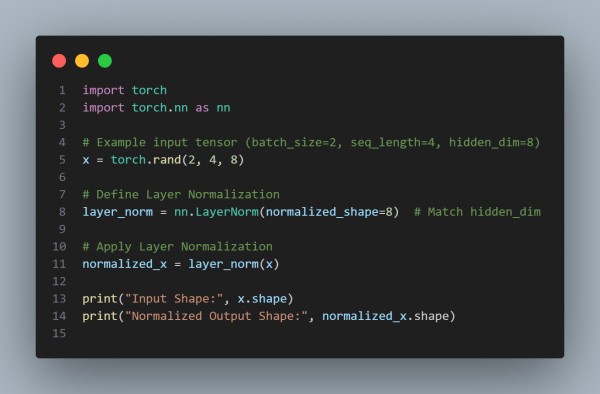

Here is the code snippet you can refer to:
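
What follows is a minimal, dependency-free sketch in plain Python rather than a definitive implementation. The function name `layer_norm`, the `eps` value of `1e-5`, and the toy `tokens` input are assumptions made for illustration. In practice you would more likely reach for a framework layer such as `torch.nn.LayerNorm`.

```python
import math

def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalize one token's feature vector, then scale and shift it."""
    d = len(x)
    mean = sum(x) / d
    # Biased (population) variance over the feature dimension
    var = sum((v - mean) ** 2 for v in x) / d
    inv_std = 1.0 / math.sqrt(var + eps)  # eps guards against division by zero
    return [g * (v - mean) * inv_std + b for v, g, b in zip(x, gamma, beta)]

# Two tokens with four features each; every token is normalized independently
tokens = [[1.0, 2.0, 3.0, 4.0], [10.0, 0.0, -10.0, 0.0]]
gamma = [1.0] * 4  # learnable scale, typically initialized to ones
beta = [0.0] * 4   # learnable shift, typically initialized to zeros
normalized = [layer_norm(t, gamma, beta) for t in tokens]
```

With `gamma` at ones and `beta` at zeros, each normalized token has a mean close to 0 and a variance close to 1, regardless of the scale of the input row.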

Layer normalization stabilizes training dynamics and speeds up convergence. In Transformers, it is applied after the self-attention and feedforward sub-layers.
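
The placement after each sub-layer can be sketched as follows. This assumes the post-norm arrangement with a residual connection, `LN(x + f(x))`, which the text above does not spell out. `post_ln_sublayer` and `toy_feedforward` are hypothetical names, and the block repeats a compact normalization helper only so that it runs on its own.

```python
import math

def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalize a feature vector, then apply scale (gamma) and shift (beta)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [g * (v - mean) / math.sqrt(var + eps) + b
            for v, g, b in zip(x, gamma, beta)]

def post_ln_sublayer(x, sublayer, gamma, beta):
    """Apply a sub-layer, add the residual, then layer-normalize the sum."""
    summed = [a + b for a, b in zip(x, sublayer(x))]
    return layer_norm(summed, gamma, beta)

def toy_feedforward(x):
    # Hypothetical stand-in for a real attention or feedforward sub-layer
    return [max(0.0, 2.0 * v - 1.0) for v in x]

x = [0.5, -1.0, 2.0, 4.0]
out = post_ln_sublayer(x, toy_feedforward, gamma=[1.0] * 4, beta=[0.0] * 4)
```

The same wrapper would be used once around self-attention and once around the feedforward network in each Transformer block, each with its own `gamma` and `beta`.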

Hence, by normalizing activations across features and applying the learnable scale and shift, you can implement layer normalization in transformer architectures for stability.
