To improve multi-turn dialogue coherence in transformer-based conversational AI, you can use the techniques below:

- Context Window Expansion: It concatenates previous turns into the model input so that earlier context stays visible.

- Memory Networks: It uses a memory mechanism to store and retrieve relevant dialogue history.

- Fine-Tuning on Dialogue Data: It trains the model on high-quality multi-turn dialogue datasets.

- Persona Integration: It incorporates user and bot personas for consistency.

- Reinforcement Learning from Human Feedback (RLHF): It fine-tunes responses using human feedback or reward models.
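As a rough illustration of context window expansion combined with a persona line, here is a minimal sketch in plain Python. The function names (`count_tokens`, `build_prompt`), the `Persona:` / `User:` / `Bot:` prompt layout, and the whitespace-based token count are all assumptions made for this example. A real system would use the model's own tokenizer and prompt format.

```python
from typing import List, Tuple

Turn = Tuple[str, str]  # (speaker, text)


def count_tokens(text: str) -> int:
    # Assumption: a whitespace word count stands in for a real tokenizer.
    return len(text.split())


def build_prompt(history: List[Turn], user_msg: str,
                 persona: str = "", max_tokens: int = 50) -> str:
    """Concatenate the most recent turns that fit into a token budget."""
    header = f"Persona: {persona}\n" if persona else ""
    tail = f"User: {user_msg}\nBot:"
    budget = max_tokens - count_tokens(header) - count_tokens(tail)

    kept: List[str] = []
    # Walk backwards so the newest turns are kept first.
    for speaker, text in reversed(history):
        line = f"{speaker}: {text}"
        cost = count_tokens(line)
        if cost > budget:
            break  # older turns no longer fit
        kept.append(line)
        budget -= cost

    kept.reverse()  # restore chronological order
    body = "".join(line + "\n" for line in kept)
    return header + body + tail


history = [
    ("User", "Hi there"),
    ("Bot", "Hello, how can I help?"),
    ("User", "I need a laptop"),
    ("Bot", "What is your budget?"),
]
prompt = build_prompt(history, "Around 1000 dollars",
                      persona="friendly sales assistant", max_tokens=20)
print(prompt)
```

With a small budget, only the most recent turns survive. Raising `max_tokens` lets more history through, at the cost of a longer input.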

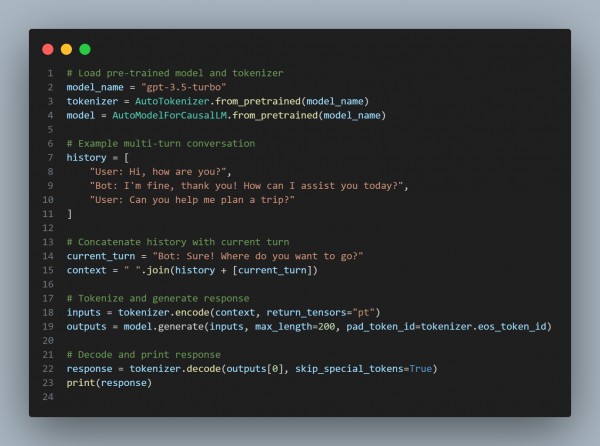

Here is the code snippet you can refer to:

The code above uses expanded context to incorporate dialogue history into the inputs, fine-tuning on domain-specific multi-turn data to boost relevance, and RLHF to optimize for logical, human-like responses.
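For the fine-tuning step, one common preparation is to split each dialogue into (context, response) training examples so the model learns to answer with the history in view. The sketch below is only an illustration of that idea. The helper name `make_training_pairs`, the `User:` / `Bot:` labels, and the assumption that turns strictly alternate with the user speaking first are all hypothetical choices, not a fixed dataset format.

```python
from typing import Dict, List


def make_training_pairs(dialogue: List[str],
                        max_context_turns: int = 3) -> List[Dict[str, str]]:
    """Build one (context, response) example per bot turn.

    Assumes utterances alternate user, bot, user, bot, ... starting with the user.
    """
    pairs: List[Dict[str, str]] = []
    for i in range(1, len(dialogue), 2):  # bot turns sit at odd indices
        start = max(0, i - max_context_turns)
        context_lines = [
            f"{'User' if j % 2 == 0 else 'Bot'}: {dialogue[j]}"
            for j in range(start, i)
        ]
        pairs.append({
            "context": "\n".join(context_lines),
            "response": dialogue[i],
        })
    return pairs


dialogue = ["Hi", "Hello!", "Book a table", "For how many?"]
for example in make_training_pairs(dialogue, max_context_turns=2):
    print(example)
```

Each resulting dictionary can then be tokenized and fed to whichever fine-tuning pipeline you use. `max_context_turns` controls how much history each example carries.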

Used together, these techniques can improve multi-turn dialogue coherence in transformer-based conversational AI.
