You can tune the LoRA rank in QLoRA for a large language model by sweeping over different `r` values in `LoraConfig` (from the Hugging Face PEFT library) and comparing each resulting adapter's performance on a validation set.
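For context, `r` is the rank of the low-rank update that LoRA learns for each adapted weight matrix. In the original LoRA formulation, a frozen weight $W_0 \in \mathbb{R}^{d \times k}$ is augmented with a trainable product $BA$, and the rank sets both the update's expressiveness and the number of trainable parameters per adapted matrix:

$$
W = W_0 + \frac{\alpha}{r} B A, \qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)
$$

$$
\text{trainable parameters per adapted matrix} = r\,(d + k)
$$

In QLoRA, $W_0$ is stored in 4-bit precision and kept frozen, so doubling `r` doubles the adapter's trainable parameters (and their optimizer state) while the quantized base weights stay the same. This linear growth is the compute-cost side of the rank trade-off.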

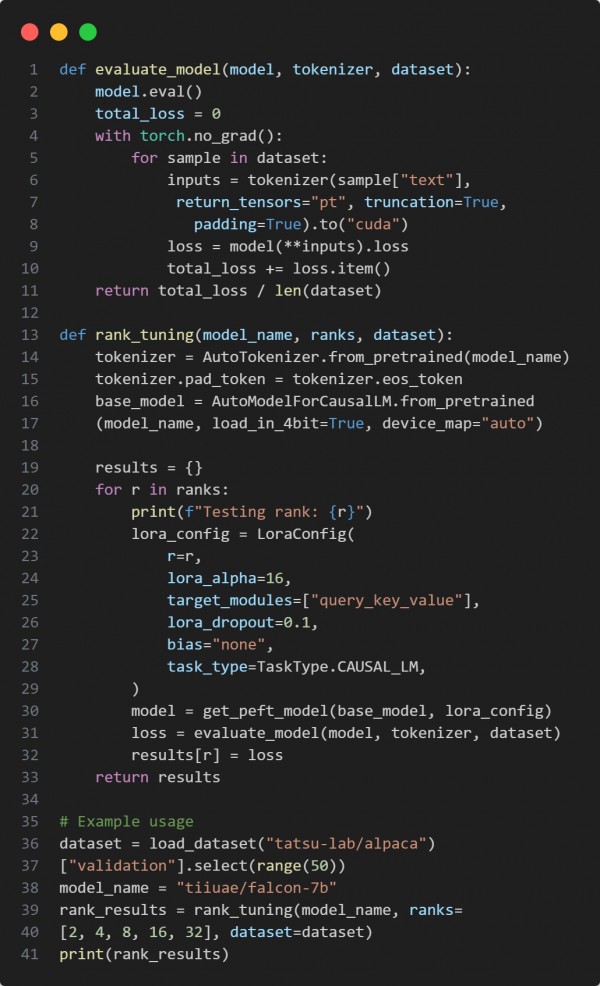

Here is the code snippet you can refer to:

In the above code, we use the following key strategies:

- Dynamically testing multiple ranks (`r`) to optimize parameter efficiency.
- Evaluating validation loss to compare performance across ranks.
- Tuning the trade-off between compute cost and accuracy.
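The sweep-and-select loop these strategies describe can be sketched as follows. This is a minimal, framework-agnostic sketch under stated assumptions, not the code in the screenshot: `sweep_ranks`, `pick_rank`, `lora_param_count`, and the `train_and_eval` callback are hypothetical names. In a real run, `train_and_eval(r)` would be assumed to build a `LoraConfig(r=r, ...)`, wrap the 4-bit base model with PEFT's `get_peft_model`, fine-tune, and return the validation loss. The toy loss function below is a placeholder, not a measured result.

```python
from typing import Callable, Dict, Iterable


def lora_param_count(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in linear layer.

    A is r x d_in and B is d_out x r, so the adapter holds r * (d_in + d_out) weights.
    """
    return r * (d_in + d_out)


def sweep_ranks(ranks: Iterable[int], train_and_eval: Callable[[int], float]) -> Dict[int, float]:
    """Fine-tune one adapter per rank and record its validation loss.

    `train_and_eval` is a hypothetical callback: given a rank r, it is assumed to
    build a LoraConfig(r=r, ...), attach it to the 4-bit base model, train, and
    return the validation loss.
    """
    results: Dict[int, float] = {}
    for r in ranks:
        results[r] = train_and_eval(r)
    return results


def pick_rank(results: Dict[int, float], tolerance: float = 0.01) -> int:
    """Return the smallest rank whose validation loss is within `tolerance`
    (absolute) of the best loss, trading a little accuracy for fewer parameters."""
    best = min(results.values())
    return min(r for r, loss in results.items() if loss <= best + tolerance)


if __name__ == "__main__":
    # Hypothetical stand-in for a real training run (not a measured result):
    # loss shrinks as rank grows, with diminishing returns.
    def toy_train_and_eval(r: int) -> float:
        return 1.0 + 1.0 / r

    results = sweep_ranks([4, 8, 16, 32, 64], toy_train_and_eval)
    for r, loss in sorted(results.items()):
        # Parameter count for a single 4096 x 4096 projection, chosen only as an example size.
        print(f"r={r:3d}  val_loss={loss:.4f}  adapter_params={lora_param_count(4096, 4096, r):,}")
    print("chosen rank:", pick_rank(results, tolerance=0.05))
```

With the toy function and `tolerance=0.05`, the sweep selects `r=16`: larger ranks lower the loss only marginally while each doubling of `r` doubles the adapter size. Choosing the smallest rank within a tolerance of the best loss is one simple selection rule; you could instead weigh loss against parameter count or training time explicitly.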

Hence, rank tuning in QLoRA lets you balance adaptation capacity (a higher `r` means more trainable parameters) against resource usage, so you can tailor large-model fine-tuning to different deployment needs.
