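An attention mechanism can use different layer sizes by giving the Query, Key, and Value projection layers different output dimensions. The Query and Key projections must share one dimension so that their dot product is defined, while the Value projection and the final output projection can each use a size of their own.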
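As a rough sketch of the shapes involved, the computation can be written as follows. The symbols $d_{in}$, $d_k$, $d_v$, and $d_{out}$ are names assumed here for the input, Query/Key, Value, and output sizes; they do not come from the original answer.

$$
Q = X W_Q,\quad K = X W_K,\quad V = X W_V,\qquad W_Q, W_K \in \mathbb{R}^{d_{in} \times d_k},\; W_V \in \mathbb{R}^{d_{in} \times d_v}
$$

$$
\text{Output} = \operatorname{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V \, W_O,\qquad W_O \in \mathbb{R}^{d_v \times d_{out}}
$$

Only $d_k$ has to match between $Q$ and $K$; $d_v$ and $d_{out}$ can be chosen freely.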

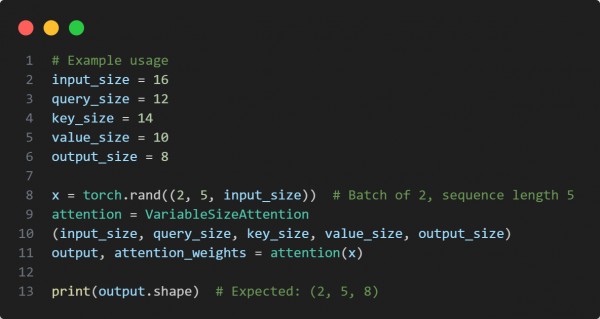

Here is the code snippet you can refer to:
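A minimal sketch of such a module, assuming PyTorch and single-head self-attention. The class name `FlexibleAttention`, its parameter names, and the example sizes are hypothetical and chosen only for illustration; bias terms and masking are left at their defaults or omitted.

```python
import math

import torch
import torch.nn as nn


class FlexibleAttention(nn.Module):
    """Single-head self-attention with independently sized projections (illustrative)."""

    def __init__(self, input_dim, qk_dim, value_dim, output_dim):
        super().__init__()
        # Query and Key share qk_dim so their dot product is defined.
        self.query = nn.Linear(input_dim, qk_dim)
        self.key = nn.Linear(input_dim, qk_dim)
        # Value may use a different size from Query/Key.
        self.value = nn.Linear(input_dim, value_dim)
        # Final projection maps the context vector to the desired output size.
        self.out = nn.Linear(value_dim, output_dim)
        self.scale = math.sqrt(qk_dim)

    def forward(self, x):
        # x: (batch, seq_len, input_dim)
        q = self.query(x)  # (batch, seq_len, qk_dim)
        k = self.key(x)    # (batch, seq_len, qk_dim)
        v = self.value(x)  # (batch, seq_len, value_dim)

        # Scaled dot-product scores: (batch, seq_len, seq_len)
        scores = torch.matmul(q, k.transpose(-2, -1)) / self.scale
        # Softmax over the key axis so each query's weights sum to 1.
        weights = torch.softmax(scores, dim=-1)

        context = torch.matmul(weights, v)  # (batch, seq_len, value_dim)
        return self.out(context), weights   # (batch, seq_len, output_dim)


torch.manual_seed(0)
attn = FlexibleAttention(input_dim=32, qk_dim=16, value_dim=24, output_dim=8)
x = torch.randn(2, 5, 32)
output, weights = attn(x)
print(output.shape)   # torch.Size([2, 5, 8])
print(weights.shape)  # torch.Size([2, 5, 5])
```

The four sizes are independent constructor arguments, so changing one of them does not require touching the rest of the module.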

The code uses the following techniques:

- Implements an attention mechanism in which the Query/Key size and the Value size are set independently.

- Uses nn.Linear layers to project the input into Query, Key, and Value tensors of the chosen sizes.

- Applies scaled dot-product attention: the Query-Key scores are divided by the square root of the Key dimension and normalized with softmax.

- Uses an additional nn.Linear layer to project the context vector to the desired output size.

- Keeps every size configurable, so the attention design can be adjusted without changing the rest of the model.

Hence, using different layer sizes in an attention mechanism gives you more architectural flexibility, since each projection dimension can be tuned to the needs of a specific task.
