Output distortion when generating technical articles with GPT-2 can be reduced by fine-tuning the model on domain-specific data, controlling decoding with parameters such as temperature and top-k sampling, and keeping the prompt clear and specific.

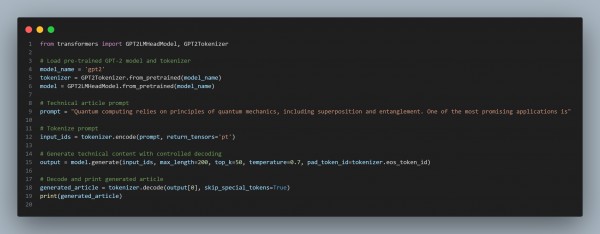

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Uses a well-structured technical prompt to guide coherent generation.

- Applies top-k sampling and temperature control to balance creativity and accuracy.

- Ensures clean output by skipping special tokens and limiting output length.
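
The points above can be sketched roughly as follows with the Hugging Face `transformers` library. This is a minimal sketch, not the original snippet: the prompt text, the helper names `build_generation_kwargs` and `generate_technical_text`, and the specific values (`temperature=0.7`, `top_k=50`, `max_new_tokens=150`) are assumptions chosen for illustration and would need tuning for a real use case.

```python
# Minimal sketch: controlled decoding with GPT-2 via Hugging Face transformers.
# The prompt and all parameter values below are illustrative assumptions.

# A structured, specific prompt (hypothetical example) to steer the model.
PROMPT = (
    "Technical article: How load balancers distribute traffic.\n"
    "Introduction: A load balancer is"
)


def build_generation_kwargs(max_new_tokens=150, temperature=0.7, top_k=50):
    """Collect the decoding settings in one place so they are easy to tune."""
    return {
        "do_sample": True,                 # sample instead of greedy decoding
        "temperature": temperature,        # < 1.0 sharpens the distribution
        "top_k": top_k,                    # keep only the k most likely tokens
        "max_new_tokens": max_new_tokens,  # cap the length of the continuation
    }


def generate_technical_text(prompt=PROMPT, model_name="gpt2", **overrides):
    """Generate a continuation of `prompt` and return it as clean text."""
    # Imported here so the settings helper works without transformers installed.
    from transformers import GPT2LMHeadModel, GPT2Tokenizer

    tokenizer = GPT2Tokenizer.from_pretrained(model_name)
    model = GPT2LMHeadModel.from_pretrained(model_name)
    model.eval()

    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no dedicated pad token
        **build_generation_kwargs(**overrides),
    )
    # skip_special_tokens drops markers such as <|endoftext|> from the text.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate_technical_text()` downloads the `gpt2` checkpoint on first use. Passing `model_name` as the path to a checkpoint fine-tuned on domain data would cover the fine-tuning part of the advice, assuming such a checkpoint exists.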

Hence, combining domain-specific prompts, controlled decoding, and careful model configuration reduces output distortion, improving the clarity and accuracy of technical article generation.
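
To make the "controlled decoding" part more concrete, the sketch below shows what temperature and top-k do to a next-token distribution, using only the Python standard library. It is a simplified illustration, not how any particular library implements it; the function names and the toy logits are hypothetical.

```python
import math
import random


def top_k_temperature_probs(logits, k, temperature):
    """Return {token_id: probability} after temperature scaling and top-k filtering."""
    if k < 1 or temperature <= 0:
        raise ValueError("k must be >= 1 and temperature must be > 0")
    # Dividing by a temperature below 1.0 widens the gaps between logits,
    # so likely tokens become even more likely.
    scaled = [x / temperature for x in logits]
    # Keep only the indices of the k largest scaled logits.
    keep = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)[:k]
    # Softmax over the surviving tokens (subtract the max for numerical stability).
    peak = max(scaled[i] for i in keep)
    weights = {i: math.exp(scaled[i] - peak) for i in keep}
    total = sum(weights.values())
    return {i: w / total for i, w in weights.items()}


def sample_token(logits, k, temperature, rng=random):
    """Draw one token id from the filtered distribution."""
    probs = top_k_temperature_probs(logits, k, temperature)
    ids = list(probs)
    return rng.choices(ids, weights=[probs[i] for i in ids], k=1)[0]


# Toy logits for a 4-token vocabulary (hypothetical values).
toy_logits = [2.0, 1.0, 0.5, -1.0]
filtered = top_k_temperature_probs(toy_logits, k=2, temperature=0.7)
next_token = sample_token(toy_logits, k=2, temperature=0.7)
```

With `k=2`, tokens 2 and 3 can never be sampled, which is how top-k cuts off the low-probability tail that tends to produce off-topic or garbled continuations.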

