Lack of coherence in generated dialogue can be addressed by fine-tuning on high-quality conversational data, keeping the most recent turns in a sliding context window, decoding with nucleus (top-p) sampling, and structuring prompts consistently so the model always sees the same roles and format.

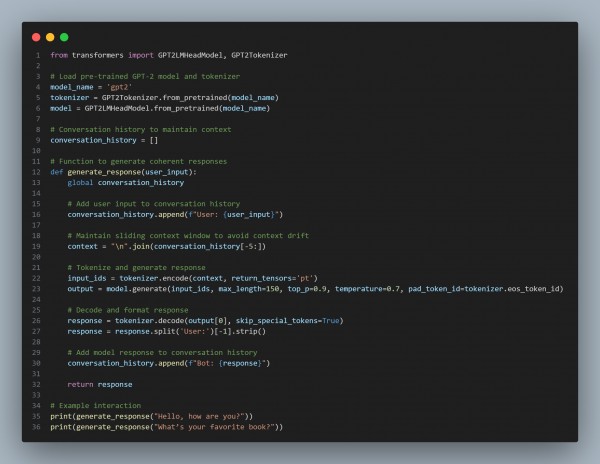

Here is the code snippet you can refer to:
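A minimal sketch in Python of how this could look, assuming a plain-text `Speaker: text` prompt format. The names `DialogueContext`, `max_turns`, `build_prompt`, and `GENERATION_KWARGS` are illustrative and not part of any library. The decoding values are placeholder assumptions, and the keys mirror the sampling arguments accepted by Hugging Face's `generate` method.

```python
from dataclasses import dataclass, field


@dataclass
class DialogueContext:
    """Keeps conversation history and exposes only the most recent turns."""

    system_prompt: str
    max_turns: int = 6  # assumed window size; tune for your model's context limit
    history: list[tuple[str, str]] = field(default_factory=list)

    def add_turn(self, speaker: str, text: str) -> None:
        # Store every turn; the window is applied only when building the prompt.
        self.history.append((speaker, text.strip()))

    def build_prompt(self, user_message: str) -> str:
        # Record the new user message, then keep only the last `max_turns` turns.
        self.add_turn("User", user_message)
        window = self.history[-self.max_turns:]
        lines = [self.system_prompt]
        lines += [f"{speaker}: {text}" for speaker, text in window]
        lines.append("Assistant:")  # cue the model to answer as the assistant
        return "\n".join(lines)


# Assumed decoding settings (illustrative values, not tuned recommendations).
GENERATION_KWARGS = {
    "do_sample": True,      # enable sampling instead of greedy decoding
    "top_p": 0.9,           # nucleus sampling threshold
    "temperature": 0.7,     # below 1.0 makes the distribution sharper
    "max_new_tokens": 128,
}
```

A typical loop would call `build_prompt(user_message)`, pass the result to the model together with `GENERATION_KWARGS`, and then store the reply with `add_turn("Assistant", reply)` so the next prompt includes it.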

The code above relies on the following key points:

- Maintains conversation history to provide consistent context.

- Uses a sliding context window to prevent coherence loss over long dialogues.

- Applies top-p (nucleus) sampling and temperature control for balanced and fluid responses.
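To make the last point concrete, here is a hedged, standard-library-only sketch of what temperature scaling followed by top-p filtering does to a list of logits. The function name `top_p_sample` and its default values are assumptions for illustration. In practice a generation library performs this step internally, so this is only meant to show the mechanism.

```python
import math
import random


def top_p_sample(logits, top_p=0.9, temperature=0.7, rng=None):
    """Sample a token index from `logits` using temperature and top-p filtering."""
    if temperature <= 0 or not 0 < top_p <= 1:
        raise ValueError("temperature must be > 0 and top_p in (0, 1]")
    rng = rng or random.Random()

    # Temperature-scaled softmax (subtract the max for numerical stability).
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Keep the smallest set of most-likely tokens whose mass reaches top_p.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    nucleus, mass = [], 0.0
    for i in ranked:
        nucleus.append(i)
        mass += probs[i]
        if mass >= top_p:
            break

    # Sample within the nucleus; random.choices renormalises the weights.
    return rng.choices(nucleus, weights=[probs[i] for i in nucleus], k=1)[0]
```

Lowering `top_p` shrinks the candidate set and makes replies more predictable, while raising `temperature` flattens the distribution and makes them more varied. Coherent dialogue usually needs a balance between the two.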

Hence, by managing context history, tuning decoding strategies, and structuring prompts effectively, we enhance the coherence and flow of model-generated dialogue.
