Output fluency in text summarization can be improved by fine-tuning on high-quality, well-structured datasets, decoding with beam search or top-k sampling, and applying length or repetition penalties.

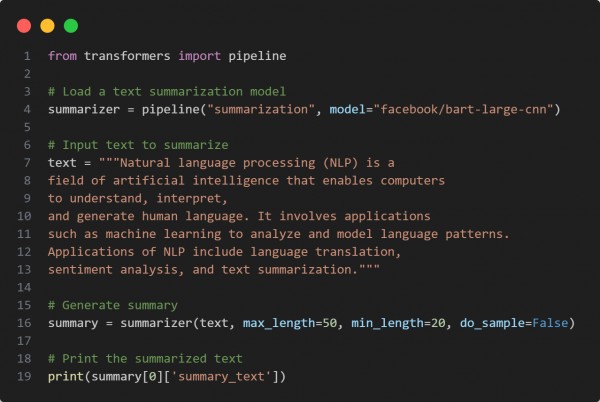

Here is the code snippet you can refer to:

The code above relies on the following key points:

- Uses the BART model fine-tuned for summarization, known for fluent text generation.

- Applies beam search to improve fluency and coherence of the output.

- Uses a length penalty to discourage overly short summaries, and early stopping to end beam search once enough complete candidates have been found.
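For readers who cannot view the image, the three points above can be sketched in text form with the Hugging Face `transformers` library. This is only a minimal sketch, not necessarily the original snippet: the checkpoint name `facebook/bart-large-cnn`, the helper names `build_generation_kwargs` and `summarize`, and all parameter values are assumptions chosen for illustration.

```python
def build_generation_kwargs(num_beams=4, length_penalty=2.0,
                            max_length=130, min_length=30):
    """Return decoding settings for beam-search summarization.

    All values are illustrative assumptions, not tuned recommendations.
    """
    return {
        "num_beams": num_beams,            # beam search instead of greedy decoding
        "length_penalty": length_penalty,  # > 0 favors longer summaries
        "early_stopping": True,            # stop once enough beams have finished
        "no_repeat_ngram_size": 3,         # block repeated trigrams
        "max_length": max_length,
        "min_length": min_length,
    }


def summarize(text, model_name="facebook/bart-large-cnn"):
    """Summarize `text` with a BART checkpoint (assumed name, see above)."""
    # Imported here so the settings helper can be used without loading the library.
    from transformers import BartForConditionalGeneration, BartTokenizer

    tokenizer = BartTokenizer.from_pretrained(model_name)
    model = BartForConditionalGeneration.from_pretrained(model_name)

    # Truncate long inputs to the model's 1024-token limit.
    inputs = tokenizer(text, return_tensors="pt", truncation=True, max_length=1024)
    summary_ids = model.generate(
        inputs["input_ids"],
        attention_mask=inputs["attention_mask"],
        **build_generation_kwargs(),
    )
    return tokenizer.decode(summary_ids[0], skip_special_tokens=True)
```

Calling `summarize(article_text)` downloads the checkpoint on first use, so it needs network access and a PyTorch installation. Repetition is handled here with `no_repeat_ngram_size`, which is an addition on my part; `repetition_penalty` is another option that `generate` accepts.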

Hence, combining a strong pretrained model, a suitable decoding strategy, and careful parameter tuning improves the fluency and readability of automatically generated summaries.
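To see why parameter tuning matters, it can help to look at how a length penalty changes which beam wins. The sketch below assumes the common length-normalized score, where the summed log-probability is divided by the sequence length raised to `length_penalty`; the two hypotheses and their numbers are made up for illustration.

```python
def beam_score(sum_logprobs, length, length_penalty):
    """Length-normalized beam score: higher (closer to zero) is better."""
    return sum_logprobs / (length ** length_penalty)


def best_hypothesis(hypotheses, length_penalty):
    """Return the name of the highest-scoring (name, sum_logprobs, length) tuple."""
    best = max(hypotheses, key=lambda h: beam_score(h[1], h[2], length_penalty))
    return best[0]


# Hypothetical candidates: (name, summed log-probability, token count).
hypotheses = [("short", -4.0, 5), ("long", -6.0, 10)]

# With no normalization the shorter candidate wins, because it has
# accumulated fewer negative log-probabilities.
winner_without_penalty = best_hypothesis(hypotheses, length_penalty=0.0)

# With length_penalty=1.0 the scores become -0.8 and -0.6, so the
# longer candidate now ranks first.
winner_with_penalty = best_hypothesis(hypotheses, length_penalty=1.0)
```

Because log-probabilities are negative, a larger `length_penalty` shrinks the score of long sequences toward zero faster, which is why positive values push the decoder toward longer summaries.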
