You can handle overfitting in Decision Trees by setting max_depth to limit how deep the tree can grow and min_samples_split to set the minimum number of samples a node needs before it can be split.

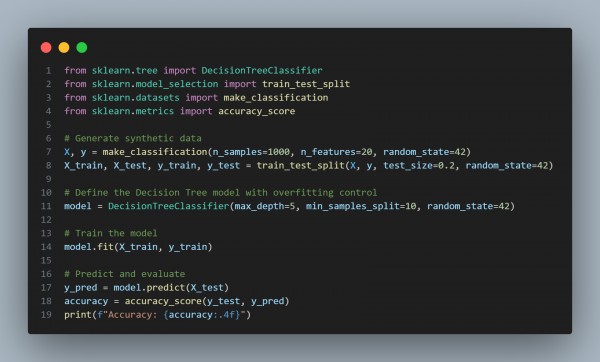

Here is the code snippet you can refer to:
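
A minimal sketch of such a snippet, assuming scikit-learn's DecisionTreeClassifier. The Iris dataset and the 80/20 train/test split are assumptions made for illustration; the parameter values are the ones discussed in this answer.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Load a small example dataset (assumed here; any labeled dataset works)
X, y = load_iris(return_X_y=True)

# Hold out 20% of the data for testing (the split ratio is an assumption)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Constrain the tree to reduce overfitting
clf = DecisionTreeClassifier(max_depth=5, min_samples_split=10, random_state=42)
clf.fit(X_train, y_train)

# Evaluate on the held-out test data
y_pred = clf.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Test accuracy: {accuracy:.2f}")
```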

The code uses the following key settings:

- max_depth=5 limits the depth of the tree, reducing complexity and overfitting risk.

- min_samples_split=10 requires a node to contain at least 10 samples before it can be split, which keeps the tree from fitting noise in small data subsets.

- random_state=42 fixes the random seed so that results are reproducible.

- accuracy_score() measures the fraction of correct predictions on the held-out test data.

Hence, controlling max_depth and min_samples_split balances model complexity and generalization, effectively reducing the risk of overfitting in Decision Trees.
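
To check whether these constraints actually help on your data, one option is to compare training and test accuracy for an unconstrained tree and a constrained one. The sketch here assumes scikit-learn and uses a synthetic, noisy dataset from make_classification; the dataset and its settings are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data with 10% label noise (assumed settings, for illustration only)
X, y = make_classification(
    n_samples=500, n_features=20, n_informative=5, flip_y=0.1, random_state=42
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

models = {
    "unconstrained": DecisionTreeClassifier(random_state=42),
    "constrained": DecisionTreeClassifier(
        max_depth=5, min_samples_split=10, random_state=42
    ),
}

results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    train_acc = model.score(X_train, y_train)  # accuracy on data the tree has seen
    test_acc = model.score(X_test, y_test)     # accuracy on held-out data
    results[name] = (model.get_depth(), train_acc, test_acc)
    print(f"{name}: depth={model.get_depth()}, train={train_acc:.2f}, test={test_acc:.2f}")
```

A large gap between training and test accuracy suggests overfitting. If the constrained tree shows a smaller gap, the limits are helping; the exact numbers depend on the data.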
