The standard way to implement early stopping in Keras is the `EarlyStopping` callback, which monitors a metric such as validation loss (`val_loss`) and halts training once that metric stops improving, which helps prevent overfitting.

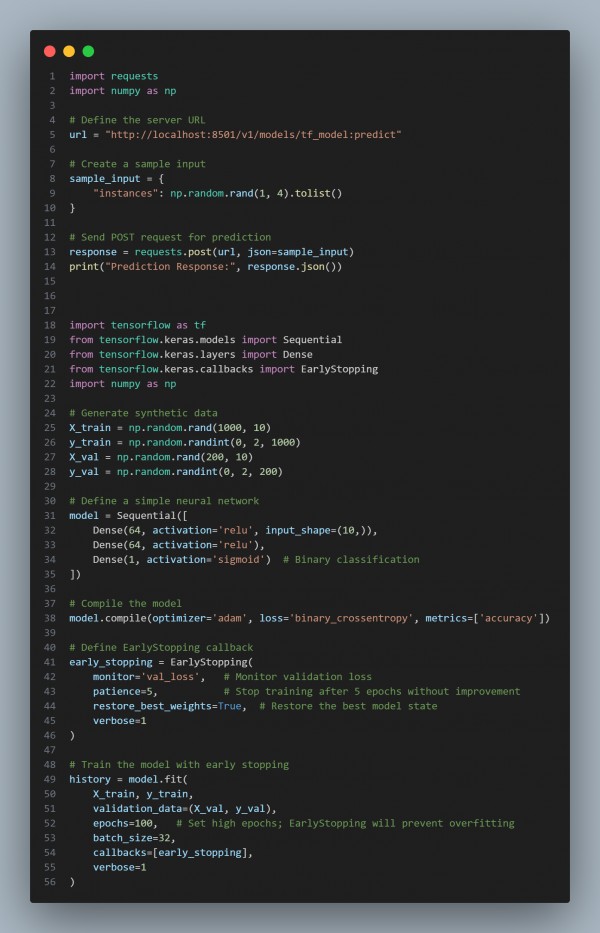

Here is the code snippet you can refer to:
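
A minimal sketch of such a setup, assuming TensorFlow's bundled Keras (`tensorflow.keras`). The data shapes, layer sizes, batch size, and the 100-epoch cap are illustrative assumptions; only `monitor="val_loss"`, `patience=5`, and `restore_best_weights=True` come from the description in this answer.

```python
import numpy as np
from tensorflow import keras

# Synthetic data for demonstration only: 20 random features, random binary labels.
rng = np.random.default_rng(42)
X_train = rng.random((1000, 20)).astype("float32")
y_train = rng.integers(0, 2, size=(1000,)).astype("float32")
X_val = rng.random((200, 20)).astype("float32")
y_val = rng.integers(0, 2, size=(200,)).astype("float32")

# Simple fully connected network for binary classification (sizes are assumptions).
model = keras.Sequential([
    keras.Input(shape=(20,)),
    keras.layers.Dense(64, activation="relu"),
    keras.layers.Dense(32, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Stop when val_loss has not improved for 5 consecutive epochs,
# then roll the model back to the weights from the best epoch.
early_stopping = keras.callbacks.EarlyStopping(
    monitor="val_loss",
    patience=5,
    restore_best_weights=True,
)

history = model.fit(
    X_train, y_train,
    validation_data=(X_val, y_val),
    epochs=100,            # upper bound; early stopping usually ends training sooner
    batch_size=32,
    callbacks=[early_stopping],
    verbose=0,
)

epochs_run = len(history.history["val_loss"])
print(f"Training ran for {epochs_run} epochs")
```

Because the labels here are random, validation loss tends to plateau quickly, so training will usually end well before the 100-epoch cap. On real data, `patience` is worth tuning: too small a value can stop training during a temporary plateau.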

The above code uses the following key approaches:

- Synthetic Data Generation:
  - Creates random training and validation data for demonstration.
- Neural Network Model:
  - A simple fully connected network for binary classification.
- EarlyStopping Callback Implementation:
  - Monitors validation loss (val_loss).
  - Patience = 5: Stops training if no improvement in 5 consecutive epochs.
  - Restores best weights: Ensures the best model is retained.
- Prevention of Overfitting:
  - Training stops automatically once the model stops improving, avoiding unnecessary computation.
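
To make the patience rule in the list above concrete, here is a plain-Python illustration of the idea. It is a simplified sketch, not Keras's actual implementation; the function name `early_stop_epoch` and the loss values are hypothetical.

```python
def early_stop_epoch(val_losses, patience=5):
    """Return (stop_epoch, best_epoch), both 0-based.

    stop_epoch is None if the patience limit is never reached.
    """
    best_loss = float("inf")
    best_epoch = None
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # New best: remember it and reset the patience counter.
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return None, best_epoch


# Hypothetical validation losses: the best value (0.60) occurs at epoch 3.
losses = [0.70, 0.65, 0.61, 0.60, 0.62, 0.63, 0.61, 0.64, 0.66, 0.65, 0.67]
stop_epoch, best_epoch = early_stop_epoch(losses, patience=5)
print(stop_epoch, best_epoch)  # 8 3
```

Training stops at epoch 8, five epochs after the best result at epoch 3. Restoring the best weights corresponds to keeping the model as it was at `best_epoch` rather than at `stop_epoch`.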

Hence, EarlyStopping in Keras helps prevent overfitting by stopping training when validation loss ceases to improve, which tends to improve generalization and saves computation time.
