To deploy a trained deep learning model with TensorFlow Serving, export the model in SavedModel format, run TensorFlow Serving as a REST or gRPC server, and send inference requests via an API client.

Here are the steps you can follow:

- 1. Train & Save a Model in SavedModel Format

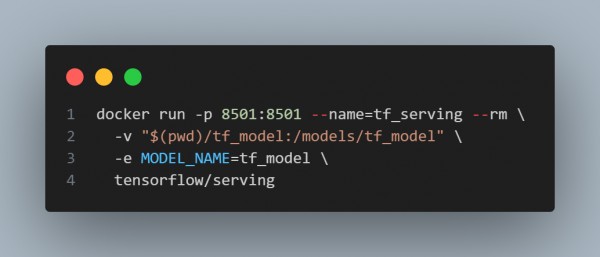
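As a rough text version of this step, here is a minimal sketch. It assumes TensorFlow 2.x and NumPy are installed. The toy data, the layer sizes, and the names `versioned_export_dir`, `train_and_export`, and `my_model` are illustrative choices, not taken from the original code. TensorFlow Serving looks for a numeric version subdirectory under the model directory, which is what the path helper builds.

```python
from pathlib import Path


def versioned_export_dir(base_dir: str, model_name: str, version: int) -> Path:
    """Return <base_dir>/<model_name>/<version>, the layout TensorFlow Serving scans."""
    if version < 0:
        raise ValueError("version must be a non-negative integer")
    return Path(base_dir) / model_name / str(version)


def train_and_export(export_dir: Path) -> None:
    """Train a toy Keras regressor and write it out as a SavedModel."""
    # Imported here so the path helper above works without TensorFlow installed.
    import numpy as np
    import tensorflow as tf

    # Toy data (assumption): learn y = 2x + 1, purely for illustration.
    x = np.linspace(-1.0, 1.0, 200, dtype="float32").reshape(-1, 1)
    y = 2.0 * x + 1.0

    model = tf.keras.Sequential([
        tf.keras.Input(shape=(1,)),
        tf.keras.layers.Dense(8, activation="relu"),
        tf.keras.layers.Dense(1),
    ])
    model.compile(optimizer="adam", loss="mse")
    model.fit(x, y, epochs=5, verbose=0)

    export_dir.parent.mkdir(parents=True, exist_ok=True)
    if hasattr(model, "export"):
        # Newer Keras releases: export() writes a SavedModel for serving.
        model.export(str(export_dir))
    else:
        # Older TF 2.x releases: fall back to the low-level SavedModel API.
        tf.saved_model.save(model, str(export_dir))
```

Calling `train_and_export(versioned_export_dir("models", "my_model", 1))` should leave a SavedModel under `models/my_model/1`. Which export call applies depends on the installed TensorFlow/Keras version, so treat the branch above as a hedge rather than a fixed recipe.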

- 2. Start TensorFlow Serving Locally

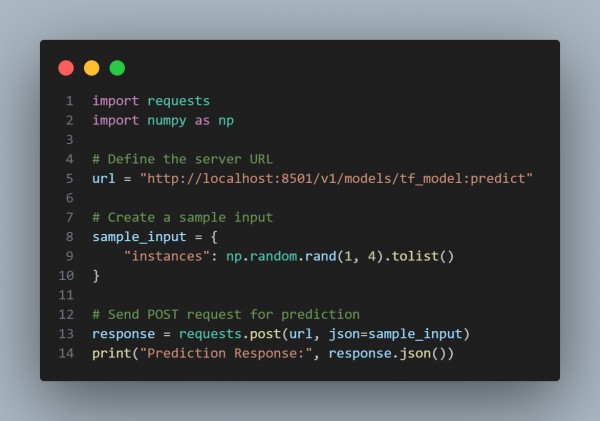
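For reference, the Docker invocation for this step can be sketched from Python as follows. It assumes Docker is installed and uses the public `tensorflow/serving` image. The helper name `serving_command`, the model name `my_model`, and the example path are hypothetical. Inside the container, port 8501 is the REST endpoint and the model is expected under `/models/<MODEL_NAME>`.

```python
import shlex


def serving_command(model_dir: str, model_name: str = "my_model", rest_port: int = 8501) -> list:
    """Build a `docker run` command that serves one SavedModel directory over REST.

    model_dir should be an absolute path to the directory that contains the
    numeric version subfolders (for example .../my_model with .../my_model/1 inside).
    """
    return [
        "docker", "run", "--rm",
        # Map the chosen host port to the container's REST port (8501).
        "-p", f"{rest_port}:8501",
        # Bind-mount the model directory where the image looks for models.
        "--mount", f"type=bind,source={model_dir},target=/models/{model_name}",
        # Tell the server which model under /models to load.
        "-e", f"MODEL_NAME={model_name}",
        "-t", "tensorflow/serving",
    ]


def serving_command_line(model_dir: str, model_name: str = "my_model") -> str:
    """Return the same command as a single shell-quoted string for copy and paste."""
    return shlex.join(serving_command(model_dir, model_name))
```

To start the server from Python, pass the list to `subprocess.run(serving_command("/abs/path/to/models/my_model"), check=True)`, or print `serving_command_line(...)` and run it in a terminal.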

- 3. Send a Prediction Request Using Python
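A minimal sketch of such a request is shown below. It uses only the Python standard library (`urllib`) rather than a third-party HTTP client, and it assumes the server from step 2 is reachable on `localhost:8501` with a model named `my_model`. The function names are illustrative. The REST predict endpoint accepts a JSON body with an `instances` list and returns a `predictions` list.

```python
import json
import urllib.request


def predict_url(model_name: str, host: str = "localhost", port: int = 8501) -> str:
    """Return the TensorFlow Serving REST predict endpoint for a model."""
    return f"http://{host}:{port}/v1/models/{model_name}:predict"


def build_payload(instances: list) -> bytes:
    """Encode a batch of inputs in the row-oriented 'instances' JSON format."""
    return json.dumps({"instances": instances}).encode("utf-8")


def predict(instances: list, model_name: str = "my_model",
            host: str = "localhost", port: int = 8501, timeout: float = 10.0) -> list:
    """POST the instances to the server and return its 'predictions' list."""
    request = urllib.request.Request(
        predict_url(model_name, host, port),
        data=build_payload(instances),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["predictions"]
```

With the server running, `predict([[0.5], [1.0]])` sends two inputs in one request, which is also how batch prediction works over REST. The shape of each instance must match the input shape of the exported model.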

The code above uses the following key approaches:

- Model Training & Exporting: A Keras model is trained and saved in SavedModel format for TensorFlow Serving.

- Running TensorFlow Serving: The model is served using Docker with TensorFlow Serving, exposing a REST API.

- Making Real-Time Predictions: Uses a POST request to send inference data and get predictions from the deployed model.

- Scalability & Production Readiness: Supports batch requests, gRPC, and RESTful API for scalable deployment.

Hence, TensorFlow Serving enables fast, scalable, and real-time deployment of deep learning models via REST and gRPC, making it ideal for production environments.
